WbW-Pro function documentation

Each of the following functions are methods of WbEnvironment class. Tools may be called using the convention in the following example:

from whitebox_workflows import WbEnvironment

wbe = WbEnvironment()

# Set up the environment, e.g. working directory, verbose mode, num_procs

raster = wbe.read_raster('my_raster.tif') # Read some kind of data

result = wbe.shape_index(raster) # Call some kind of function

...

- accumulation_curvature

- assess_route

- average_horizon_distance

- breakline_mapping

- canny_edge_detection

- classify_lidar

- colourize_based_on_class

- colourize_based_on_point_returns

- curvedness

- dbscan

- dem_void_filling

- depth_to_water

- difference_curvature

- evaluate_training_sites

- filter_lidar

- filter_lidar_by_percentile

- filter_lidar_by_reference_surface

- fix_dangling_arcs

- fuzzy_knn_classification

- generalize_classified_raster

- generalize_with_similarity

- generating_function

- horizon_area

- horizontal_excess_curvature

- hydrologic_connectivity

- image_segmentation

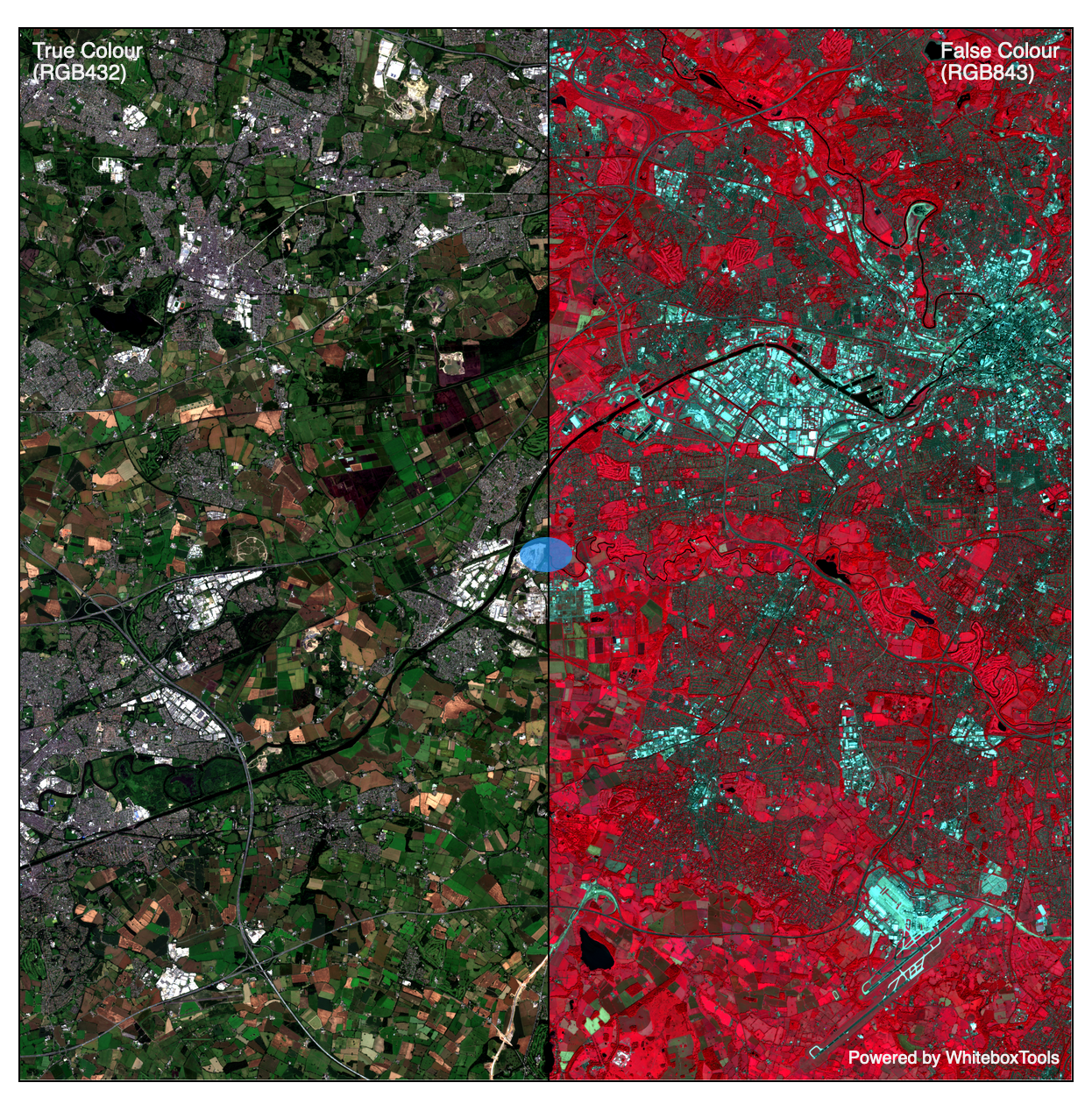

- image_slider

- improved_ground_point_filter

- inverse_pca

- knn_classification

- knn_regression

- lidar_contour

- lidar_eigenvalue_features

- lidar_point_return_analysis

- lidar_sibson_interpolation

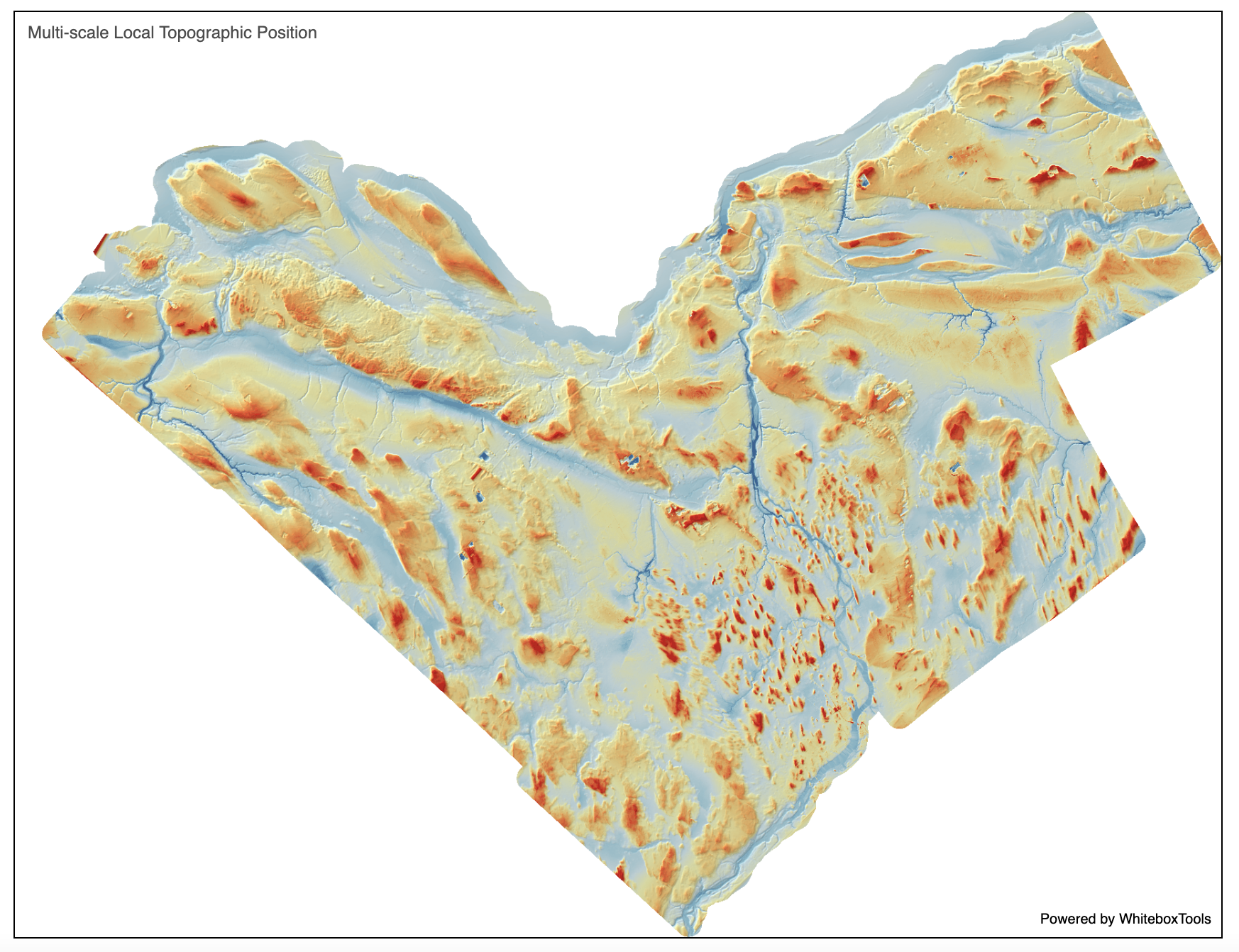

- local_hypsometric_analysis

- logistic_regression

- low_points_on_headwater_divides

- min_dist_classification

- minimal_dispersion_flow_algorithm

- modify_lidar

- multiscale_curvatures

- nibble

- openness

- parallelepiped_classification

- phi_coefficient

- piecewise_contrast_stretch

- prune_vector_streams

- random_forest_classification_fit

- random_forest_classification_predict

- random_forest_regression_fit

- random_forest_regression_predict

- reconcile_multiple_headers

- recover_flightline_info

- recreate_pass_lines

- remove_field_edge_points

- remove_raster_polygon_holes

- ridge_and_valley_vectors

- ring_curvature

- river_centerlines

- rotor

- shadow_animation

- shadow_image

- shape_index

- sieve

- sky_view_factor

- skyline_analysis

- slope_vs_aspect_plot

- smooth_vegetation_residual

- sort_lidar

- split_lidar

- svm_classification

- svm_regression

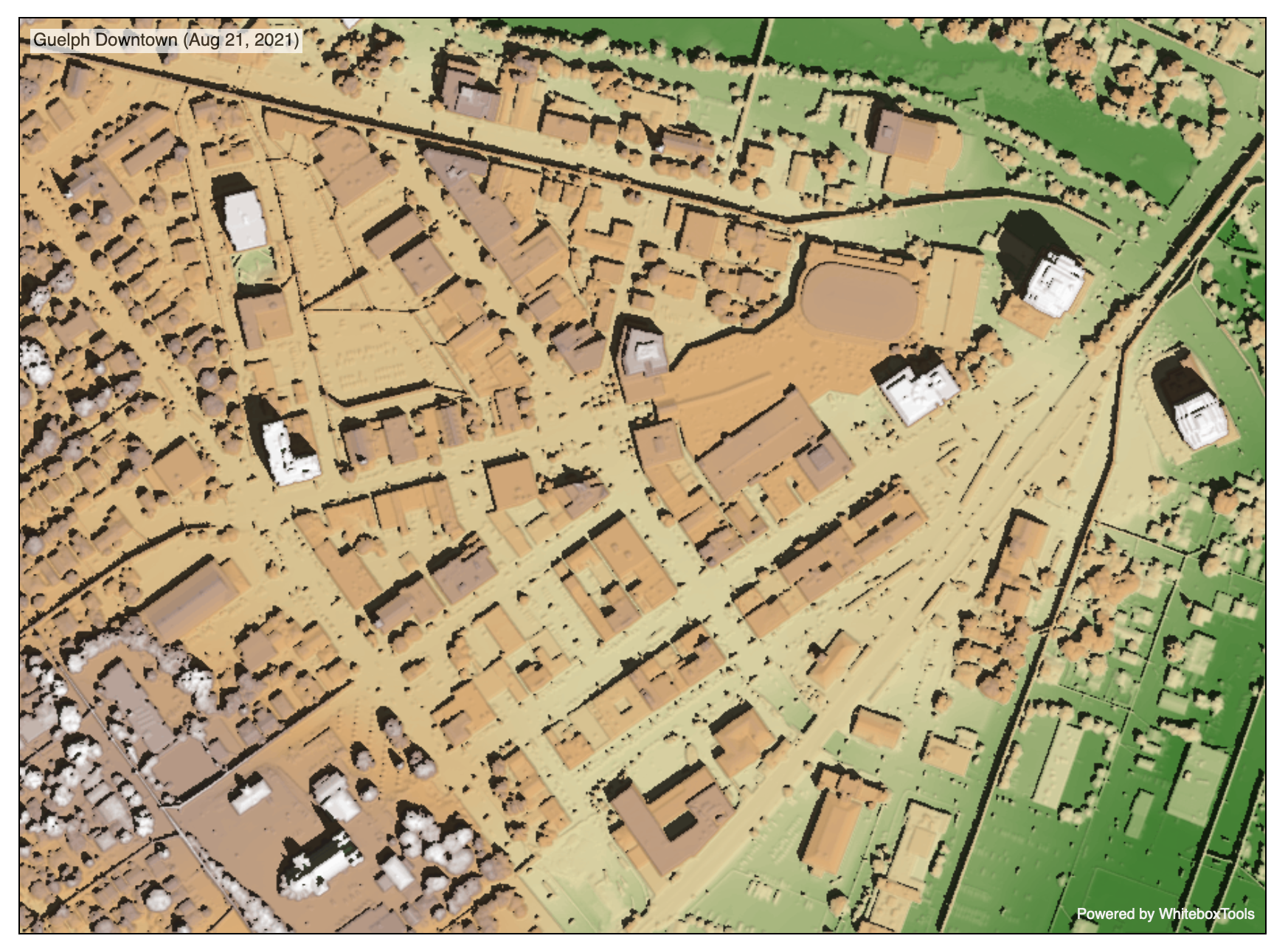

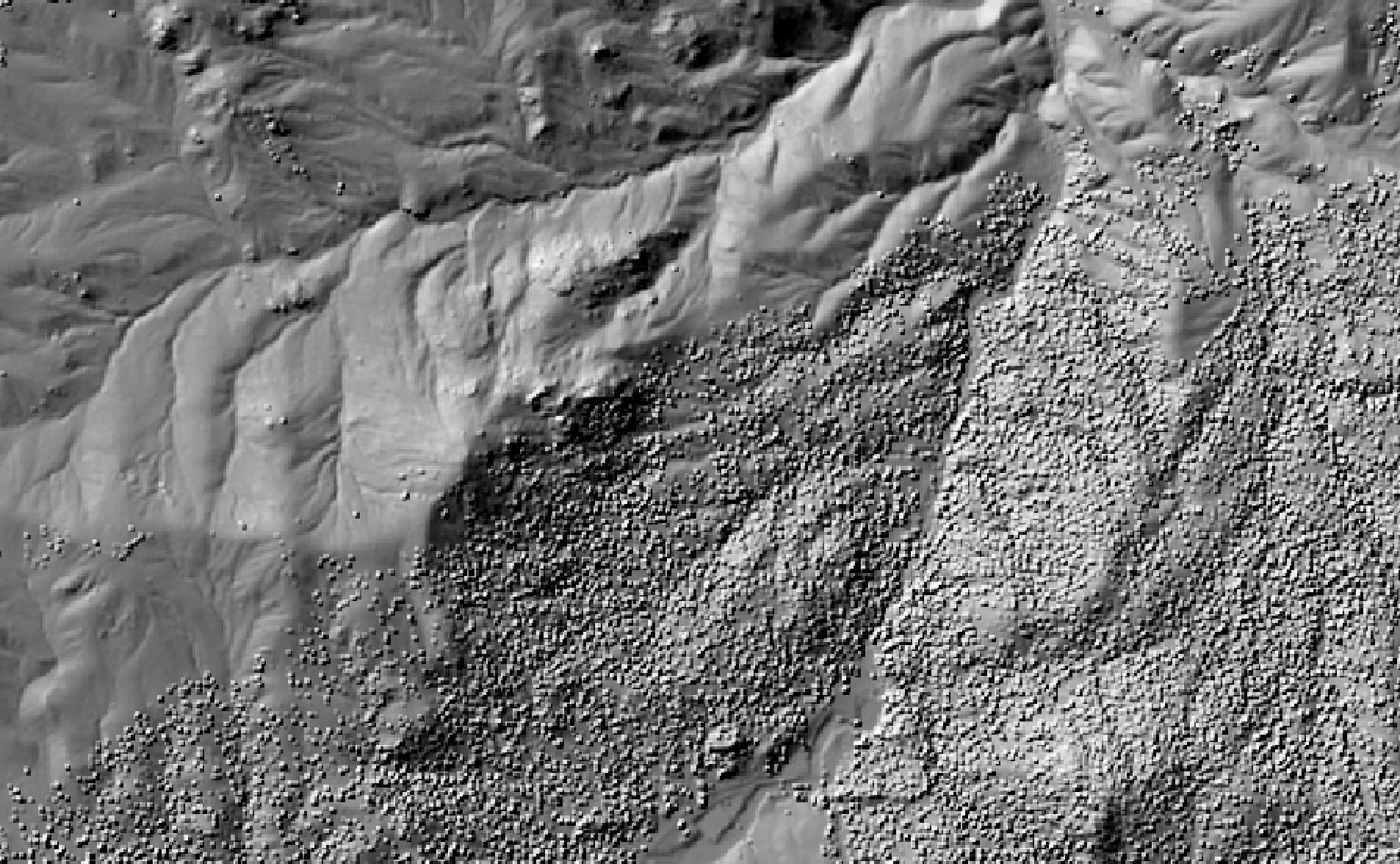

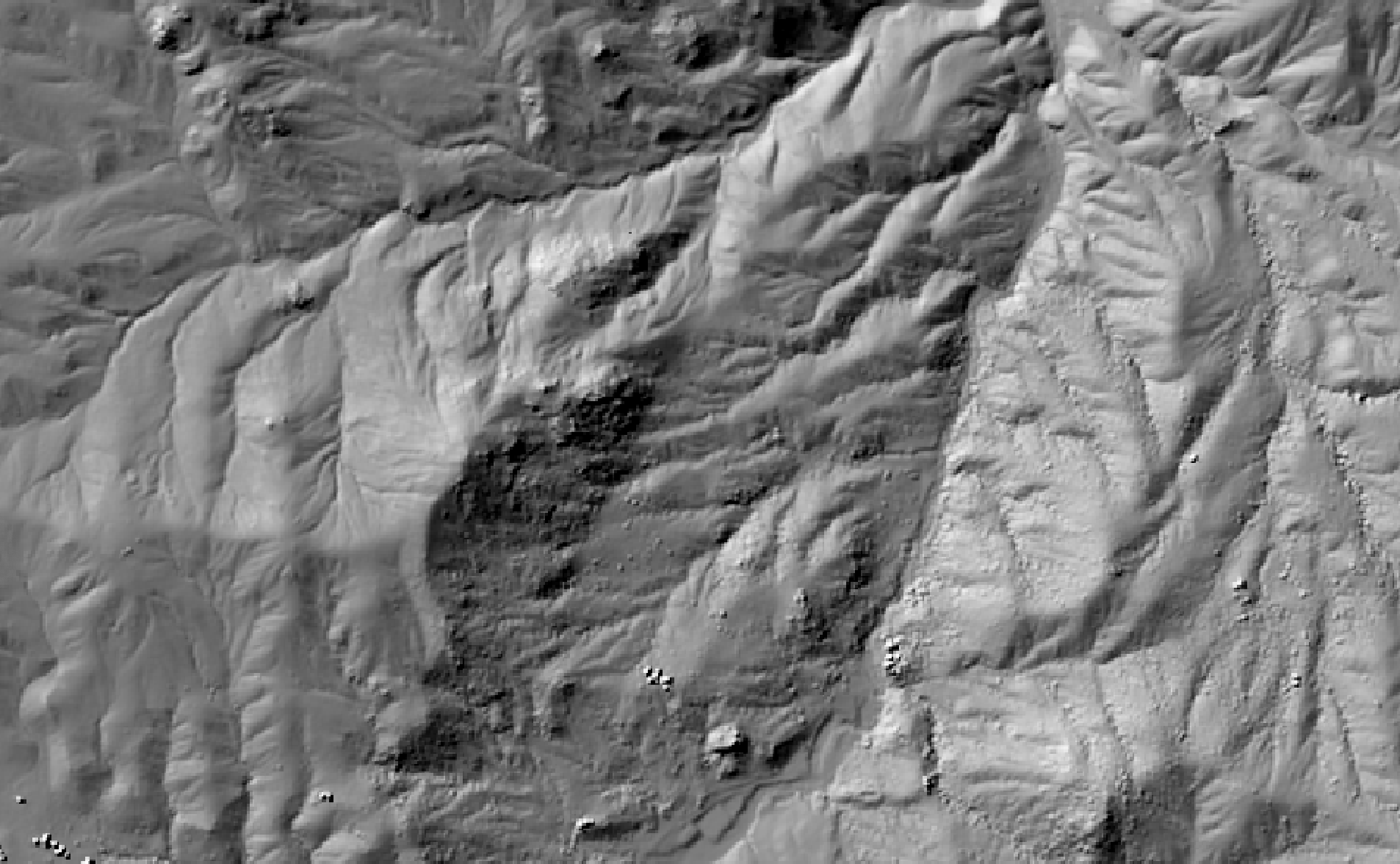

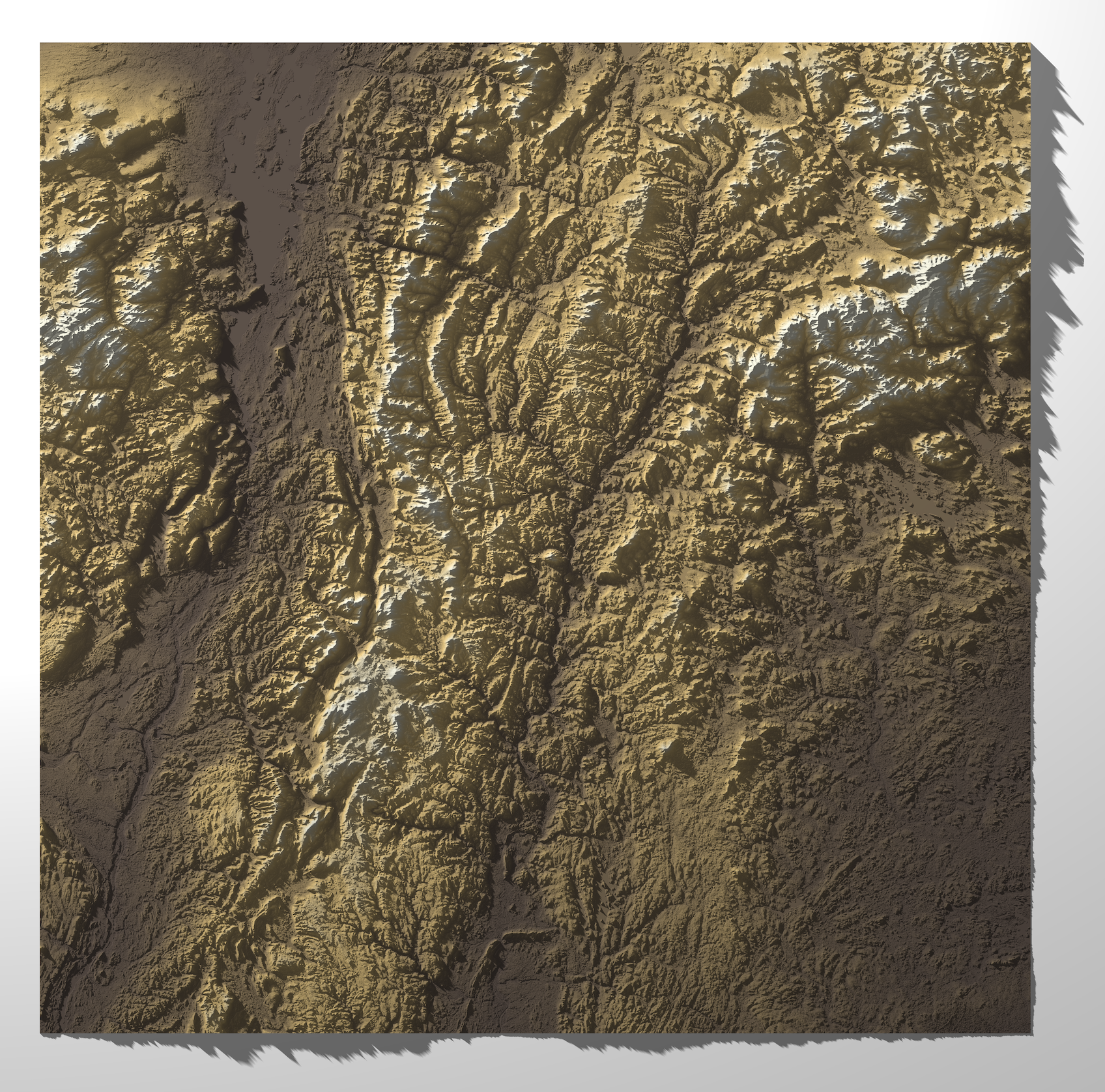

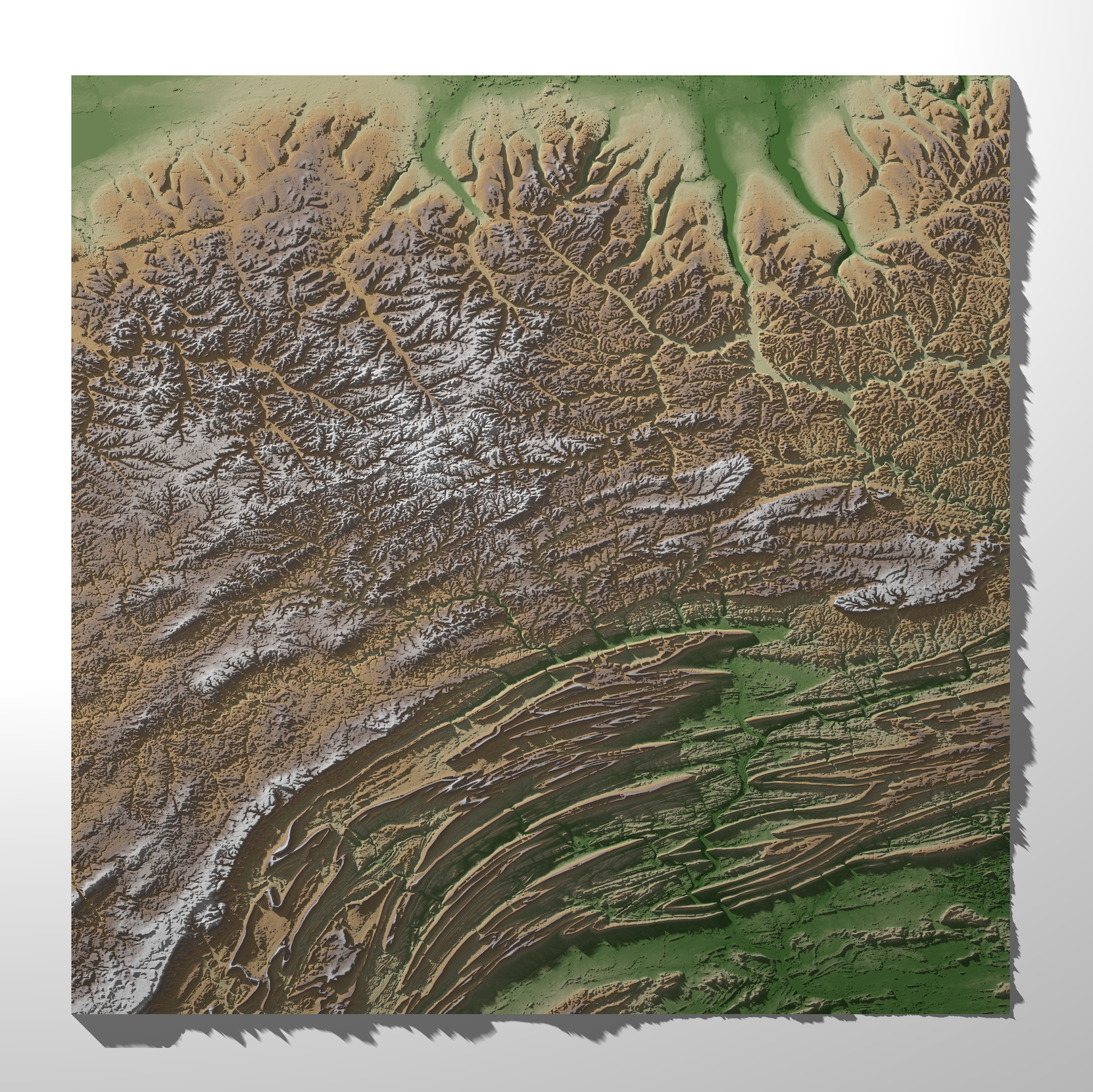

- topo_render

- topographic_position_animation

- topological_breach_burn

- unsphericity

- vertical_excess_curvature

- yield_filter

- yield_map

- yield_normalization

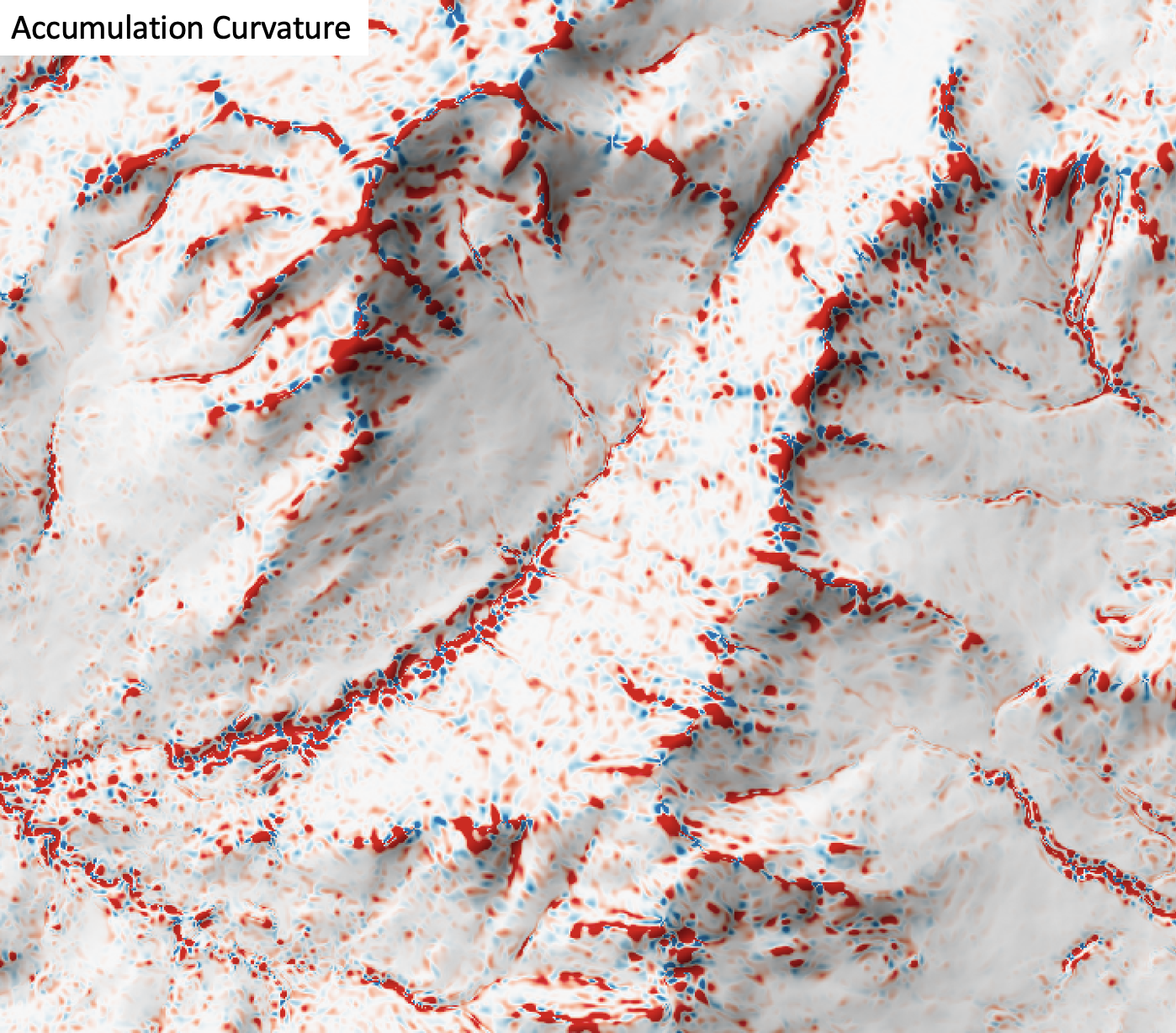

accumulation_curvature

License Information

Use of this function requires a license for Whitebox Workflows for Python Professional (WbW-Pro). Please visit www.whiteboxgeo.com to purchase a license.

Description

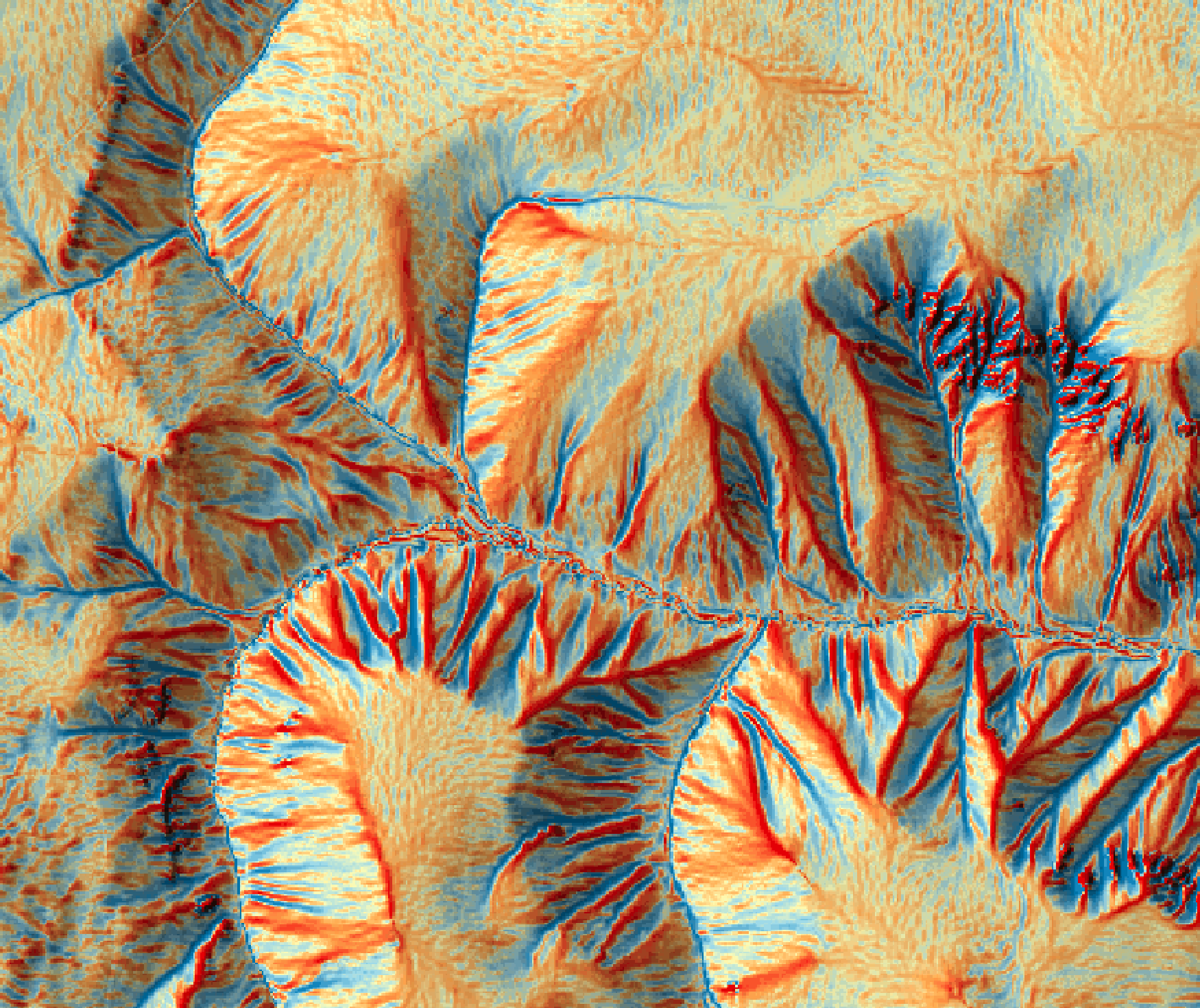

This tool calculates the accumulation curvature from a digital elevation model (DEM). Accumulation curvature is the product of profile (vertical) and tangential (horizontal) curvatures at a location (Shary, 1995). This variable has positive values, zero or greater. Florinsky (2017) states that accumulation curvature is a measure of the extent of local accumulation of flows at a given point in the topographic surface. Accumulation curvature is measured in units of m-2.

The user must specify the name of the input DEM (dem) and the output raster (output). The

The Z conversion factor (zfactor) is only important when the vertical and horizontal units are not the

same in the DEM. When this is the case, the algorithm will multiply each elevation in the DEM by the

Z Conversion Factor. Curvature values are often very small and as such the user may opt to log-transform

the output raster (log). Transforming the values applies the equation by Shary et al. (2002):

Θ' = sign(Θ) ln(1 + 10n|Θ|)

where Θ is the parameter value and n is dependent on the grid cell size.

For DEMs in projected coordinate systems, the tool uses the 3rd-order bivariate Taylor polynomial method described by Florinsky (2016). Based on a polynomial fit of the elevations within the 5x5 neighbourhood surrounding each cell, this method is considered more robust against outlier elevations (noise) than other methods. For DEMs in geographic coordinate systems (i.e. angular units), the tool uses the 3x3 polynomial fitting method for equal angle grids also described by Florinsky (2016).

References

Florinsky, I. (2016). Digital terrain analysis in soil science and geology. Academic Press.

Florinsky, I. V. (2017). An illustrated introduction to general geomorphometry. Progress in Physical Geography, 41(6), 723-752.

Shary PA (1995) Land surface in gravity points classification by a complete system of curvatures. Mathematical Geology 27: 373–390.

Shary P. A., Sharaya L. S. and Mitusov A. V. (2002) Fundamental quantitative methods of land surface analysis. Geoderma 107: 1–32.

See Also

tangential_curvature, profile_curvature, minimal_curvature, maximal_curvature, mean_curvature, gaussian_curvature

Function Signature

def accumulation_curvature(self, dem: Raster, log_transform: bool = False, z_factor: float = 1.0) -> Raster: ...

assess_route

License Information

Use of this function requires a license for Whitebox Workflows for Python Professional (WbW-Pro). Please visit www.whiteboxgeo.com to purchase a license.

Description

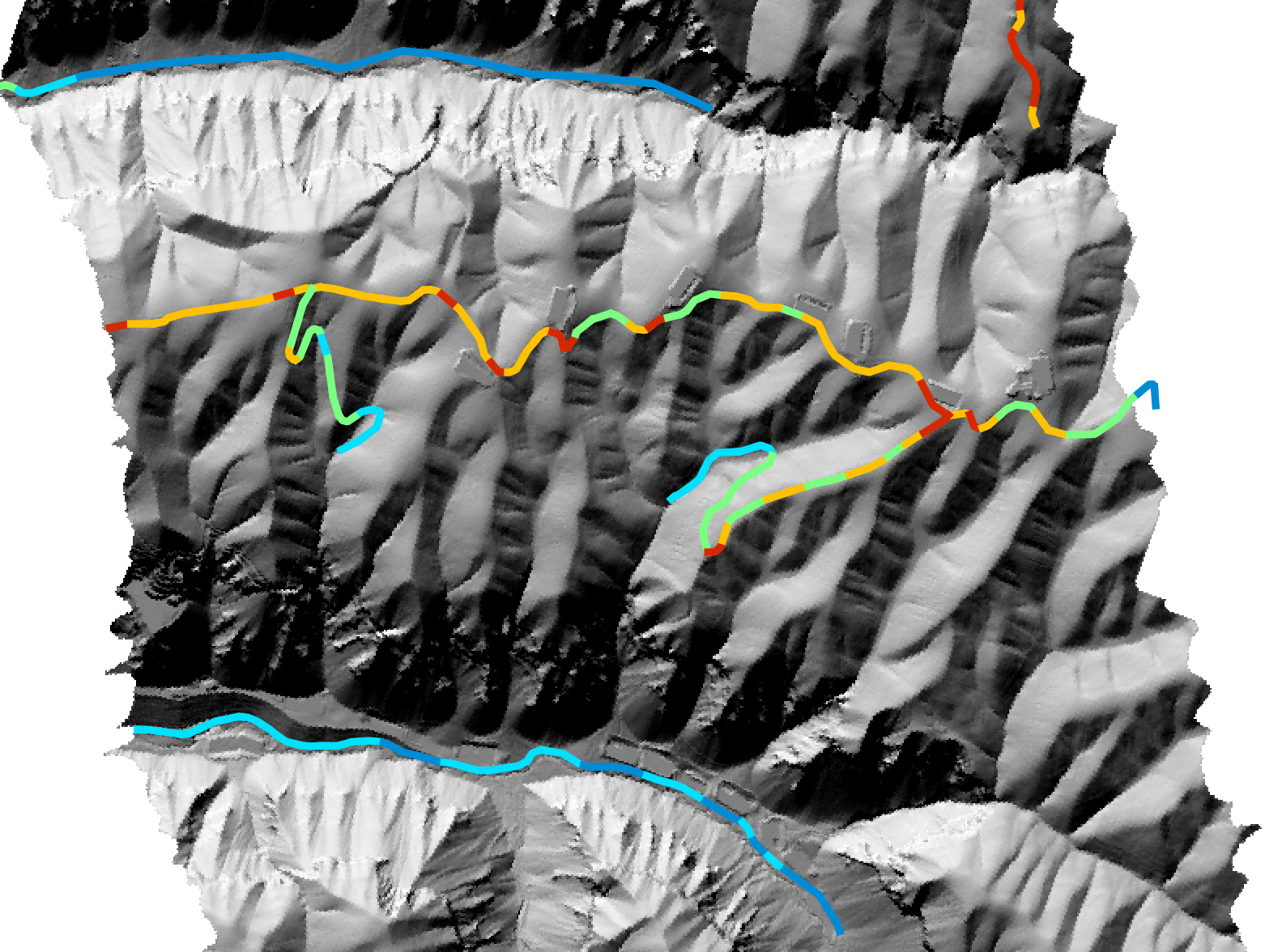

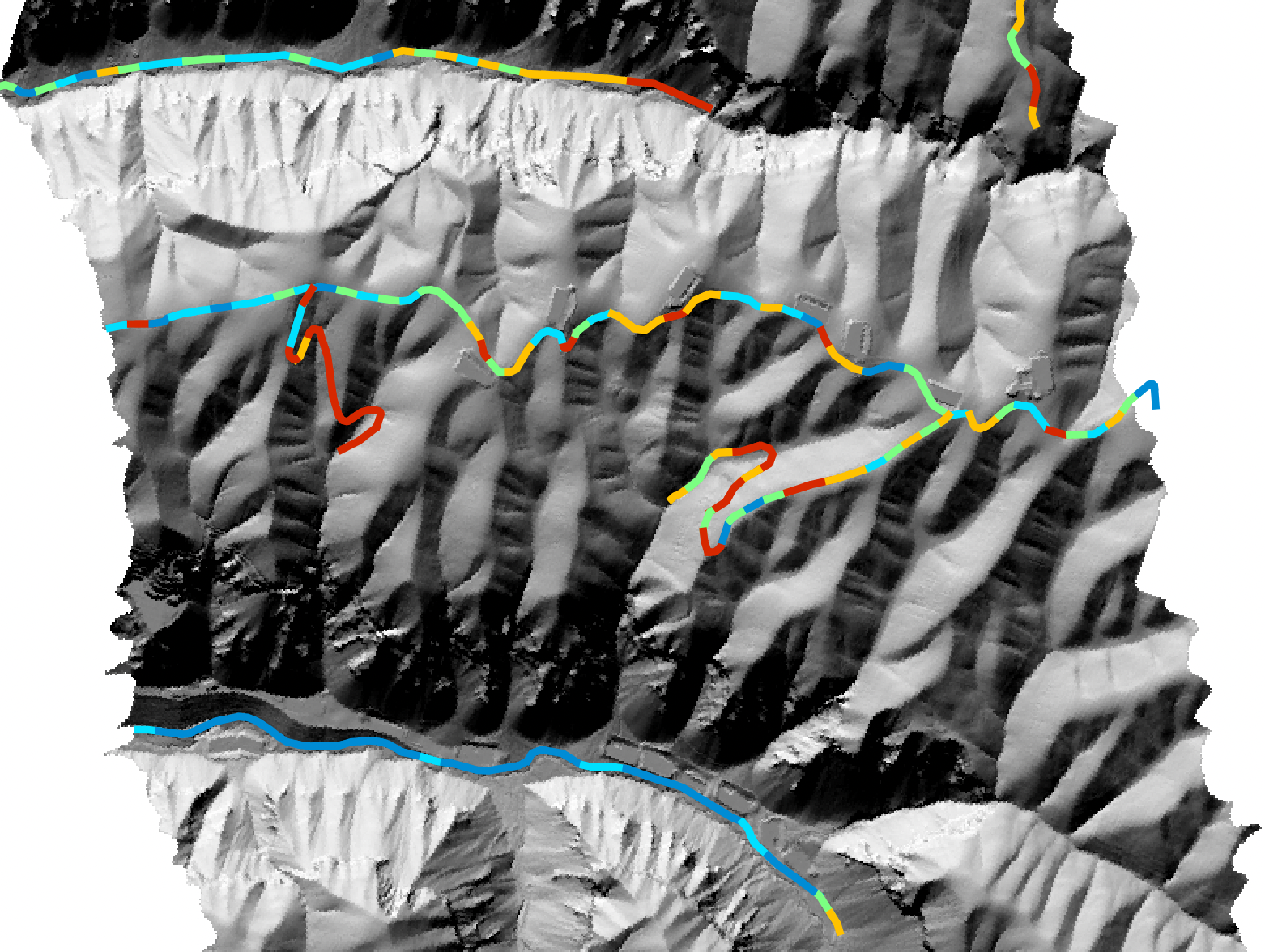

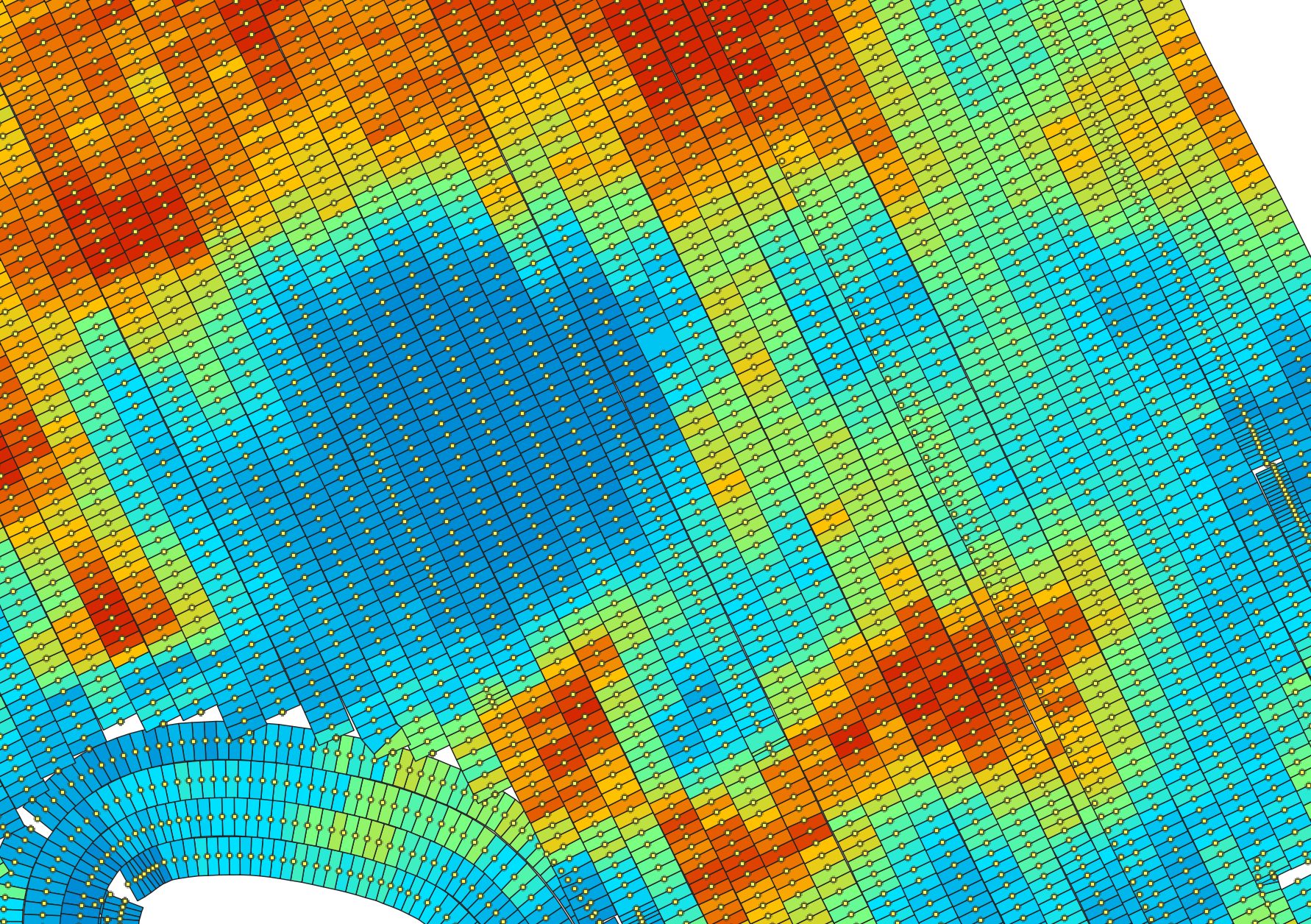

This tool assesses the variability in slope, elevation, and visibility along a line vector, which may

be a footpath, road, river or any other route. The user must specify the name of the input line vector

(routes), the input raster digital elevation model file (dem), and the output line vector

(output). The algorithm initially splits the input line vector in equal-length segments (length).

For each line segment, the tool then calculates the average slope (AVG_SLOPE), minimum and maximum

elevations (MIN_ELEV, MAX_ELEV), the elevation range or relief (RELIEF), the path sinuosity

(SINUOSITY), the number of changes in slope direction or breaks-in-slope (CHG_IN_SLP), and the

maximum visibility (VISIBILITY). Each of these metrics are output to the attribute table of the output

vector, along with the feature identifier (FID); any attributes associated with the input parent

feature will also be copied into the output table. Slope and elevation metrics are measured along the

2D path based on the elevations of each of the row and column intersection points of the raster with

the path, estimated from linear-interpolation using the two neighbouring elevations on either side of

the path. Sinuosity is calculated as the ratio of the along-surface (i.e. 3D) path length, divided by

the 3D distance between the start and end points of the segment. CHG_IN_SLP can be thought of as a crude

measure of path roughness, although this will be very sensitive to the quality of the DEM. The visibility

metric is based on the Yokoyama et al. (2002) openness index, which calculates the average horizon

angle in the eight cardal directions to a maximum search distance (dist), measured in grid cells.

Note that the input DEM must be in a projected coordinate system. The DEM and the input routes vector must be also share the same coordinate system. This tool also works best when the input DEM is of high quality and fine spatial resolution, such as those derived from LiDAR data sets.

Maximum segment visibility:

Average segment slope:

For more information about this tool, see this blog on the WhiteboxTools homepage.

See Also

Function Signature

def assess_route(self, routes: Vector, dem: Raster, segment_length: float = 100.0, search_radius: int = 15) -> Vector: ...

average_horizon_distance

License Information

Use of this function requires a license for Whitebox Workflows for Python Professional (WbW-Pro). Please visit www.whiteboxgeo.com to purchase a license.

This tool calculates the spatial pattern of average distance to the horizon based on an input digital elevation model (DEM). As such, the index is a measure of landscape visibility. In the image below, lighter areas have a longer average distance to the horizon, measured in map units.

The user must specify an input DEM (dem), the azimuth fraction (az_fraction), the maximum search

distance (max_dist), and the height offset of the observer (observer_hgt_offset). The input DEM

should usually be a digital surface model (DSM) that contains significant off-terrain objects. Such a

model, for example, could be created using the first-return points of a LiDAR data set, or using the

lidar_digital_surface_model tool. The azimuth

fraction should be an even divisor of 360-degrees and must be between 1-45 degrees.

The tool operates by calculating horizon angle (see horizon_angle)

rasters from the DSM based on the user-specified azimuth fraction (az_fraction). For example, if an azimuth

fraction of 15-degrees is specified, horizon angle rasters would be calculated for the solar azimuths 0,

15, 30, 45... A horizon angle raster evaluates the vertical angle between each grid cell in a DSM and a

distant obstacle (e.g. a mountain ridge, building, tree, etc.) that obscures the view in a specified

direction. In calculating horizon angle, the user must specify the maximum search distance (max_dist),

in map units, beyond which the query for higher, more distant objects will cease. This parameter strongly

impacts the performance of the function, with larger values resulting in significantly longer processing-times.

The observer_hgt_offset parameter can be used to add an increment to the source cell's elevation. For

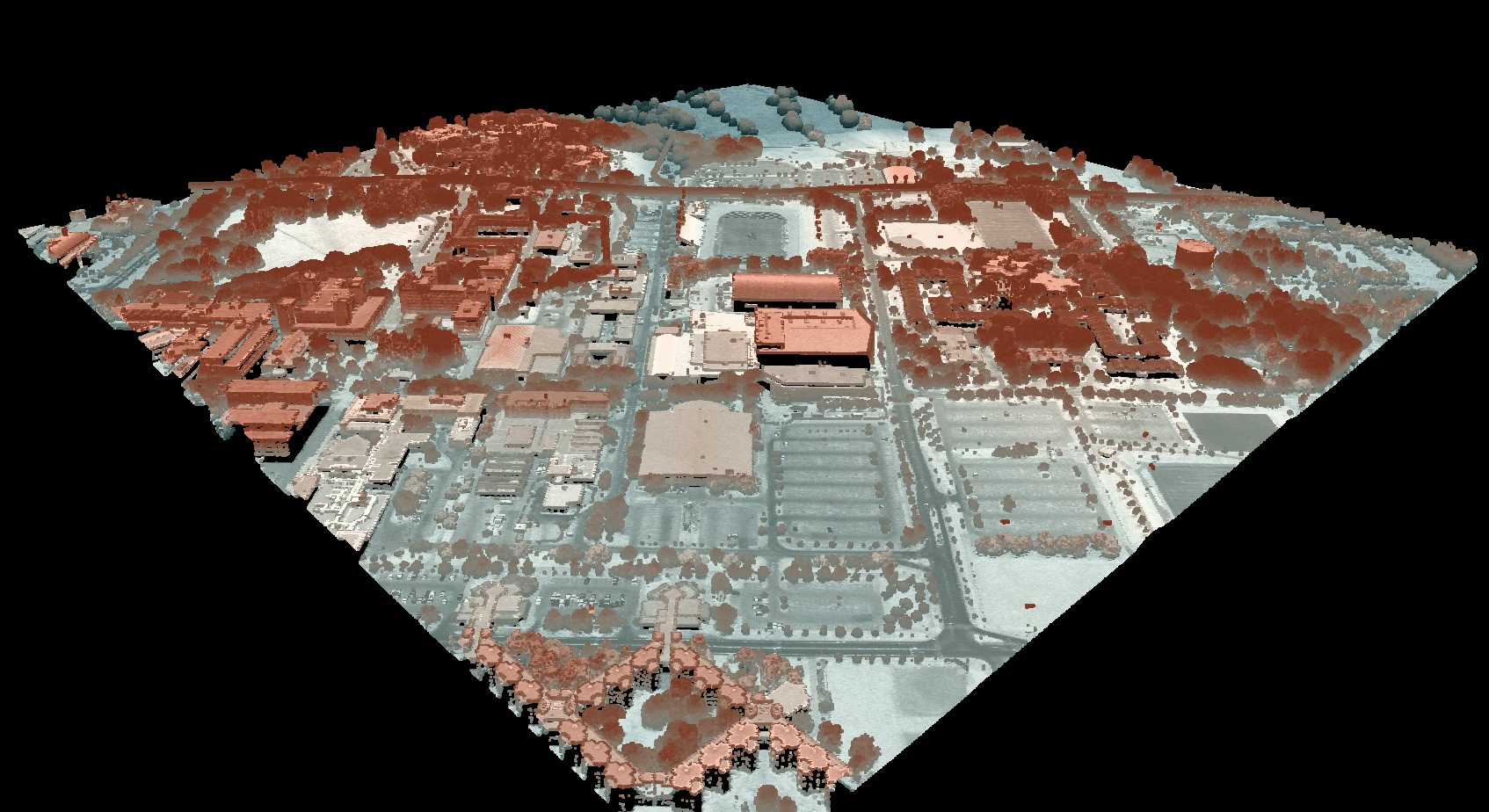

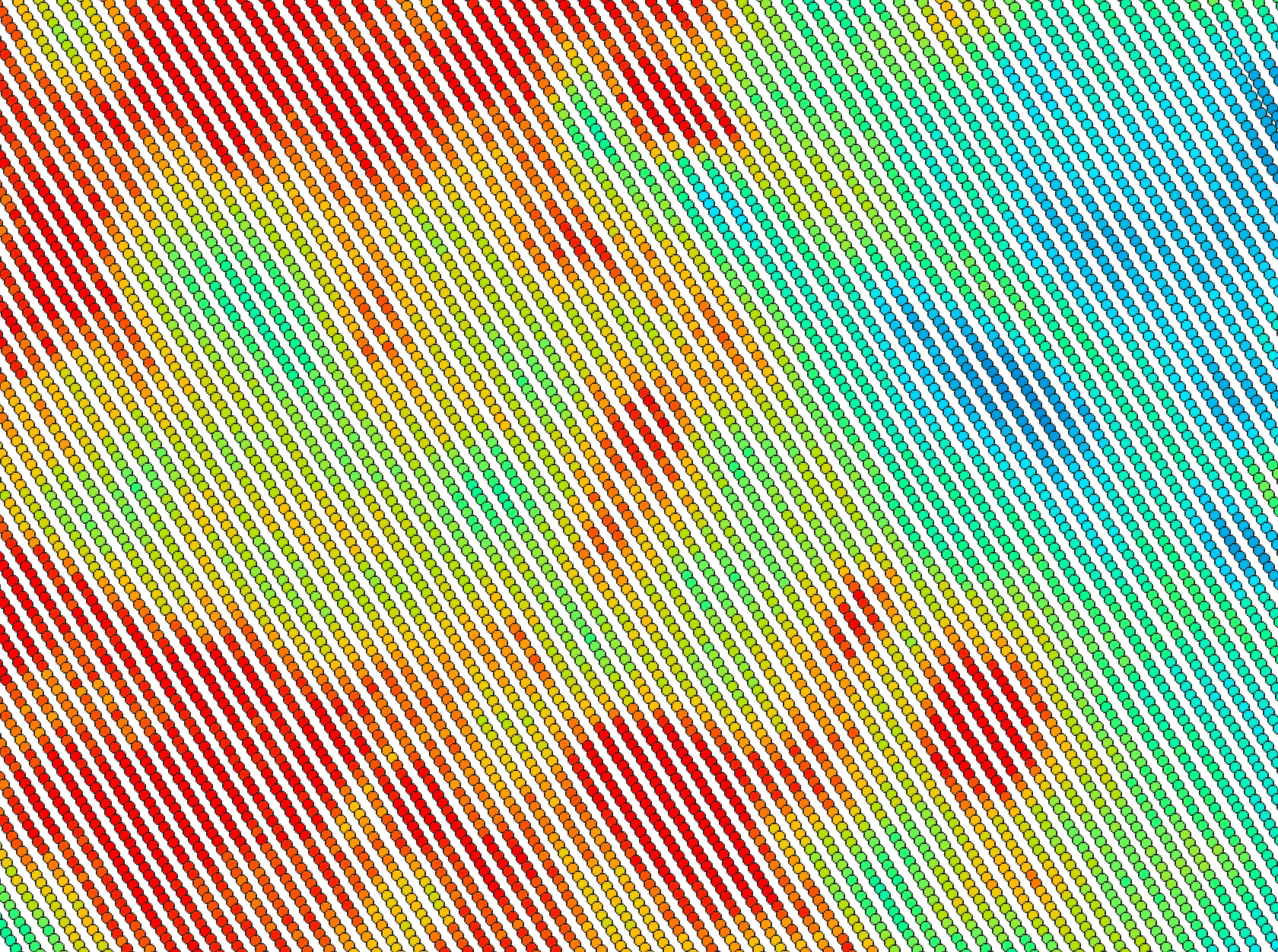

example, the following image shows the spatial pattern derived from a LiDAR DSM using observer_hgt_offset = 0.0:

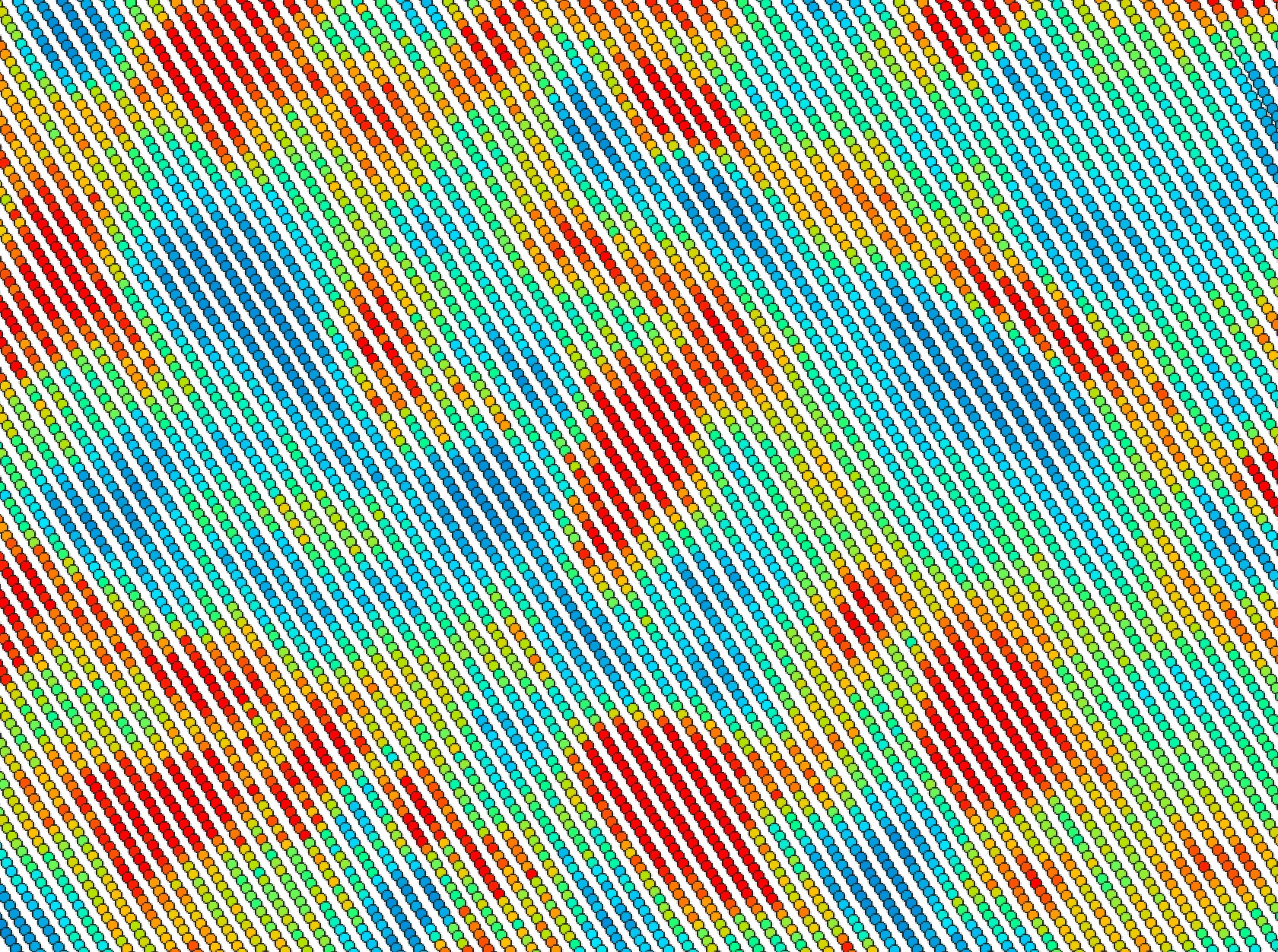

Notice that there are several places, plarticularly on the flatter rooftops, where the local noise in the LiDAR DEM, associated with the individual scan lines, has resulted in a noisy pattern in the output. By adding a small height offset of the scale of this noise variation (0.15 m), we see that most of this noisy pattern is removed in the output below:

This feature makes the function more robust against DEM noise. As another example of the usefulness of

this additional parameter, in the image below, the observer_hgt_offset parameter has been used to

measure the pattern of the index at a typical human height (1.7 m):

Notice how at this height the average horizon distance becomes much farther on some of the flat rooftops where a guard wall prevents further viewing areas at shorter observer heights.

The output of this function is similar to the Average View Distance provided by the Sky View tool in Saga GIS. However, for a given maximum search distance, the Whitebox tool is likely faster to compute and has the added advantage of offering the observer's height parameter, as described above.

See Also

sky_view_factor, horizon_area, openness, lidar_digital_surface_model, horizon_angle

Function Signature

def average_horizon_distance(self, dem: Raster, az_fraction: float = 5.0, max_dist: float = float('inf'), observer_hgt_offset: float = 0.0) -> Raster: ...

breakline_mapping

License Information

Use of this function requires a license for Whitebox Workflows for Python Professional (WbW-Pro). Please visit www.whiteboxgeo.com to purchase a license.

Description

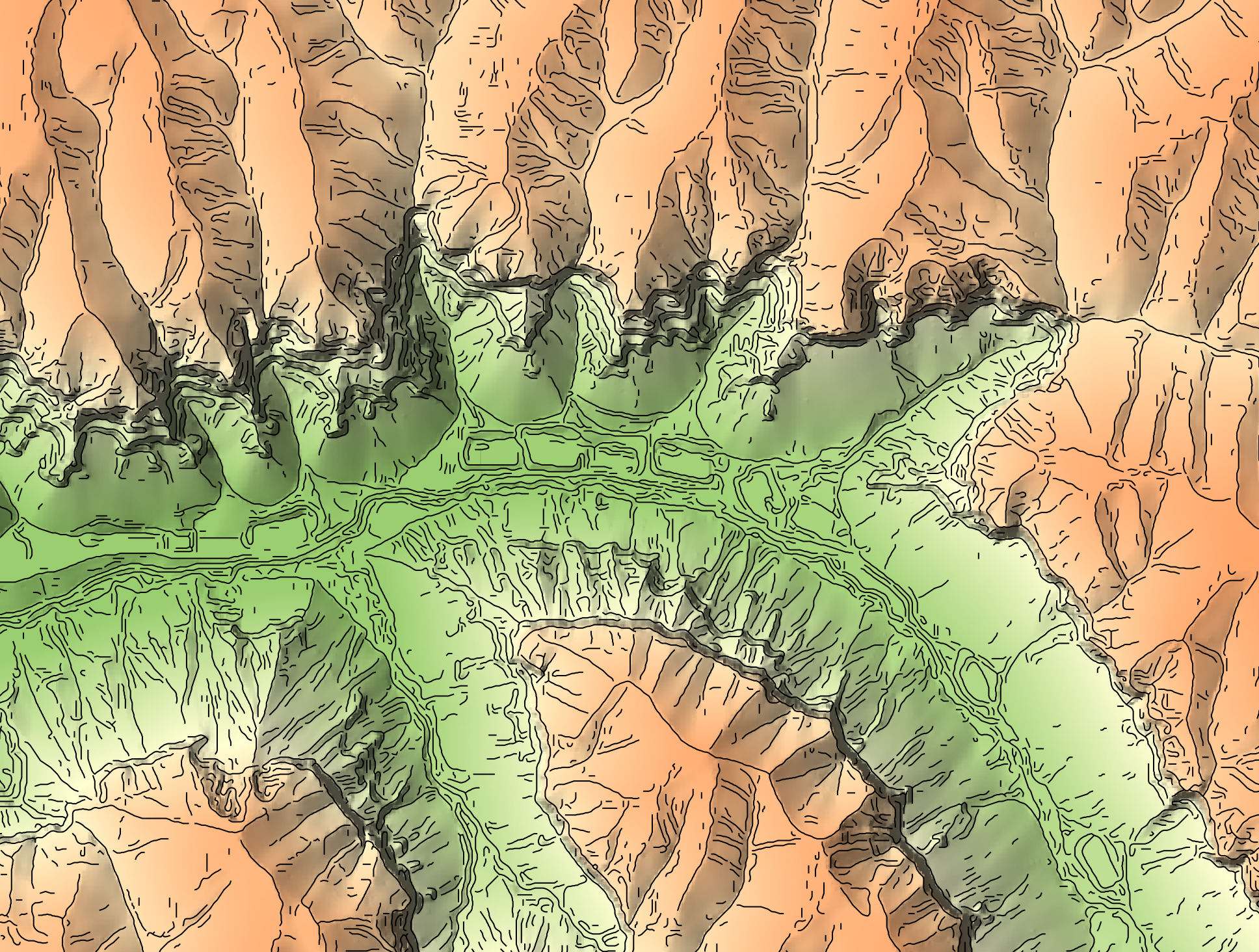

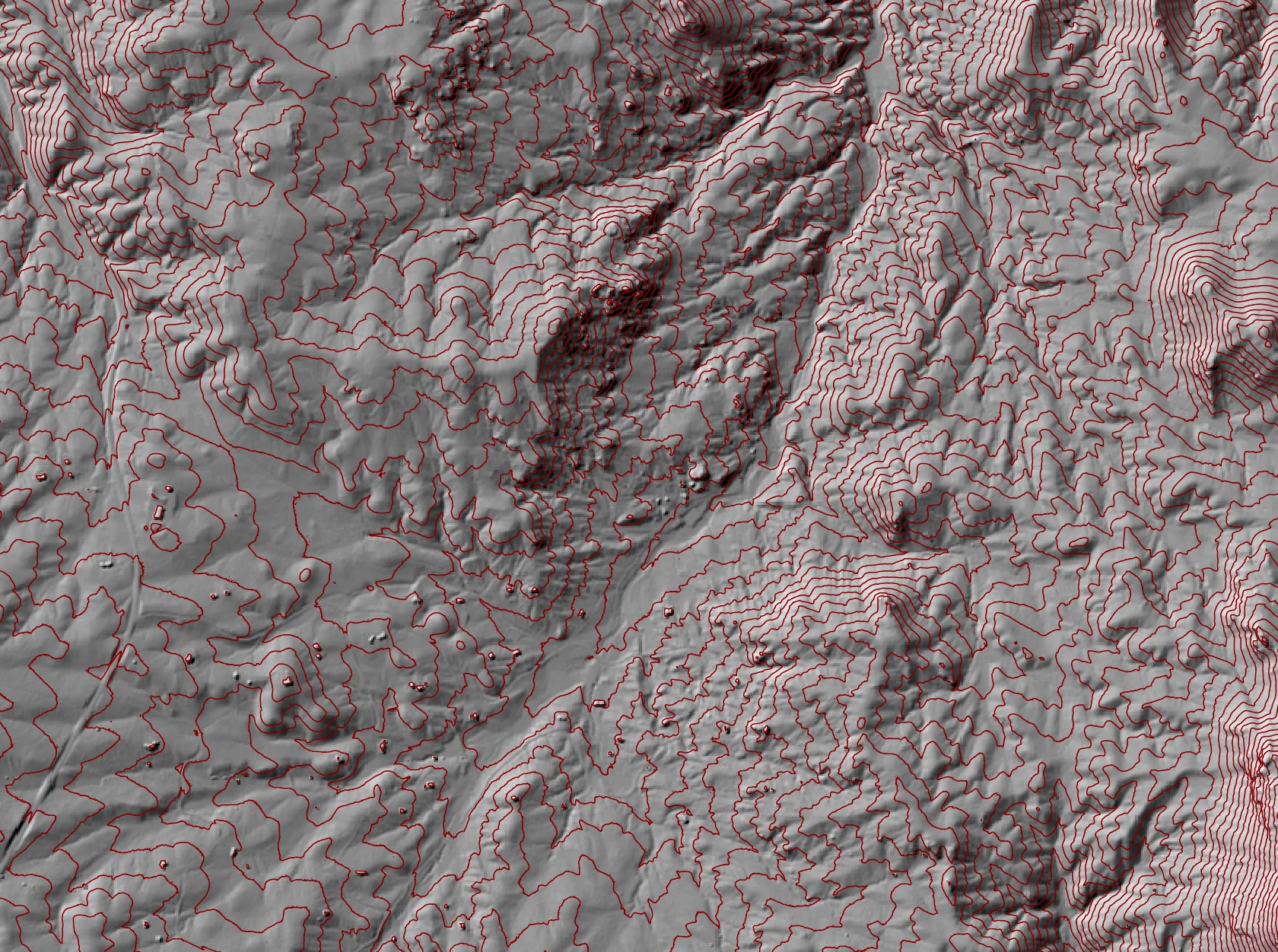

This tool can be used to map breaklines in an input digital elevation model (DEM; input). Breaklines are

locations of high surface curvature, in any direction, measured using curvedness. Curvedness values are

log-transformed using the resolution-dependent method proposed by Shary et al. (2002). Breaklines are coincident

with grid cells that have log-transformed curvedness values exceeding a user-specified threshold value

(thresshold). While curvedness is measured within the range 0 to infinity, values are typically lower.

Appropriate values for the threshold parameter are commonly in the 1 to 5 range. Lower threshold values will

result in more extensive breakline mapping and vice versa. The algorithm will vectorize breakline features and

the output of this tool (output) is a line vector. Line features that are less than a user-specified length

(in grid cells; min_length), will not be output.

Watch the breakline mapping video for an example of how to run the tool.

References

Shary P. A., Sharaya L. S. and Mitusov A. V. (2002) Fundamental quantitative methods of land surface analysis. Geoderma 107: 1–32.

See Also

Function Signature

def breakline_mapping(self, dem: Raster, threshold: float = 0.8, min_length: int = 3) -> Vector: ...

canny_edge_detection

License Information

Use of this function requires a license for Whitebox Workflows for Python Professional (WbW-Pro). Please visit www.whiteboxgeo.com to purchase a license.

Description

This tool performs a Canny edge-detection filtering

operation on an input image (input). The Canny edge-detection filter is a multi-stage filter that

combines a Gassian filtering (gaussian_filter) operation with various thresholding operations to

generate a single-cell wide edges output raster (output). The sigma parameter, measured in grid

cells determines the size of the Gaussian filter kernel. The low and high parameters determine

the characteristics of the thresholding steps; both parameters range from 0.0 to 1.0.

By default, the output raster will be Boolean, with 1's designating edge-cells. It is possible, using the

add_back parameter to add the edge cells back into the original image, providing an edge-enchanced

output, similar in concept to the unsharp_masking operation.

References

This implementation was inspired by the algorithm described here: https://towardsdatascience.com/canny-edge-detection-step-by-step-in-python-computer-vision-b49c3a2d8123

See Also

gaussian_filter, sobel_filter, unsharp_masking, scharr_filter

Function Signature

def canny_edge_detection(self, input: Raster, sigma: float = 0.5, low_threshold: float = 0.05, high_threshold: float = 0.15, add_back_to_image: bool = False) -> Raster: ...

classify_lidar

License Information

Use of this function requires a license for Whitebox Workflows for Python Professional (WbW-Pro). Please visit www.whiteboxgeo.com to purchase a license.

Description

This tool provides a basic classification of a LiDAR point cloud into ground, building, and vegetation classes. The algorithm performs the classification based on point neighbourhood geometric properties, including planarity, linearity, and height above the ground. There is also a point segmentation involved in the classification process.

The user may specify the names of the input and output LiDAR files (input and output).

Note that if the user does not specify the optional input/output LiDAR files, the tool will search for all

valid LiDAR (*.las, *.laz, *.zlidar) files contained within the current working directory. This feature can be useful

for processing a large number of LiDAR files in batch mode. When this batch mode is applied, the output file

names will be the same as the input file names but with a '_classified' suffix added to the end.

The search distance (radius), defining the radius of the neighbourhood window surrounding each point, must

also be specified. If this parameter is set to a value that is too large, areas of high surface curvature on the

ground surface will be left unclassed and smaller buildings, e.g. sheds, will not be identified. If the parameter is

set too small, areas of low point density may provide unsatisfactory classification values. The larger this search

distance is, the longer the algorithm will take to processs a data set. For many airborne LiDAR data sets, a value

between 1.0 - 3.0 meters is likely appropriate.

The ground threshold parameter (grd_threshold) determines how far above the tophat-transformed

surface a point must be to be excluded from the ground surface. This parameter also determines the maximum distance

a point can be from a plane or line model fit to a neighbourhood of points to be considered part of the model

geometry. Similarly the off-terrain object threshold parameter (oto_threshold) is used to determine how high

above the ground surface a point must be to be considered either a vegetation or building point. The ground threshold

must be smaller than the off-terrain object threshold. If you find that breaks-in-slope in areas of more complex

ground topography are left unclassed (class = 1), this can be addressed by raising the ground threshold parameter.

The planarity and linearity thresholds (planarity_threshold and linearity_threshold) describe the minimum proportion

(0-1) of neighbouring points that must be part of a fitted model before the point is considered to be planar or linear.

Both of these properties are used by the algorithm in a variety of ways to determine final class values. Planar and

linear models are fit using a RANSAC-like algorithm, with the

main user-specified parameter of the number of iterations (iterations). The larger the number of iterations the greater

the processing time will be.

The facade threshold (facade_threshold) is the last user-specified parameter, and determines the maximum horizontal distance

that a point beneath a rooftop edge point may be to be considered part of the building facade (i.e. walls). The default

value is 0.5 m, although this value will depend on a number of factors, such as whether or not the building has balconies.

The algorithm generally does very well to identify deciduous (broad-leaf) trees but can at times struggle with incorrectly classifying dense coniferous (needle-leaf) trees as buildings. When this is the case, you may counter this tendency by lowering the planarity threshold parameter value. Similarly, the algorithm will generally leave overhead power lines as unclassified (class = 1), howevever, if you find that the algorithm misclassifies most such points as high vegetation (class = 5), this can be countered by lowering the linearity threshold value.

Note that if the input file already contains class data, these data will be overwritten in the output file.

See Also

colourize_based_on_class, filter_lidar, modify_lidar, sort_lidar, split_lidar

Function Signature

def classify_lidar(self, input_lidar: Optional[Lidar], search_radius: float = 2.5, grd_threshold: float = 0.1, oto_threshold: float = 1.0, linearity_threshold: float = 0.5, planarity_threshold: float = 0.85, num_iter: int = 30, facade_threshold: float = 0.5) -> Optional[Lidar]: ...

colourize_based_on_class

License Information

Use of this function requires a license for Whitebox Workflows for Python Professional (WbW-Pro). Please visit www.whiteboxgeo.com to purchase a license.

Description

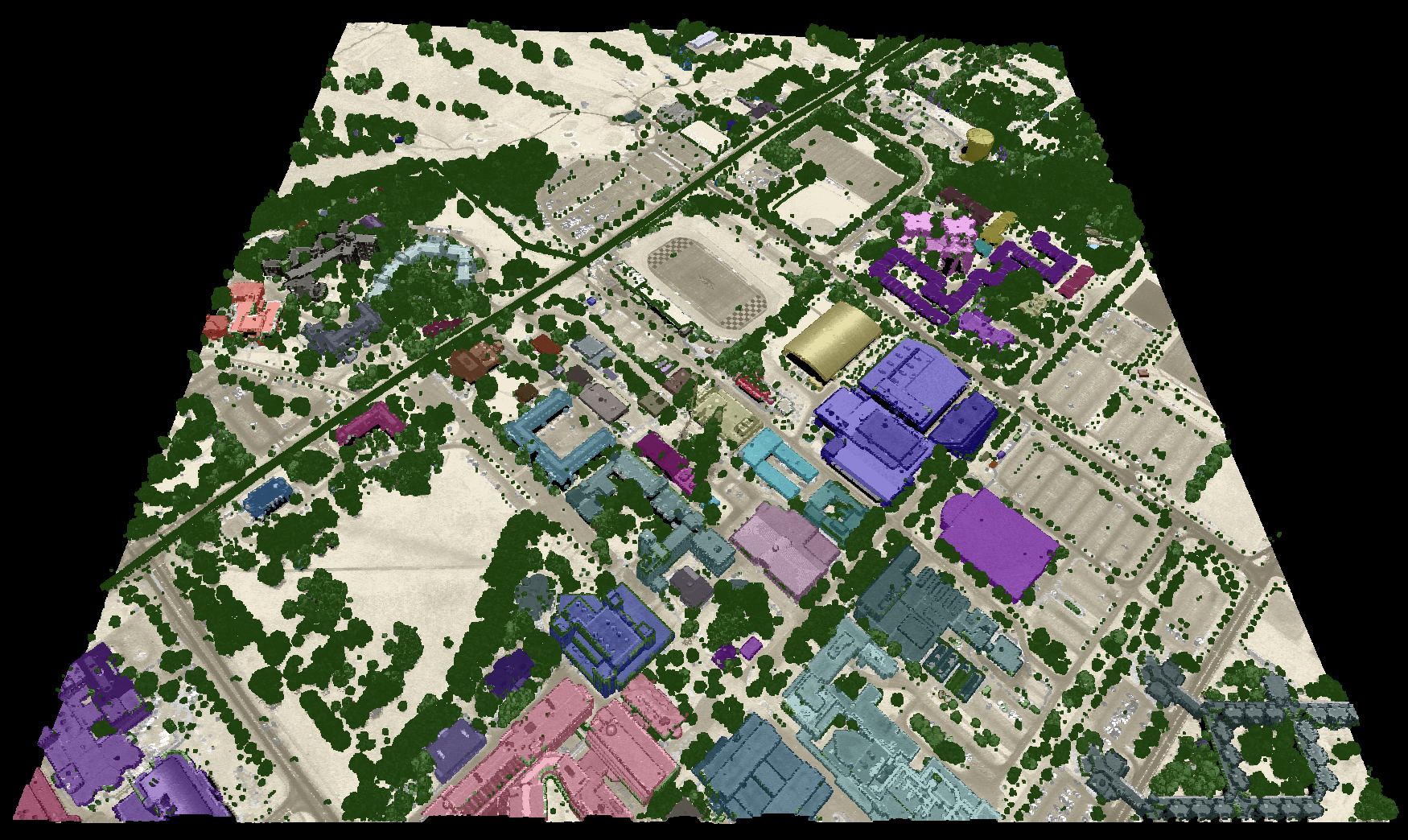

This tools sets the RGB colour values of an input LiDAR point cloud (input) based on the point classifications.

Rendering a point cloud in this way can aid with the determination of point classification accuracy, by allowing

you to determine if there are certain areas within a LiDAR tile, or certain classes, that are problematic during

the point classification process.

By default, the tool renders buildings in red (see table below). However, the tool also provides the option to

render each building in a unique colour (use_unique_clrs_for_buildings), providing a visually stunning

LiDAR-based map of built-up areas. When this option is selected, the user must also specify the radius

parameter, which determines the search distance used during the building segmentation operation. The radius

parameter is optional, and if unspecified (when the use_unique_clrs_for_buildings flag is used), a value of

2.0 will be used.

The specific colours used to render each point class can optionally be set by the user with the clr_str parameter.

The value of this parameter may list specific class values (0-18) and corresponding colour values in either a

red-green-blue (RGB) colour triplet form (i.e. (r, g, b)), or or a hex-colour, of either form #e6d6aa or

0xe6d6aa (note the # and 0x prefixes used to indicate hexadecimal numbers; also either lowercase or

capital letter values are acceptable). The following is an example of the a valid clr_str that sets the

ground (class 2) and high vegetation (class 5) colours used for rendering:

2: (184, 167, 108); 5: #9ab86c

Notice that 1) each class is separated by a semicolon (';'), 2) class values and colour values are separated by colons (':'), and 3) either RGB and hex-colour forms are valid.

If a clr_str parameter is not provided, the tool will use the default colours used for each class (see table below).

Class values are assumed to follow the class designations listed in the LAS specification:

| Classification Value | Meaning | Default Colour |

|---|---|---|

| 0 | Created never classified | |

| 1 | Unclassified | |

| 2 | Ground | |

| 3 | Low Vegetation | |

| 4 | Medium Vegetation | |

| 5 | High Vegetation | |

| 6 | Building | |

| 7 | Low Point (noise) | |

| 8 | Reserved | |

| 9 | Water | |

| 10 | Rail | |

| 11 | Road Surface | |

| 12 | Reserved | |

| 13 | Wire – Guard (Shield) | |

| 14 | Wire – Conductor (Phase) | |

| 15 | Transmission Tower | |

| 16 | Wire-structure Connector (e.g. Insulator) | |

| 17 | Bridge Deck | |

| 18 | High noise |

The point RGB colour values can be blended with the intensity data to create a particularly effective

visualization, further enhancing the visual interpretation of point return properties. The intensity_blending

parameter value, which must range from 0% (no intensity blending) to 100% (all intensity), is used to

set the degree of intensity/RGB blending.

Because the output file contains RGB colour data, it is possible that it will be larger than the input file. If the input file does contain valid RGB data, the output will be similarly sized, but the input colour data will be replaced in the output file with the point-return colours.

The output file can be visualized using any point cloud renderer capable of displaying point RGB information. We recommend the plas.io LiDAR renderer but many similar open-source options exist.

See Also

colourize_based_on_point_returns, lidar_colourize

Function Signature

def colourize_based_on_class(self, input_lidar: Optional[Lidar], intensity_blending_amount: float = 50.0, clr_str: str = "", use_unique_clrs_for_buildings: bool = False, search_radius: float = 2.0) -> Optional[Lidar]: ...

colourize_based_on_point_returns

License Information

Use of this function requires a license for Whitebox Workflows for Python Professional (WbW-Pro). Please visit www.whiteboxgeo.com to purchase a license.

Description

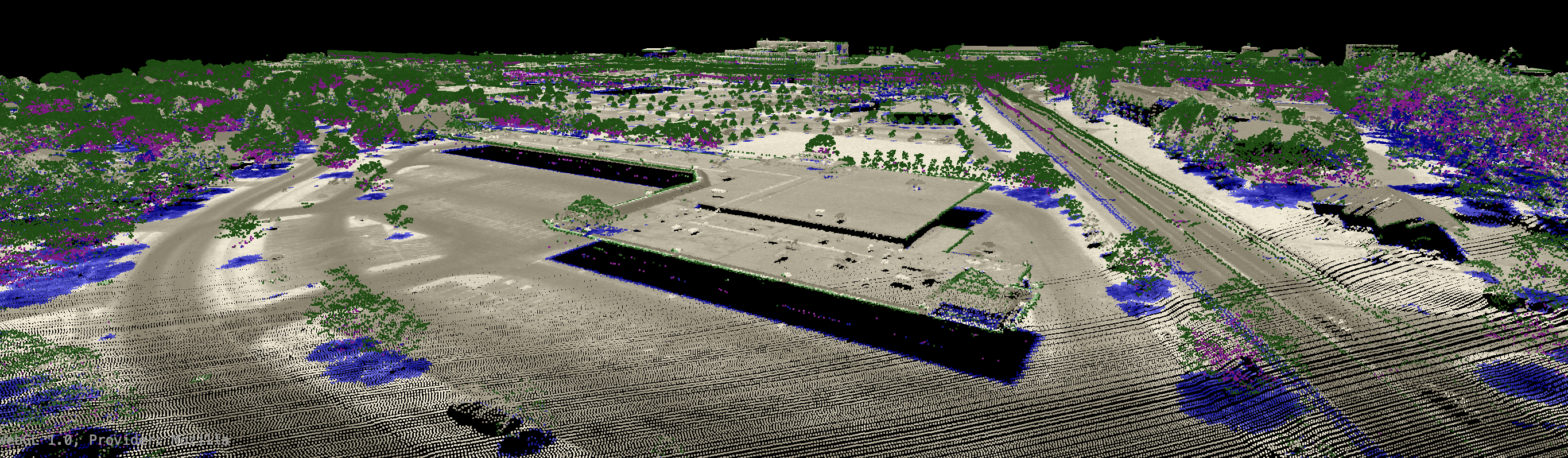

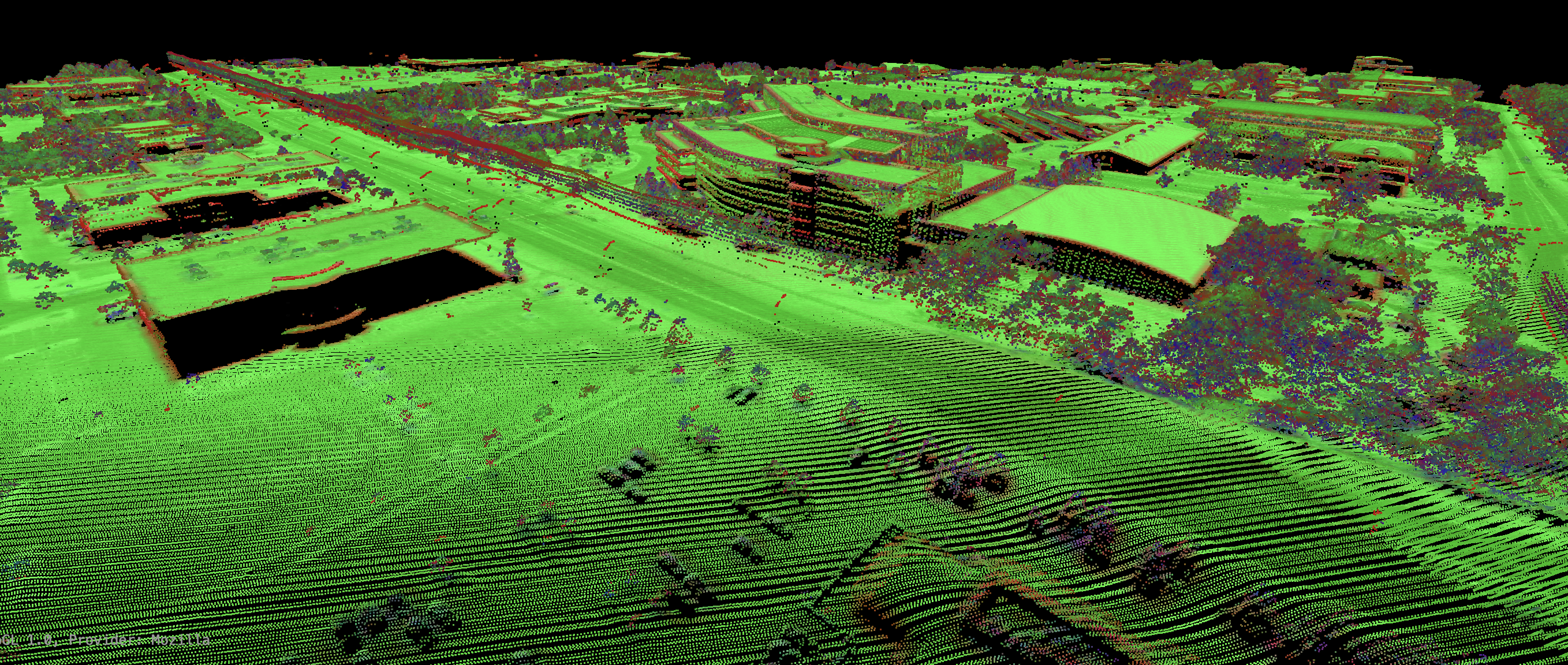

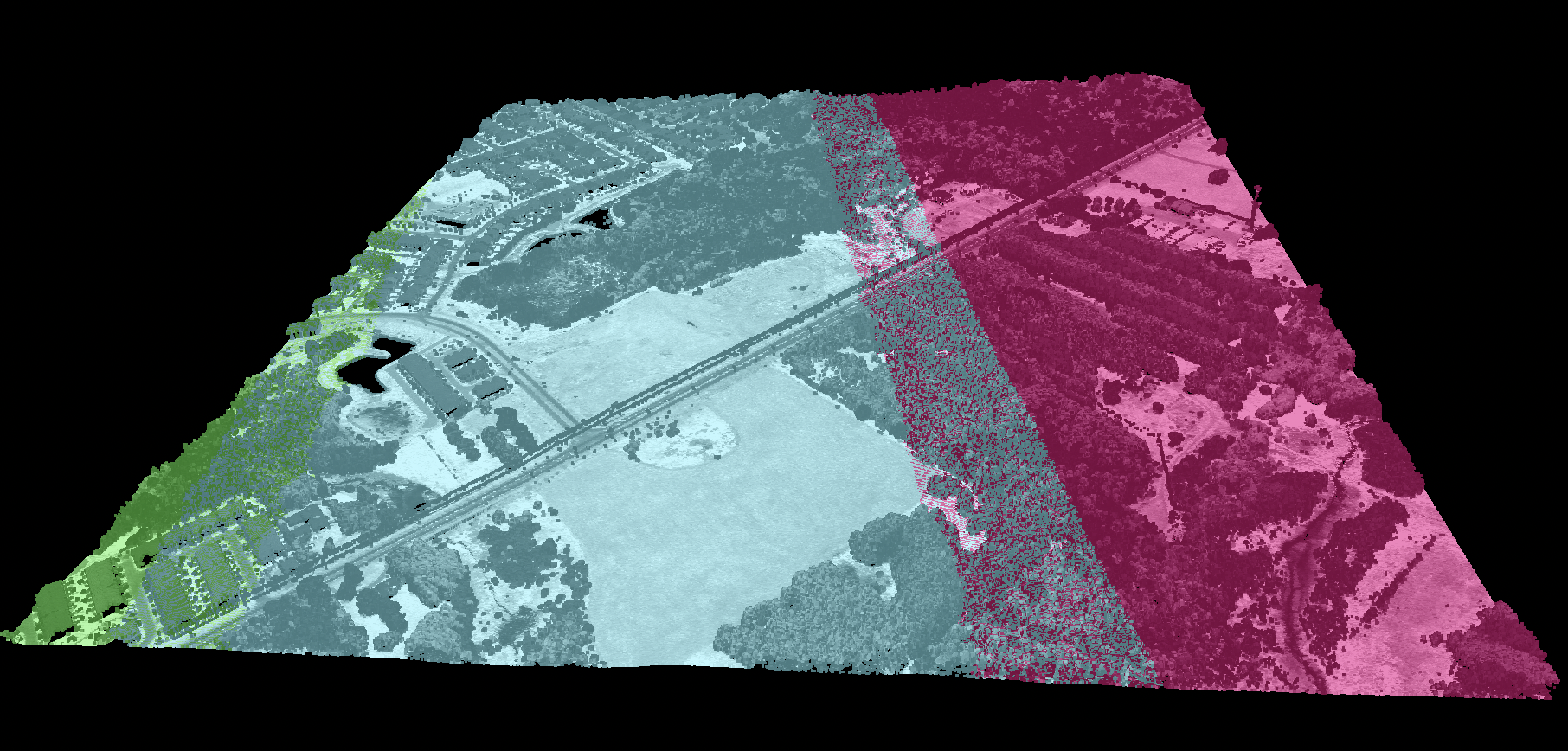

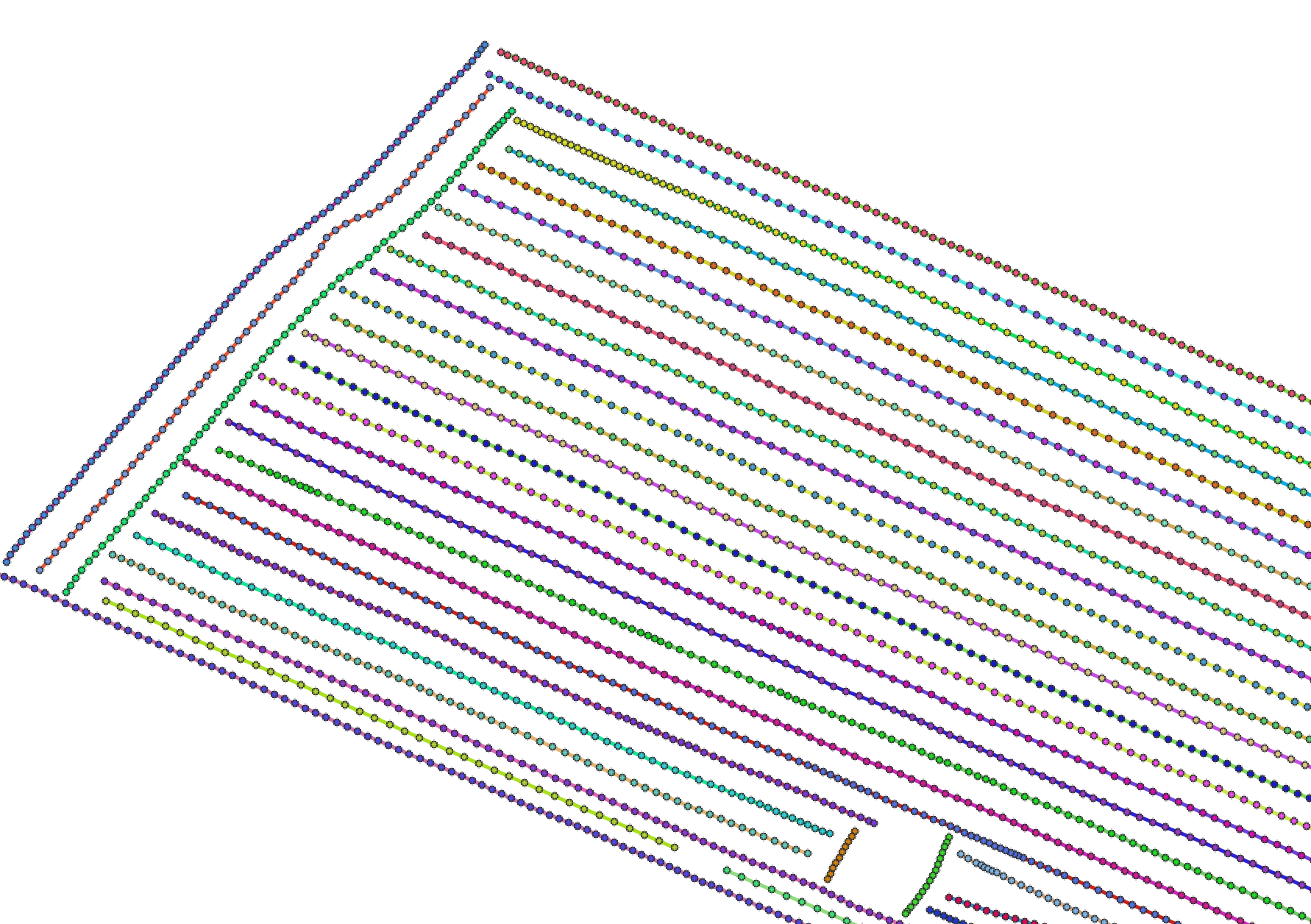

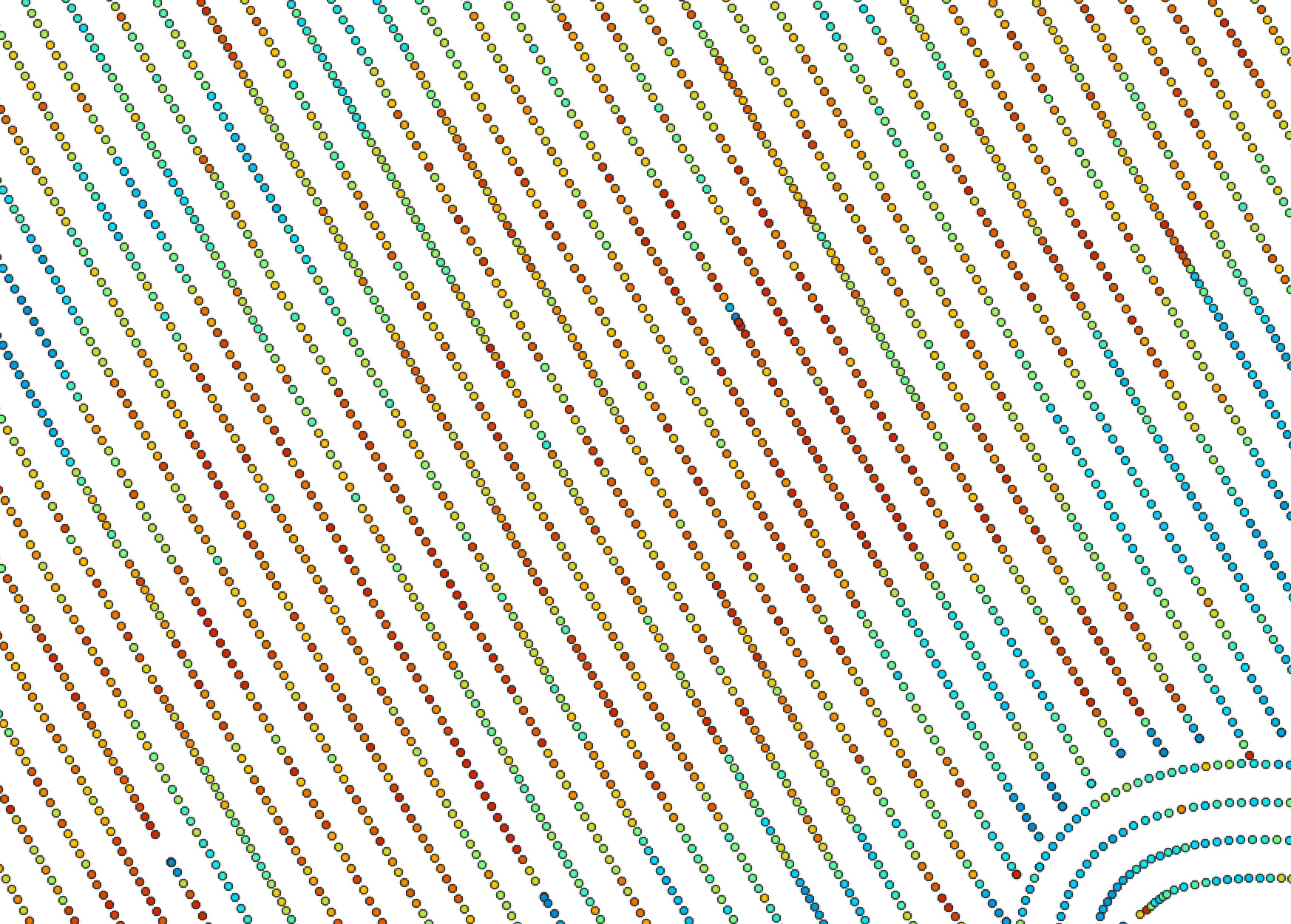

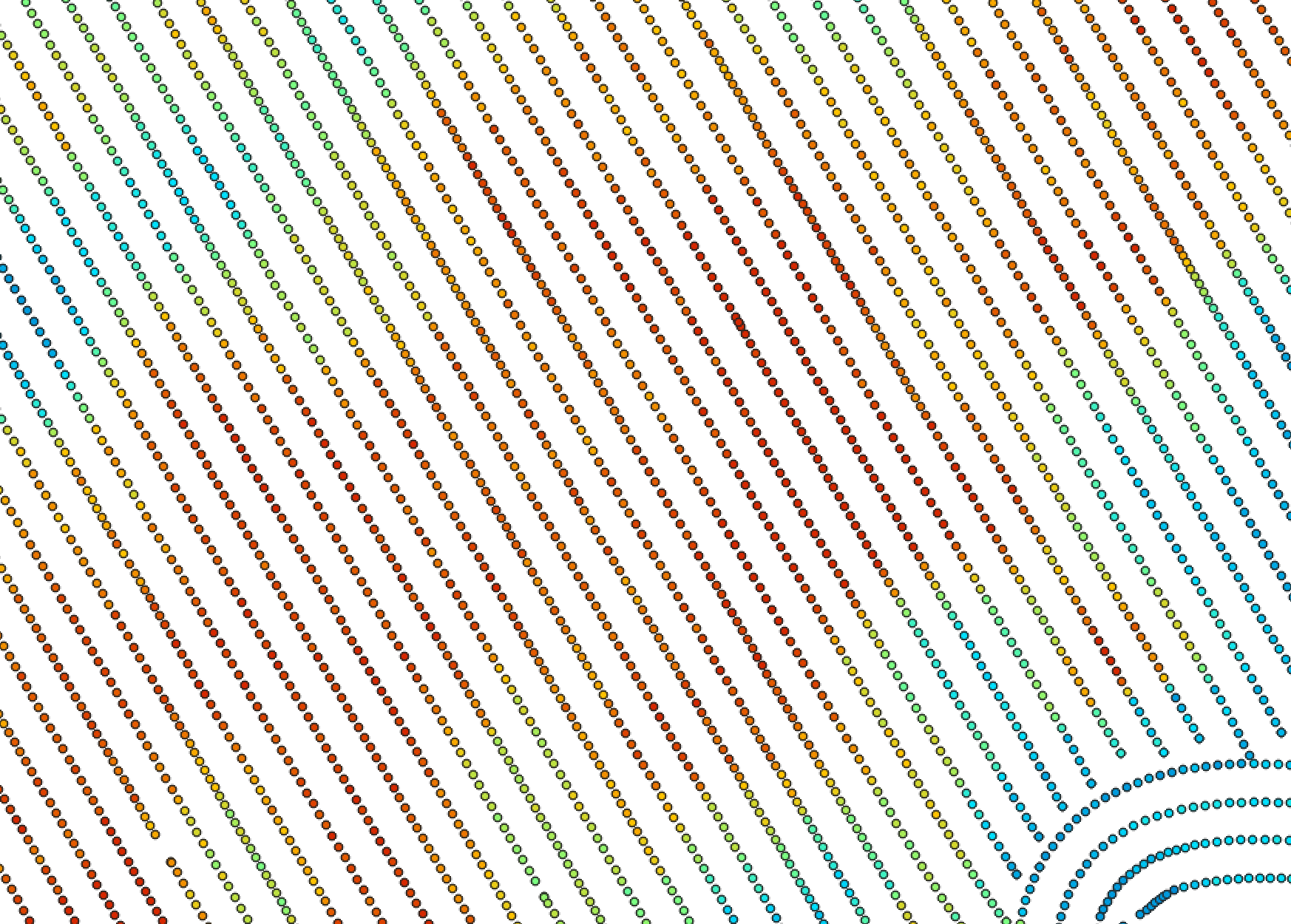

This tool sets the RGB colour values of a LiDAR point cloud (input) based on the point returns. It specifically

renders only-return, first-return, intermediate-return, and last-return points in different colours, storing

these data in the RGB colour data of the output LiDAR file (output). Colourizing the points in a LiDAR

point cloud based on return properties can aid with the visual inspection of point distributions, and therefore,

the quality assurance/quality control (QA/QC) of LiDAR data tiles. For example, this visualization process can

help to determine if there are areas of vegetation where there is insufficient coverage of ground points,

perhaps due to acquisition of the data during leaf-on conditions. There is often an assumption in LiDAR data

processing that the ground surface can be modelled using a subset of the only-return and last-return points

(beige and blue in the image below). However, under heavy forest cover, and in particular if the data were

collected during leaf-on conditions or if there is significant coverage of conifer trees, the only-return

and last-return points may be poor approximations of the ground surface. This tool can help to determine the

extent to which this is the case for a particular data set.

The specific colours used to render each return type can be set by the user with the only, first,

intermediate, and last parameters. Each parameter takes either a red-green-blue (RGB) colour triplet,

of the form (r,g,b), or a hex-colour, of either form #e6d6aa or 0xe6d6aa (note the # and 0x prefixes

used to indicate hexadecimal numbers; also either lowercase or capital letter values are acceptable).

The point RGB colour values can be blended with the intensity data to create a particularly effective

visualization, further enhancing the visual interpretation of point return properties. The intensity_blending

parameter value, which must range from 0% (no intensity blending) to 100% (all intensity), is used to

set the degree of intensity/RGB blending.

Because the output file contains RGB colour data, it is possible that it will be larger than the input file. If the input file does contain valid RGB data, the output will be similarly sized, but the input colour data will be replaced in the output file with the point-return colours.

The output file can be visualized using any point cloud renderer capable of displaying point RGB information. We recommend the plas.io LiDAR renderer but many similar open-source options exist.

This tool is a convenience function and can alternatively be achieved using the modify_lidar tool with the statement:

rgb=if(is_only, (230,214,170), if(is_last, (0,0,255), if(is_first, (0,255,0), (255,0,255))))

The colourize_based_on_point_returns tool is however significantly faster for this operation than the modify_lidar tool because the expression above must be executed dynamically for each point.

See Also

Function Signature

def colourize_based_on_point_returns(self, input_lidar: Optional[Lidar], intensity_blending_amount: float = 50.0, only_ret_colour: str = "(230,214,170)", first_ret_colour:str = "(0,140,0)", intermediate_ret_colour: str = "(255,0,255)", last_ret_colour: str = "(0,0,255)") -> Optional[Lidar]: ...

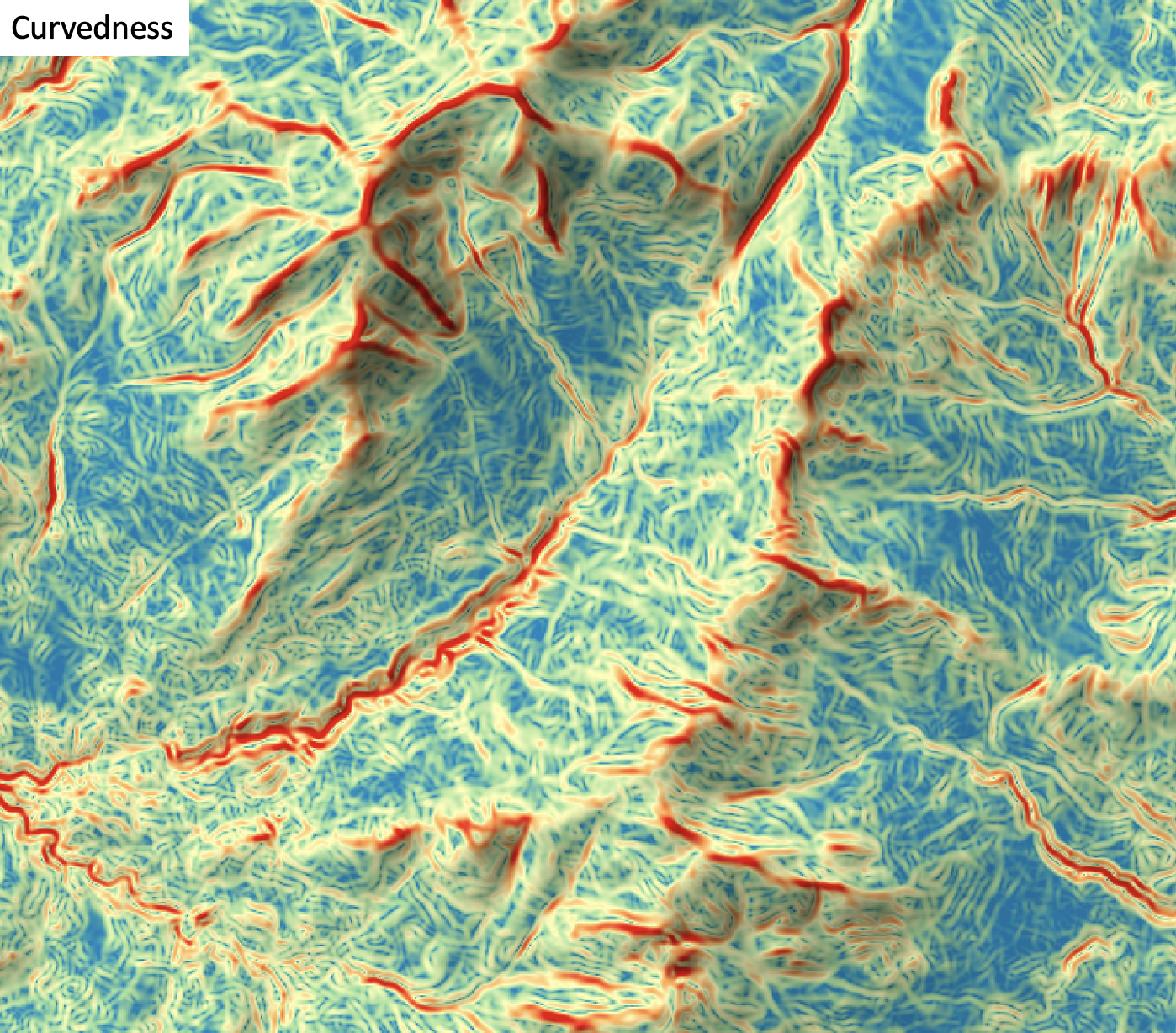

curvedness

License Information

Use of this function requires a license for Whitebox Workflows for Python Professional (WbW-Pro). Please visit www.whiteboxgeo.com to purchase a license.

Description

This tool calculates the curvedness (Koenderink and van Doorn, 1992) from a digital elevation model (DEM). Curvedness is the root mean square of maximal and minimal curvatures, and measures the magnitude of surface bending, regardless of shape (Florinsky, 2017). Curvedness is characteristically low-values for flat areas and higher for areas of sharp bending (Florinsky, 2017). The index is also inversely proportional with the size of the object (Koenderink and van Doorn, 1992). Curvedness has values equal to or greater than zero and is measured in units of m-1.

The user must specify the name of the input DEM (dem) and the output raster (output). The

The Z conversion factor (zfactor) is only important when the vertical and horizontal units are not the

same in the DEM. When this is the case, the algorithm will multiply each elevation in the DEM by the

Z Conversion Factor. Raw curvedness values are often challenging to visualize given their range and magnitude,

and as such the user may opt to log-transform the output raster (log). Transforming the values

applies the equation by Shary et al. (2002):

Θ' = sign(Θ) ln(1 + 10n|Θ|)

where Θ is the parameter value and n is dependent on the grid cell size.

For DEMs in projected coordinate systems, the tool uses the 3rd-order bivariate Taylor polynomial method described by Florinsky (2016). Based on a polynomial fit of the elevations within the 5x5 neighbourhood surrounding each cell, this method is considered more robust against outlier elevations (noise) than other methods. For DEMs in geographic coordinate systems (i.e. angular units), the tool uses the 3x3 polynomial fitting method for equal angle grids also described by Florinsky (2016).

References

Florinsky, I. (2016). Digital terrain analysis in soil science and geology. Academic Press.

Florinsky, I. V. (2017). An illustrated introduction to general geomorphometry. Progress in Physical Geography, 41(6), 723-752.

Koenderink, J. J., and Van Doorn, A. J. (1992). Surface shape and curvature scales. Image and vision computing, 10(8), 557-564.

Shary P. A., Sharaya L. S. and Mitusov A. V. (2002) Fundamental quantitative methods of land surface analysis. Geoderma 107: 1–32.

See Also

shape_index, minimal_curvature, maximal_curvature, tangential_curvature, profile_curvature, mean_curvature, gaussian_curvature

Function Signature

def curvedness(self, dem: Raster, log_transform: bool = False, z_factor: float = 1.0) -> Raster: ...

dbscan

License Information

Use of this function requires a license for Whitebox Workflows for Python Professional (WbW-Pro). Please visit www.whiteboxgeo.com to purchase a license.

Description

This tool performs an unsupervised DBSCAN clustering operation, based

on a series of input rasters (inputs). Each grid cell defines a stack of feature values (one value for

each input raster), which serves as a point within the multi-dimensional feature space. The DBSCAN

algorithm identifies clusters in feature space by identifying regions of high density (core points)

and the set of points connected to these high-density areas. Points in feature space that are not

connected to high-density regions are labeled by the DBSCAN algorithm as 'noise' and the associated

grid cell in the output raster (output) is assigned the nodata value. Areas of high density (i.e. core

points) are defined as those points for which the number of neighbouring points within a search distance

(search_dist) is greater than some user-defined minimum threshold (min_points).

The main advantages of the DBSCAN algorithm over other clustering methods, such as k-means (k_means_clustering), is that 1) you do not need to specify the number of clusters a priori, and 2) that the method does not make assumptions about the shape of the cluster (spherical in the k-means method). However, DBSCAN does assume that the density of every cluster in the data is approximately equal, which may not be a valid assumption. DBSCAN may also produce unsatisfactory results if there is significant overlap among clusters, as it will aggregate the clusters. Finding search distance and minimum core-point density thresholds that apply globally to the entire data set may be very challenging or impossible for certain applications.

The DBSCAN algorithm is based on the calculation of distances in multi-dimensional space. Feature scaling is

essential to the application of DBSCAN clustering, especially when the ranges of the features are different, for

example, if they are measured in different units. Without scaling, features with larger ranges will have

greater influence in computing the distances between points. The tool offers three options for feature-scaling (scaling),

including 'None', 'Normalize', and 'Standardize'. Normalization simply rescales each of the features onto

a 0-1 range. This is a good option for most applications, but it is highly sensitive to outliers because

it is determined by the range of the minimum and maximum values. Standardization

rescales predictors using their means and standard deviations, transforming the data into z-scores. This

is a better option than normalization when you know that the data contain outlier values; however, it does

does assume that the feature data are somewhat normally distributed, or are at least symmetrical in

distribution.

One should keep the impact of feature scaling in mind when setting the search_dist parameter. For

example, if applying normalization, the entire range of values for each dimension of feature space will

be bound within the 0-1 range, meaning that the search distance should be smaller than 1.0, and likely

significantly smaller. If standardization is used instead, features space is technically infinite,

although the vast majority of the data are likely to be contained within the range -2.5 to 2.5.

Because the DBSCAN algorithm calculates distances in feature-space, like many other related algorithms, it suffers from the curse of dimensionality. Distances become less meaningful in high-dimensional space because the vastness of these spaces means that distances between points are less significant (more similar). As such, if the predictor list includes insignificant or highly correlated variables, it is advisable to exclude these features during the model-building phase, or to use a dimension reduction technique such as principal_component_analysis to transform the features into a smaller set of uncorrelated predictors.

Memory Usage

The peak memory usage of this tool is approximately 8 bytes per grid cell × # predictors.

See Also

k_means_clustering, modified_k_means_clustering, principal_component_analysis

Function Signature

def dbscan(self, input_rasters: List[Raster], scaling_method: str = "none", search_distance: float = 1.0, min_points: int = 5) -> Raster: ...

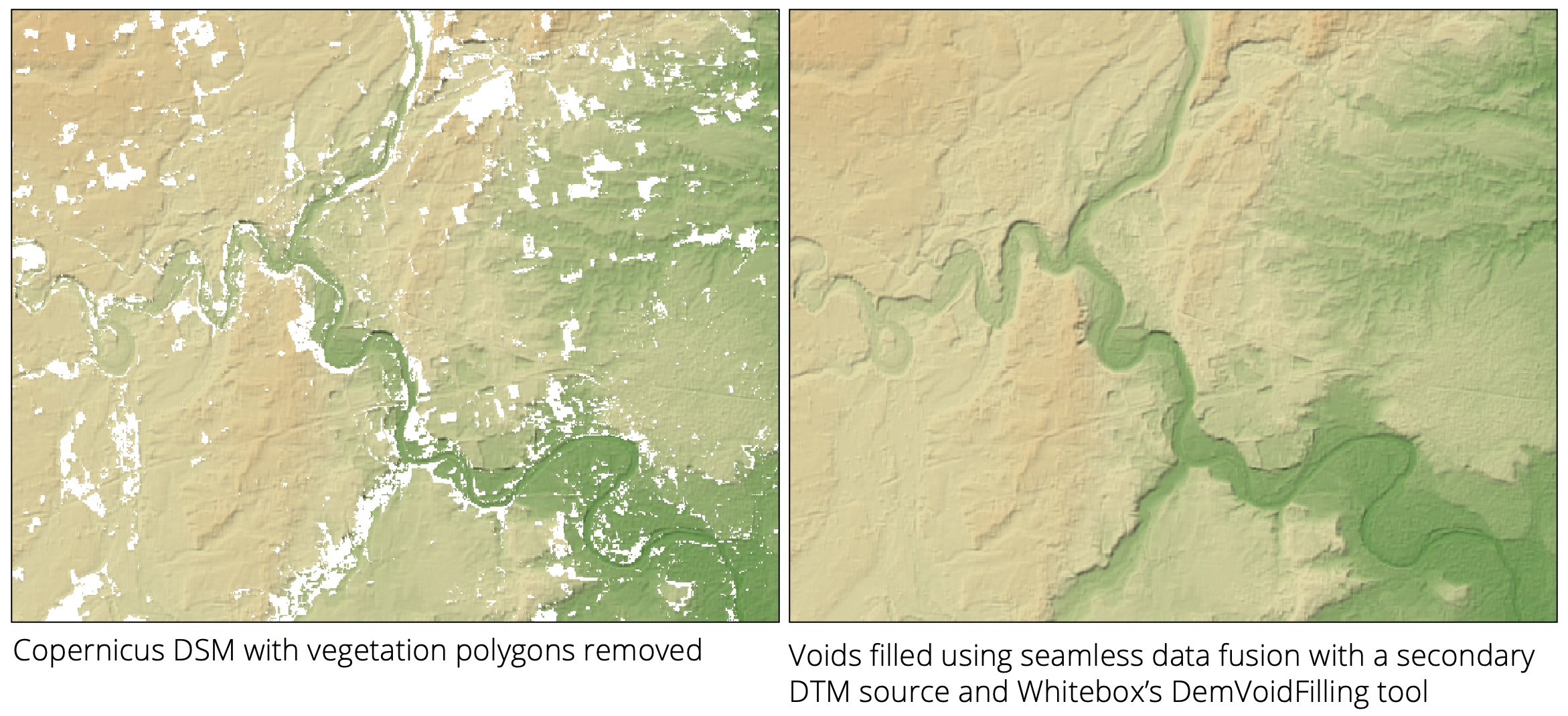

dem_void_filling

License Information

Use of this function requires a license for Whitebox Workflows for Python Professional (WbW-Pro). Please visit www.whiteboxgeo.com to purchase a license.

Description

This tool implements a modified version of the Delta Surface Fill method of Grohman et al. (2006). It can

fill voids (i.e., data holes) contained within a digital elevation model (dem) by fusing the data with a

second DEM (fill) that defines the topographic surface within the void areas. The two surfaces are fused

seamlessly so that the transition from the source and fill surfaces is undetectable. The fill surface need

not have the same resolution as the source DEM.

The algorithm works by computing a DEM-of-difference (DoD) for each valid grid cell in the source DEM that also has a valid elevation in the corresponding location within the fill DEM. This difference surface is then used to define offsets within the near void-edge locations. The fill surface elevations are then combined with interpolated offsets, with the interpolation based on near-edge offsets, and used to define a new surface within the void areas of the source DEM in such a way that the data transitions seamlessly from the source data source to the fill data. The image below provides an example of this method.

The user must specify the mean_plane_dist parameter, which defines the distance (measured in grid cells)

within a void area from the void's edge. Grid cells within larger voids that are beyond this distance

from their edges have their vertical offsets, needed during the fusion of the DEMs, set to the mean offset

for all grid cells that have both valid source and fill elevations. Void cells that are nearer their void

edges have vertical offsets that are interpolated based on nearby offset values (i.e., the DEM of difference).

The interpolation uses inverse-distance weighted (IDW) scheme, with a user-specified weight parameter (weight_value).

The edge_treatment parameter describes how the data fusion operates at the edges of voids, i.e., the first line

of grid cells for which there are both source and fill elevation values. This parameter has values of "use DEM",

"use Fill", and "average". Grohman et al. (2006) state that sometimes, due to a weakened signal within these

marginal locations between the area of valid data and voids, the estimated elevation values are inaccurate. When

this is the case, it is best to use fill elevations in the transitional areas. If this isn't the case, the

"use DEM" is the better option. A compromise between the two options is to average the two elevation sources.

References

Grohman, G., Kroenung, G. and Strebeck, J., 2006. Filling SRTM voids: The delta surface fill method. Photogrammetric Engineering and Remote Sensing, 72(3), pp.213-216.

Function Signature

def dem_void_filling(self, dem: Raster, fill: Raster, mean_plane_dist: int = 20, edge_treatment: str = "dem", weight_value: float = 2.0) -> Raster: ...

depth_to_water

License Information

Use of this function requires a license for Whitebox Workflows for Python Professional (WbW-Pro). Please visit www.whiteboxgeo.com to purchase a license.

Description

This tool calculates the cartographic depth-to-water (DTW) index described by Murphy et al. (2009). The DTW index has been shown to be related to soil moisture, and is useful for identifying low-lying positions that are likely to experience surface saturated conditions. In this regard, it is similar to each of wetness_index, elevation_above_stream (HAND), and probability-of-depressions (i.e. stochastic_depression_analysis).

The index is the cumulative slope gradient along the least-slope path connecting each grid cell in an input DEM (dem) to

a surface water cell. Tangent slope (i.e. rise / run) is calculated for each grid cell based on the neighbouring elevation

values in the input DEM. The algorithm

operates much like a cost-accumulation analysis (cost_distance), where the cost of moving through a cell is determined

by the cell's tangent slope value and the distance travelled. Therefore, lower DTW values are associated with wetter soils and

higher values indicate drier conditions, over longer time periods. Areas of surface water have DTW values of zero. The user

must input surface water features, including vector stream lines (streams) and/or vector waterbody polygons

(lakes, i.e. lakes, ponds, wetlands, etc.). At least one of these two optional water feature inputs must be specified. The

tool internally rasterizes these vector features, setting the DTW value in the output raster to zero. DTW tends

to increase with greater distances from surface water features, and increases more slowly in flatter topography and more

rapidly in steeper settings. Murphy et al. (2009) state that DTW is a probablistic model that assumes uniform soil properties,

climate, and vegetation.

Note that DTW values are highly dependent upon the accuracy and extent of the input streams/lakes layer(s).

References

Murphy, PNC, Gilvie, JO, and Arp, PA (2009) Topographic modelling of soil moisture conditiTons: a comparison and verification of two models. European Journal of Soil Science, 60, 94–109, DOI: 10.1111/j.1365-2389.2008.01094.x.

See Also

wetness_index, elevation_above_stream, stochastic_depression_analysis

Function Signature

def depth_to_water(self, dem: Raster, streams: Optional[Vector] = None, lakes: Optional[Vector] = None) -> Raster: ...

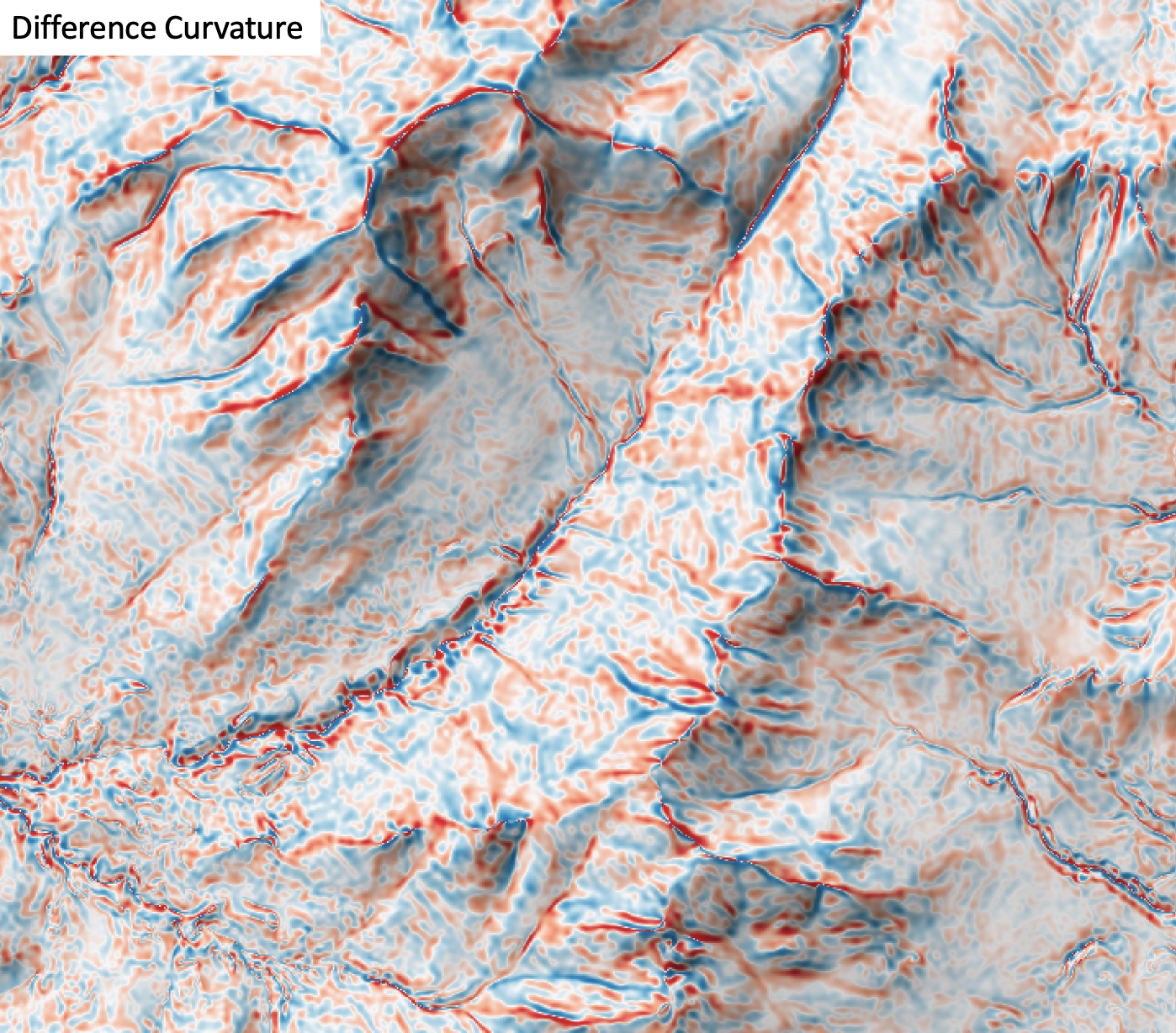

difference_curvature

License Information

Use of this function requires a license for Whitebox Workflows for Python Professional (WbW-Pro). Please visit www.whiteboxgeo.com to purchase a license.

Description

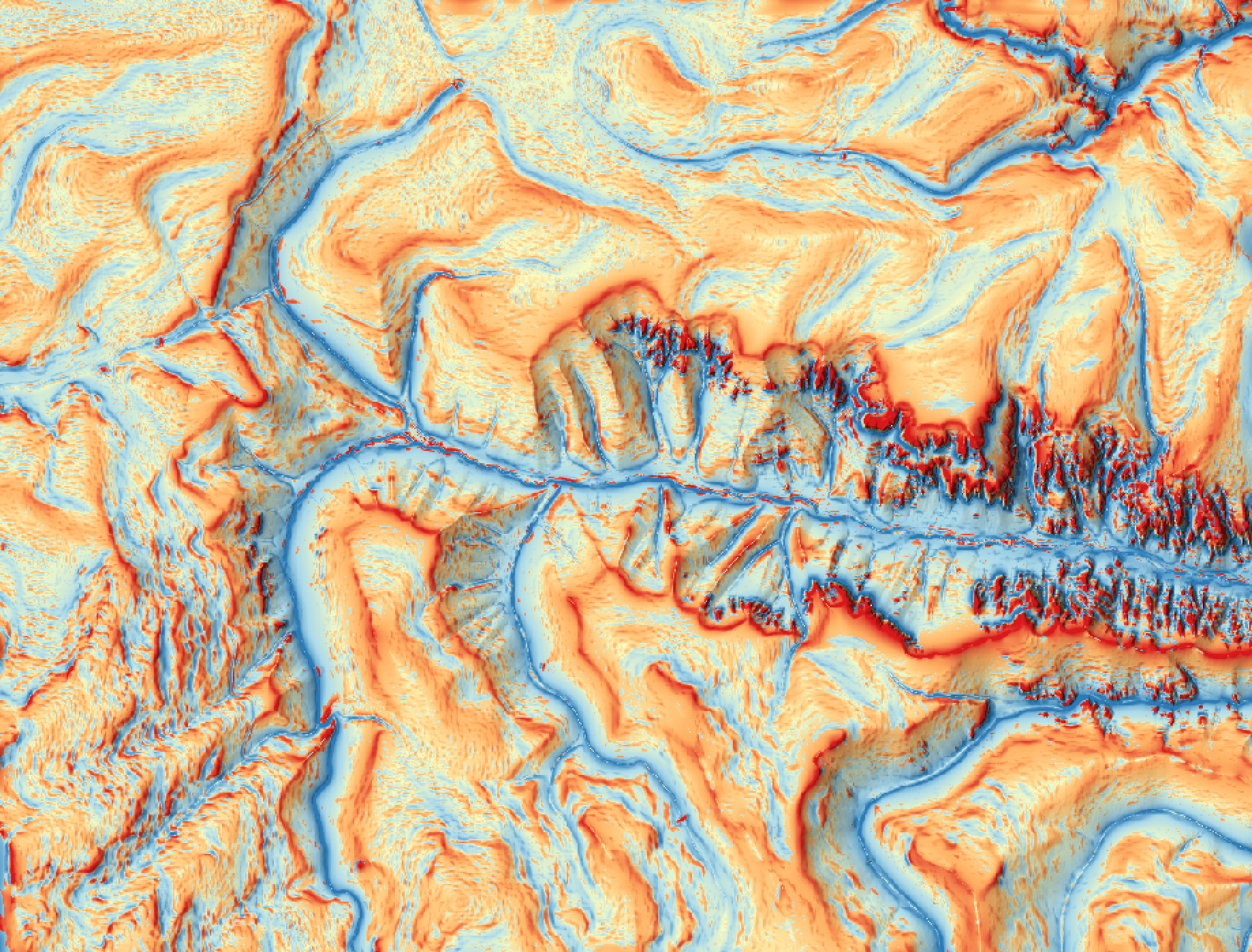

This tool calculates the difference curvature from a digital elevation model (DEM). Difference curvature is half of the difference between profile and tangential curvatures, sometimes called the vertical and horizontal curvatures (Shary, 1995). This variable has an unbounded range that can take either positive or negative values. Florinsky (2017) states that difference curvature measures the extent to which the relative deceleration of flows (measured by kv) is higher than flow convergence at a given point of the topographic surface. Difference curvature is measured in units of m-1.

The user must specify the name of the input DEM (dem) and the output raster (output). The

The Z conversion factor (zfactor) is only important when the vertical and horizontal units are not the

same in the DEM. When this is the case, the algorithm will multiply each elevation in the DEM by the

Z Conversion Factor. Curvature values are often very small and as such the user may opt to log-transform

the output raster (log). Transforming the values applies the equation by Shary et al. (2002):

Θ' = sign(Θ) ln(1 + 10n|Θ|)

where Θ is the parameter value and n is dependent on the grid cell size.

For DEMs in projected coordinate systems, the tool uses the 3rd-order bivariate Taylor polynomial method described by Florinsky (2016). Based on a polynomial fit of the elevations within the 5x5 neighbourhood surrounding each cell, this method is considered more robust against outlier elevations (noise) than other methods. For DEMs in geographic coordinate systems (i.e. angular units), the tool uses the 3x3 polynomial fitting method for equal angle grids also described by Florinsky (2016).

References

Florinsky, I. (2016). Digital terrain analysis in soil science and geology. Academic Press.

Florinsky, I. V. (2017). An illustrated introduction to general geomorphometry. Progress in Physical Geography, 41(6), 723-752.

Shary PA (1995) Land surface in gravity points classification by a complete system of curvatures. Mathematical Geology 27: 373–390.

Shary P. A., Sharaya L. S. and Mitusov A. V. (2002) Fundamental quantitative methods of land surface analysis. Geoderma 107: 1–32.

See Also

profile_curvature, tangential_curvature, rotor, minimal_curvature, maximal_curvature, mean_curvature, gaussian_curvature

Function Signature

def difference_curvature(self, dem: Raster, log_transform: bool = False, z_factor: float = 1.0) -> Raster: ...

evaluate_training_sites

License Information

Use of this function requires a license for Whitebox Workflows for Python Professional (WbW-Pro). Please visit www.whiteboxgeo.com to purchase a license.

Description

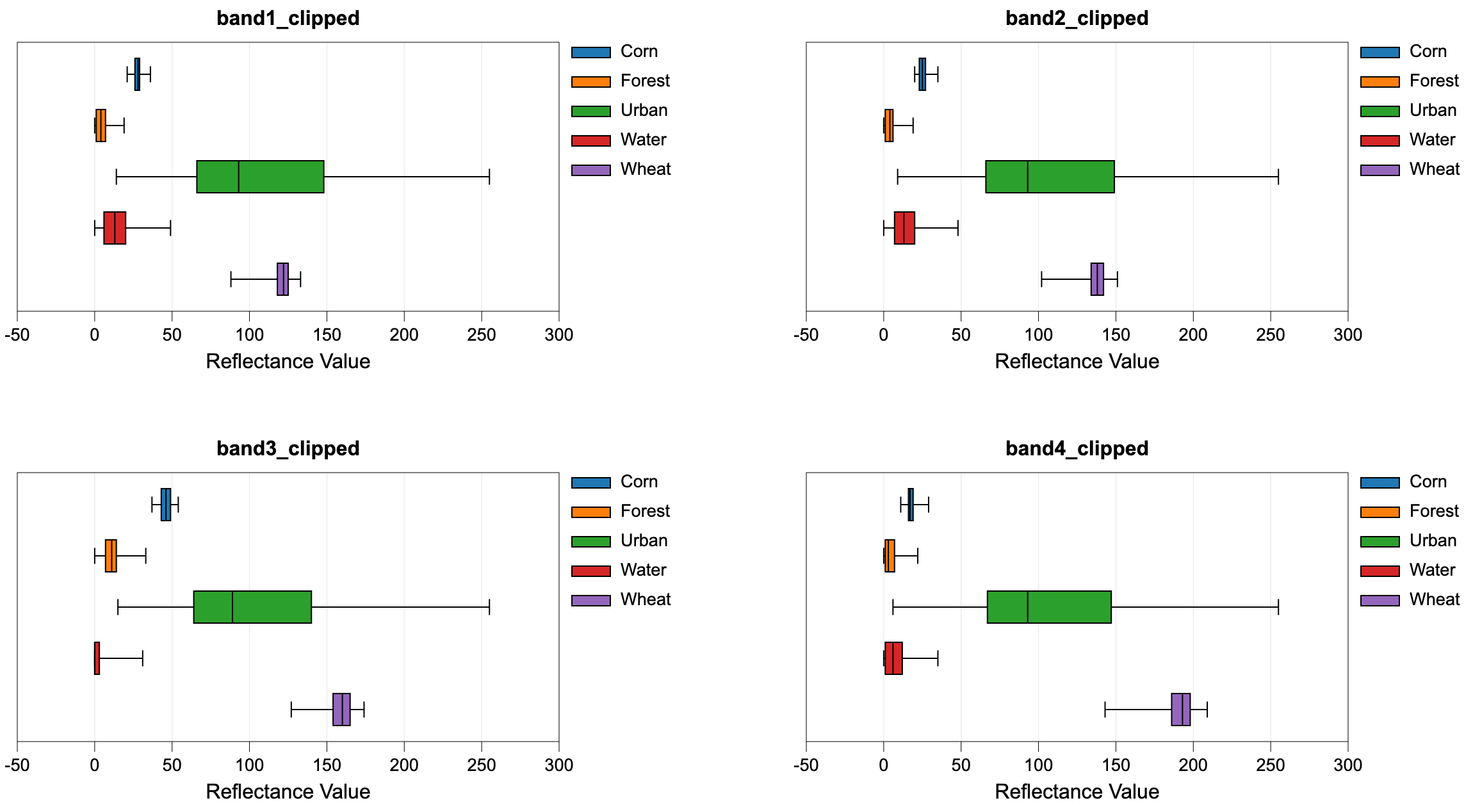

This tool performs an evaluation of the reflectance properties of multi-spectral image dataset for a

group of digitized class polygons. This is often viewed as the first step in a supervised classification

procedure, such as those performed using the min_dist_classification or parallelepiped_classification

tools. The analysis is based on a series of one or more input images (inputs) and an input polygon

vector file (polys). The user must also specify the attribute name (field), within the attribute

table, containing the class ID associated with each feature in input the polygon vector. A single class

may be designated by multiple polygon features in the test site polygon vector. Note that the

input polygon file is generally created by digitizing training areas of exemplar reflectance properties for each

class type. The input polygon vector should be in the same coordinate system as the input multi-spectral images.

The input images must represent a multi-spectral data set made up of individual bands.

Do not input colour composite images. Lastly, the user must specify the name of the output HTML file.

This file will contain a series of box-and-whisker plots, one

for each band in the multi-spectral data set, that visualize the distribution of each class in the

associated bands. This can be helpful in determining the overlap between spectral properties for the

classes, which may be useful if further class or test site refinement is necessary. For a subsequent

supervised classification to be successful, each class should not overlap significantly with the other

classes in at least one of the input bands. If this is not the case, the user may need to refine

the class system.

See Also

min_dist_classification, parallelepiped_classification

Function Signature

def evaluate_training_sites(self, input_rasters: List[Raster], training_polygons: Vector, class_field_name: str, output_html_file: str) -> None: ...

filter_lidar

License Information

Use of this function requires a license for Whitebox Workflows for Python Professional (WbW-Pro). Please visit www.whiteboxgeo.com to purchase a license.

Description

The FilterLidar tool is a very powerful tool for filtering points within a LiDAR point cloud based on point

properties. Complex filter statements (statement) can be used to include or exclude points in the

output file (output).

Note that if the user does not specify the optional input LiDAR file (input), the tool will search for all

valid LiDAR (*.las, *.laz, *.zlidar) files contained within the current working directory. This feature can be useful

for processing a large number of LiDAR files in batch mode. When this batch mode is applied, the output file

names will be the same as the input file names but with a '_filtered' suffix added to the end.

Points are either included or excluded from the output file by creating conditional filter statements. Statements must be valid Rust syntax and evaluate to a Boolean. Any of the following variables are acceptable within the filter statement:

| Variable Name | Description |

|---|---|

| x | The point x coordinate |

| y | The point y coordinate |

| z | The point z coordinate |

| intensity | The point intensity value |

| ret | The point return number |

| nret | The point number of returns |

| is_only | True if the point is an only return (i.e. ret == nret == 1), otherwise false |

| is_multiple | True if the point is a multiple return (i.e. nret > 1), otherwise false |

| is_early | True if the point is an early return (i.e. ret == 1), otherwise false |

| is_intermediate | True if the point is an intermediate return (i.e. ret > 1 && ret < nret), otherwise false |

| is_late | True if the point is a late return (i.e. ret == nret), otherwise false |

| is_first | True if the point is a first return (i.e. ret == 1 && nret > 1), otherwise false |

| is_last | True if the point is a last return (i.e. ret == nret && nret > 1), otherwise false |

| class | The class value in numeric form, e.g. 0 = Never classified, 1 = Unclassified, 2 = Ground, etc. |

| is_noise | True if the point is classified noise (i.e. class == 7 |

| is_synthetic | True if the point is synthetic, otherwise false |

| is_keypoint | True if the point is a keypoint, otherwise false |

| is_withheld | True if the point is withheld, otherwise false |

| is_overlap | True if the point is an overlap point, otherwise false |

| scan_angle | The point scan angle |

| scan_direction | True if the scanner is moving from the left towards the right, otherwise false |

| is_flightline_edge | True if the point is situated along the filightline edge, otherwise false |

| user_data | The point user data |

| point_source_id | The point source ID |

| scanner_channel | The point scanner channel |

| time | The point GPS time, if it exists, otherwise 0 |

| red | The point red value, if it exists, otherwise 0 |

| green | The point green value, if it exists, otherwise 0 |

| blue | The point blue value, if it exists, otherwise 0 |

| nir | The point near infrared value, if it exists, otherwise 0 |

| pt_num | The point number within the input file |

| n_pts | The number of points within the file |

| min_x | The file minimum x value |

| mid_x | The file mid-point x value |

| max_x | The file maximum x value |

| min_y | The file minimum y value |

| mid_y | The file mid-point y value |

| max_y | The file maximum y value |

| min_z | The file minimum z value |

| mid_z | The file mid-point z value |

| max_z | The file maximum z value |

| dist_to_pt | The distance from the point to a specified xy or xyz point, e.g. dist_to_pt(562500, 4819500) or dist_to_pt(562500, 4819500, 320) |

| dist_to_line | The distance from the point to the line passing through two xy points, e.g. dist_to_line(562600, 4819500, 562750, 4819750) |

| dist_to_line_seg | The distance from the point to the line segment defined by two xy end-points, e.g. dist_to_line_seg(562600, 4819500, 562750, 4819750) |

| within_rect | 1 if the point falls within the bounds of a 2D or 3D rectangle, otherwise 0. Bounds are defined as within_rect(ULX, ULY, LRX, LRY) or within_rect(ULX, ULY, ULZ, LRX, LRY, LRZ) |

In addition to the point properties defined above, if the user applies the lidar_eigenvalue_features

tool on the input LiDAR file, the filter_lidar tool will automatically read in the additional *.eigen

file, which include the eigenvalue-based point neighbourhood measures, such as lambda1, lambda2, lambda3,

linearity, planarity, sphericity, omnivariance, eigentropy, slope, and residual. See the

lidar_eigenvalue_features documentation for details on each of these metrics describing the structure

and distribution of points within the neighbourhood surrounding each point in the LiDAR file.

Statements can be as simple or complex as desired. For example, to filter out all points that are classified noise (i.e. class numbers 7 or 18):

!is_noise

The following is a statement to retain only the late returns from the input file (i.e. both last and single returns):

ret == nret

Notice that equality uses the == symbol an inequality uses the != symbol. As an equivalent to the above

statement, we could have used the is_late point property:

is_late

If we want to remove all points outside of a range of xy values:

x >= 562000 && x <= 562500 && y >= 4819000 && y <= 4819500

Notice how we can combine multiple constraints using the && (logical AND) and || (logical OR) operators.

As an alternative to the above statement, we could have used the within_rect function:

within_rect(562000, 4819500, 562500, 4819000)

If we want instead to exclude all of the points within this defined region, rather than to retain them, we

simply use the ! (logial NOT):

!(x >= 562000 && x <= 562500 && y >= 4819000 && y <= 4819500)

or, simply:

!within_rect(562000, 4819500, 562500, 4819000)

If we need to find all of the ground points within 150 m of (562000, 4819500), we could use:

class == 2 && dist_to_pt(562000, 4819500) <= 150.0

The following statement outputs all non-vegetation classed points in the upper-right quadrant:

!(class == 3 && class != 4 && class != 5) && x < min_x + (max_x - min_x) / 2.0 && y > max_y - (max_y - min_y) / 2.0

As demonstrated above, the filter_lidar tool provides an extremely flexible, powerful, and easy means for retaining and removing points from point clouds based on any of the common LiDAR point attributes.

See Also

filter_lidar_classes, filter_lidar_scan_angles, modify_lidar, erase_polygon_from_lidar, clip_lidar_to_polygon, sort_lidar, lidar_eigenvalue_features

Function Signature

def filter_lidar(self, statement: str, input_lidar: Optional[Lidar]) -> Optional[Lidar]: ...

filter_lidar_by_percentile

License Information

Use of this function requires a license for Whitebox Workflows for Python Professional (WbW-Pro). Please visit www.whiteboxgeo.com to purchase a license.

Description

This tool can be used to extract a subset of points from an input LiDAR point cloud (input_lidar) that correspond

to a user-specified percentile of the points within the local neighbourhood. The algorithm works by overlaying a

grid of a specified size (block_size). The group of LiDAR points contained within each block in the superimposed

grid are identified and are sorted by elevation. The point with the elevation that corresponds most closely to the

specified percentile is then inserted into the output LiDAR point cloud. For example, if percentile = 0.0, the

lowest point within each block will be output, if percentile = 100.0 the highest point will be output, and if

percentile = 50.0 the point that is nearest the median elevation will be output. Notice that the lower the number

of points contained within a block, the more approximate the calculation will be. For example, if a block only contains

three points, no single point occupies the 25th percentile. The equation that is used to identify the closest

corresponding point (zero-based) from a list of n sorted by elevation values is:

point_num = ⌊percentile / 100.0 * (n - 1)⌉

Increasing the block size (default is 1.0 xy-units) will increase the average number of points within blocks, allowing for a more accurate percentile calculation.

Like many of the LiDAR functions, the input LiDAR point cloud (input_lidar) is optional. If an input LiDAR file

is not specified, the tool will search for all valid LiDAR (*.las, *.laz, *.zlidar) files contained within the current

working directory. This feature can be very useful when you need to process a large number of LiDAR files contained

within a directory. This batch processing mode enables the function to run in a more optimized parallel manner.

When run in this batch mode, no output LiDAR object will be created. Instead the function will create

an output file (saved to disc) with the same name as each input LiDAR file, but with the .tif extension. This can provide a very

efficient means for processing extremely large LiDAR data sets.

See Also

filter_lidar, lidar_block_minimum, lidar_block_maximum

Function Signature

def filter_lidar_by_percentile(self, input_lidar: Optional[Lidar], percentile: float = 0.0, block_size: float = 1.0) -> Optional[Lidar]: ...

filter_lidar_by_reference_surface

License Information

Use of this function requires a license for Whitebox Workflows for Python Professional (WbW-Pro). Please visit www.whiteboxgeo.com to purchase a license.

Description

This tool can be used to extract a subset of points from an input LiDAR point cloud (input_lidar) that satisfy a

query relation with a user-specified raster reference surface (ref_surface). For example, you may use this

function to extract all of the points that are below (query="<" or query="<=") or above (query=">" or query=">=")

a surface model. The default query mode is "within" (i.e. query="within"), which extracts all of the points that

are within a specified absolute vertical distance (threshold) of the surface. Notice that the threshold parameter

is ignored for query types other than "within".

By default, the function will return a point cloud containing only the subset of points in the input dataset that satisfy

the condition of the query. Setting the classify parameter to True modifies this behaviour such that the output

point cloud will contain all of the points within the input dataset, but will have the classification value of the

query-satifying points will be set to the true_class_value parameter (0-255) and points that do not satisfy the query

will be assigned the false_class_value (0-255). By setting the preserve_classes paramter to True, all points that do not

satisfy the query will have unmodified class values from the input dataset.

Unlike many of the LiDAR functions, this function does not have a batch mode and operates on single tiles only.

See Also

Function Signature

def filter_lidar_by_reference_surface(self, input_lidar: Lidar, ref_surface: Raster, query: str = "within", threshold: float = 0.0) -> Lidar: ...

fix_dangling_arcs

License Information

Use of this function requires a license for Whitebox Workflows for Python Professional (WbW-Pro). Please visit www.whiteboxgeo.com to purchase a license.

Description

This tool can be used to fix undershot and overshot arcs, two common topological errors,

in an input vector lines file (input). In addition to the input lines vector, the user must

also specify the output vector (output) and the snap distance (snap). All dangling arcs

that are within this threshold snap distance of another line feature will be connected to the

neighbouring feature. If the input lines network is a vector stream network, users are advised

to apply the repair_stream_vector_topology tool instead.

See Also

repair_stream_vector_topology, clean_vector

Function Signature

def fix_dangling_arcs(self, input: Vector, snap_dist: float) -> Vector: ...

fuzzy_knn_classification

License Information

Use of this function requires a license for Whitebox Workflows for Python Professional (WbW-Pro). Please visit www.whiteboxgeo.com to purchase a license.

Description

This tool performs a supervised fuzzy k-nearest neighbour (k-NN) classification

using multiple predictor rasters (inputs), or features, and training data (training). This implementation is based

on the Keller et al. (1985) algorithm. The function can be used to model the spatial distribution of class data,

such as land-cover type, soil class, or vegetation type. The training data take the form of an input vector Shapefile

containing a set of points or polygons, for which the known class information is contained within a field (field) of

the attribute table. Each grid cell defines a stack of feature values (one value for each input raster), which serves as

a point within the multi-dimensional feature space. The algorithm works by identifying a user-defined number (k, -k) of

feature-space neighbours from the training set for each grid cell. The class that is then assigned to

the grid cell in the output raster (output) is then determined as a distance-weighted sum of classes among the

set of training data neighbours. The implementation uses Keller et al.'s (1985) third method of assigning membership to

training points. This method attempts to "fuzzify" the memberships of the labeled samples, which are in the class regions

intersecting in the sample space, and leaves the samples that are well away from this area with complete membership in the

known class. As a result, an unknown sample lying in this intersecting region will be influenced to a lesser extent by the

labeled samples of the training data that are in the "fuzzy" area of the class boundary.

Fuzzy kNN has often been found to outperform the regular kNN method for classification problems and has the extra advantage of outputing information about class membership uncertainty. This function optionally outputs two rasters, the first being the classified image (i.e., the crisply classified image determined by assigning each cell to the class for which the membership probability is highest) and the second raster indicating the membership probability. Grid cells with relatively low membership probability are indicative of greater uncertainty associated with the classification. Note that because membership probabilities across all classes must sum to 1.0 for each grid cell, the lowest possible membership probability for the "winning" class is 1 / N, where N is the number of input features. Examining the membership probability raster can provide insight into how the information class structure or training data sets can be improved, if for example, certain classes are commonly associated with lower membership probability.

The function uses leave-one-out-cross-validation (LOOCV) to measure the accuracy of the fit model. This approach is used to calculate the overall accuracy and Cohen's kappa index of agreement.

Note that the output image parameter (output) is optional. When unspecified, the tool will simply

report the model accuracy statistics and variable importance, allowing the user to experiment with different parameter

settings and input predictor raster combinations to optimize the model before applying it to classify

the whole image data set.

Like all supervised classification methods, this technique relies heavily on proper selection of training data. Training sites are exemplar areas/points of known and representative class value (e.g. land cover type). The algorithm determines the feature signatures of the pixels within each training area. In selecting training sites, care should be taken to ensure that they cover the full range of variability within each class. Otherwise the classification accuracy will be impacted. If possible, multiple training sites should be selected for each class. It is also advisable to avoid areas near the edges of class objects (e.g. land-cover patches), where mixed pixels may impact the purity of training site values.

After selecting training sites, the feature value distributions of each class type can be assessed using the evaluate_training_sites tool. In particular, the distribution of class values should ideally be non-overlapping in at least one feature dimension.

The fuzzy k-NN algorithm is based on the calculation of distances in multi-dimensional space. Feature scaling is

essential to the application of fuzzy k-NN modelling, especially when the ranges of the features are different, for

example, if they are measured in different units. Without scaling, features with larger ranges will have

greater influence in computing the distances between points. The tool offers three options for feature-scaling (scaling),

including 'None', 'Normalize', and 'Standardize'. Normalization simply rescales each of the features onto

a 0-1 range. This is a good option for most applications, but it is highly sensitive to outliers because

it is determined by the range of the minimum and maximum values. Standardization

rescales predictors using their means and standard deviations, transforming the data into z-scores. This

is a better option than normalization when you know that the data contain outlier values; however, it does

does assume that the feature data are somewhat normally distributed, or are at least symmetrical in

distribution.

Because the fuzzy k-NN algorithm calculates distances in feature-space, like many other related algorithms, it suffers from the curse of dimensionality. Distances become less meaningful in high-dimensional space because the vastness of these spaces means that distances between points are less significant (more similar). As such, if the predictor list includes insignificant or highly correlated variables, it is advisable to exclude these features during the model-building phase, or to use a dimension reduction technique such as principal_component_analysis to transform the features into a smaller set of uncorrelated predictors.

Note that the knn_regression tool can be used to apply the k-NN method to the modelling of continuous data.

Memory Usage

The peak memory usage of this tool is approximately 8 bytes per grid cell × # predictors.

References

Keller, J. M., Gray, M. R., and Givens, J. A. (1985). A fuzzy k-nearest neighbor algorithm. IEEE transactions on systems, man, and cybernetics, (4), 580-585.

See Also

knn_classification,, knn_regression, random_forest_classification, svm_classification, parallelepiped_classification, evaluate_training_sites

Function Signature

def knn_classification(self, input_rasters: List[Raster], training_data: Vector, class_field_name: str, scaling_method: str = "none", k: int = 5, test_proportion: float = 0.2, use_clipping: bool = False, create_output: bool = False) -> Optional[Raster]: ...

generalize_classified_raster

License Information

Use of this function requires a license for Whitebox Workflows for Python Professional (WbW-Pro). Please visit www.whiteboxgeo.com to purchase a license.

Description

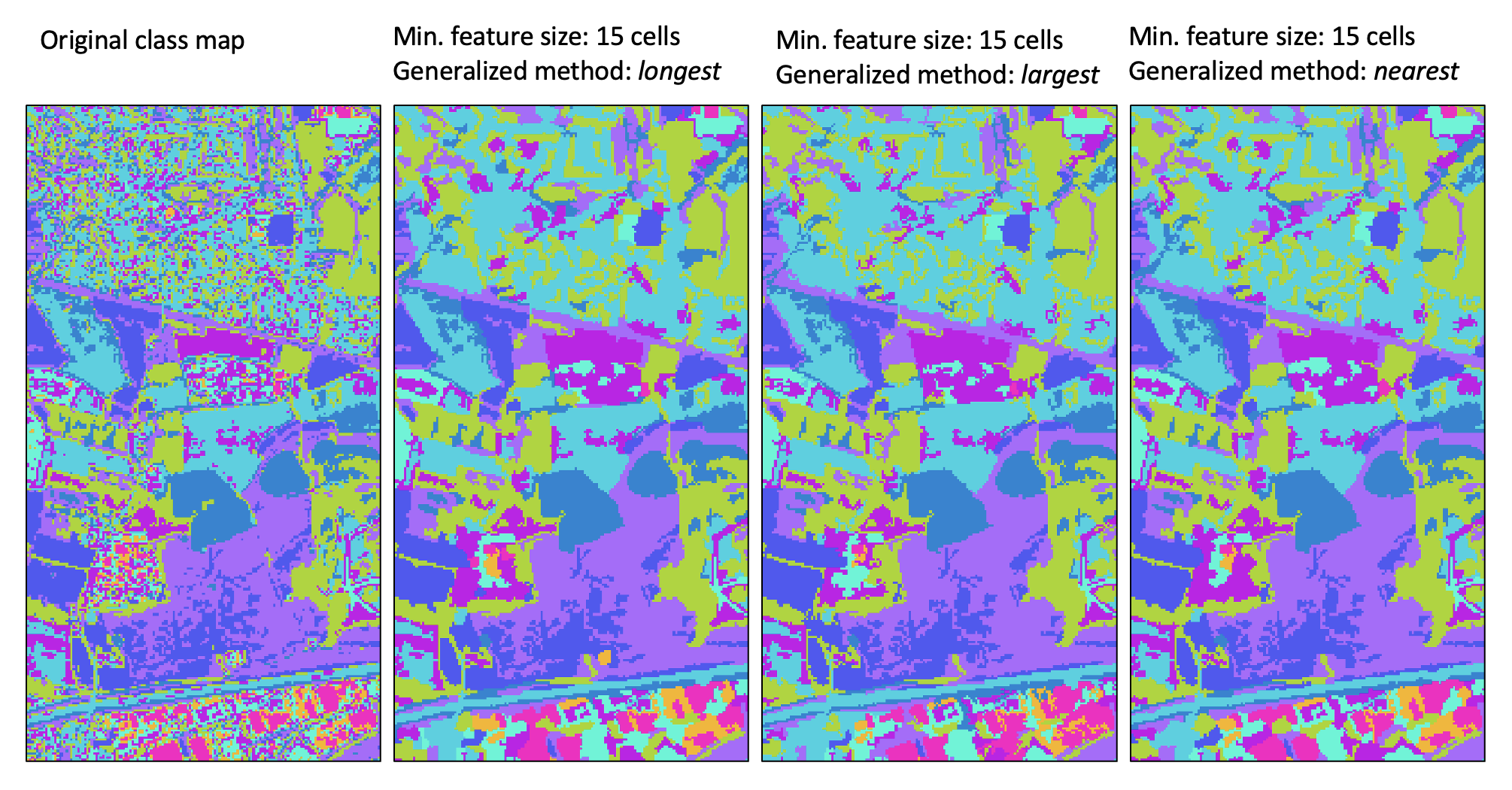

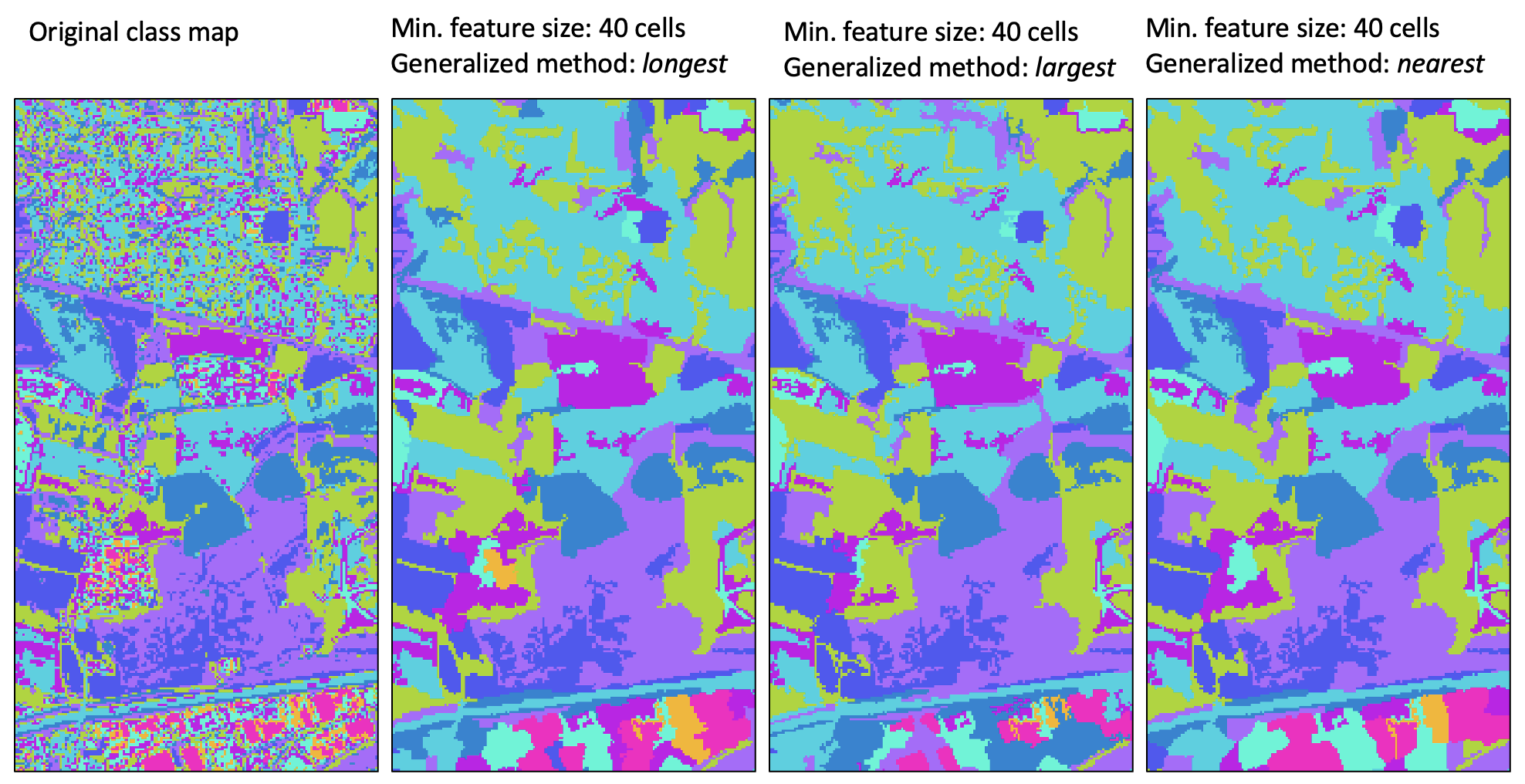

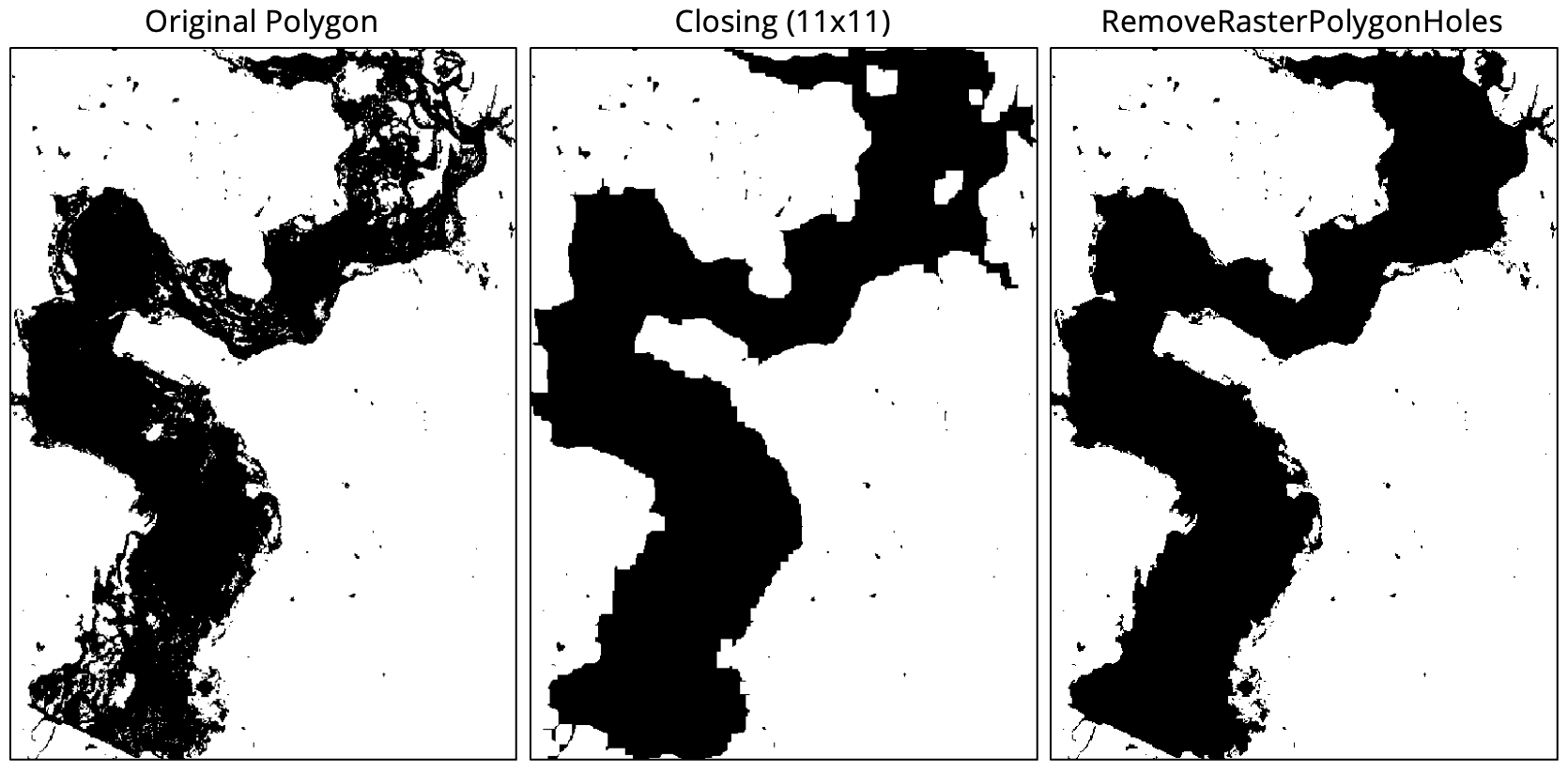

This tool can be used to generalize a raster containing class or object features. Such rasters are usually derived from some classification procedure (e.g. image classification and landform classification), or as the output of a segmentation procedure (image_segmentation). Rasters that are created in this way often contain many very small features that make their interpretation, or vectorization, challenging. Therefore, it is common for practitioners to remove the smaller features. Many different approaches have been used for this task in the past. For example, it is common to remove small features using a filtering based approach (majority_filter). While this can be an effective strategy, it does have the disadvantage of modifying all of the boundaries in the class raster, including those that define larger features. In many applications, this can be a serious issue of concern.

The generalize_classified_raster tool offers an alternative method for simplifying class rasters.

The process begins by identifying each contiguous group of cells in the input (i.e. a clumping

operation) and then defines the subset of features that are smaller than the user-specified minimum

feature size (min_size), in grid cells. This set of small features is then dealt with using

one of three methods (method). In the first method (longest), a small feature may be reassigned the class value

of the neighbouring feature with the longest shared border. The sum of the neighbouring

feature size and the small feature size must be larger than the specified size threshold, and the tool will iterate through this

process of reassigning feature values to neighbouring values until each small feature has been resolved.

The second method, largest, operates in much the same way as the first, except that objects are reassigned the value of the largest neighbour. Again, this process of reassigning small feature values iterates until every small feature has been reassigned to a large neighbouring feature.

The third and last method (nearest) takes a different approach to resolving the reassignment of small features. Using the nearest generalization approach, each grid cell contained within a small feature is reassigned the value of the nearest large neighbouring feature. When there are two or more neighbouring features that are equally distanced to a small feature cell, the cell will be reassigned to the largest neighbour. Perhaps the most significant disadvantage of this approach is that it creates a new artificial boundary in the output image that is not contained within the input class raster. That is, with the previous two methods, boundaries associated with smaller features in the input images are 'erased' in the output map, but every boundary in the output raster exactly matches boundaries within the input raster (i.e. the output boundaries are a subset of the input feature boundaries). However, with the nearest method, artificial boundaries, determined by the divide between nearest neighbours, are introduced to the output raster and these new feature boundaries do not have any basis in the original classification/segmentation process. Thus caution should be exercised when using this approach, especially when larger minimum size thresholds are used. The longest method is the recommended approach to class feature generalization.

For a video tutorial on how to use the generalize_classified_raster tool, see this YouTube video.

See Also

generalize_with_similarity, majority_filter, image_segmentation

Function Signature

def generalize_classified_raster(self, raster: Raster, area_threshold: int = 5, method: str = "longest") -> Raster: ...

generalize_with_similarity

License Information

Use of this function requires a license for Whitebox Workflows for Python Professional (WbW-Pro). Please visit www.whiteboxgeo.com to purchase a license.

Description

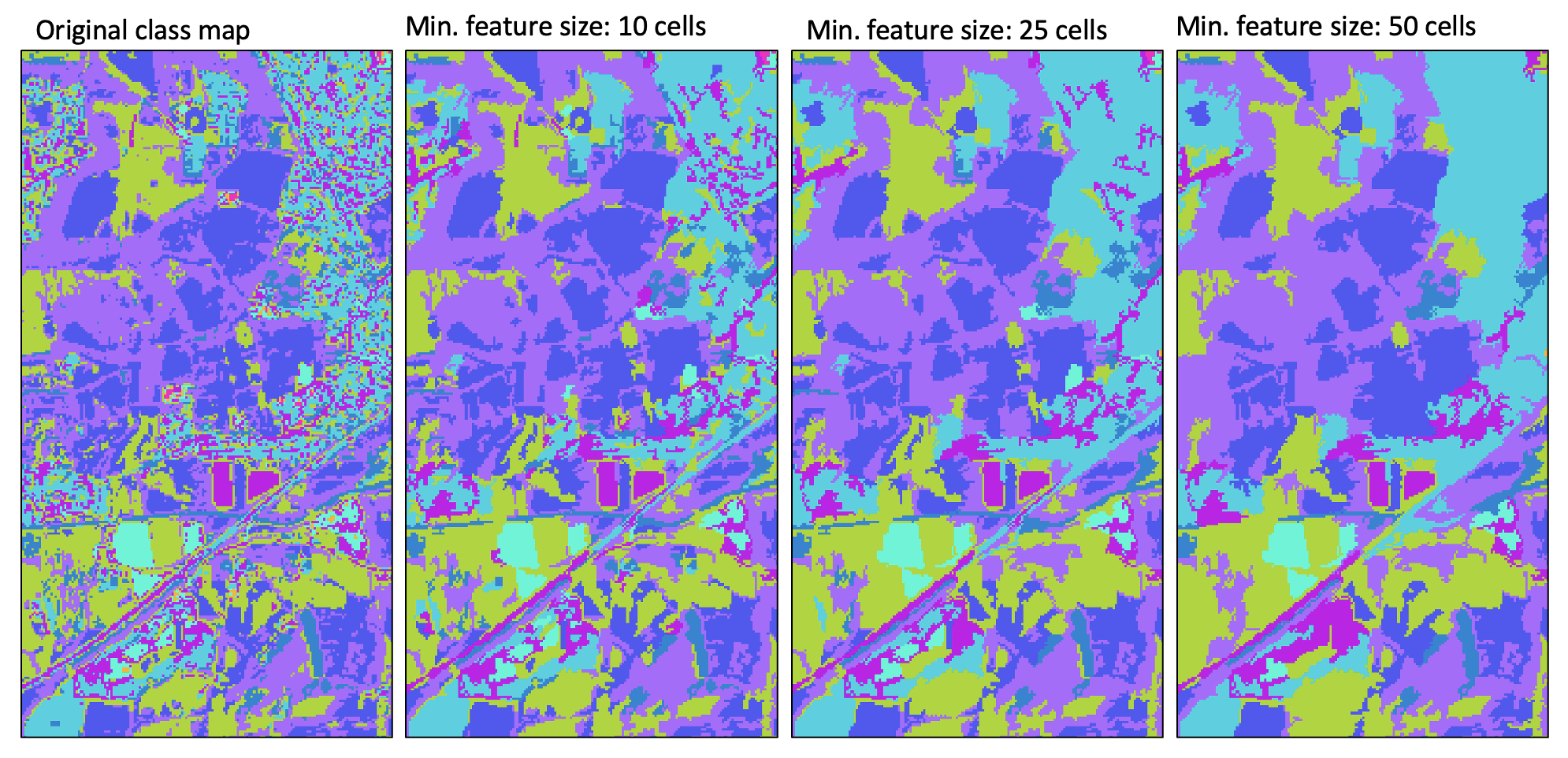

This tool can be used to generalize a raster containing class features (input) by reassigning

the identifier values of small features (min_size) to those of neighbouring features. Therefore, this tool

performs a very similar operation to the generalize_classified_raster tool. However, while the

generalize_classified_raster tool re-labels small features based on the geometric properties of

neighbouring features (e.g. neighbour with the longest shared border, largest neighbour, or

nearest neighbour), the generalize_with_similarity tool reassigns feature labels based on

similarity with neighbouring features. Similarity is determined using a series of input similarity

criteria rasters (similarity), which may be factors used in the creation of the input

class raster. For example, the similarlity rasters may be bands of multi-spectral imagery, if the

input raster is a classified land-cover map, or DEM-derived land surface parameters, if the input

raster is a landform class map.

The tool works by identifying each contiguous group of pixels (features) in the input class raster (input),

i.e. a clumping operation. The mean value is then calculated for each feature and each similarity

input, which defines a multi-dimensional 'similarity centre point' associated with each feature. It should be noted

that the similarity raster data are standardized prior to calculating these centre point values.

Lastly, the tool then reassigns the input label values of all features smaller than the user-specified

minimum feature size (min_size) to that of the neighbouring feature with the shortest distance

between similarity centre points.

For small features that are entirely enclosed by a single larger feature, this process will result in the same generalization solution presented by any of the geometric-based methods of the generalize_classified_raster tool. However, for small features that have more than one neighbour, this tool may provide a superior generalization solution than those based solely on geometric information.

For a video tutorial on how to use the generalize_with_similarity tool, see this YouTube video.

See Also

generalize_classified_raster, majority_filter, image_segmentation

Function Signature

def generalize_with_similarity(self, raster: Raster, similarity_rasters: List[Raster], area_threshold: int = 5) -> Raster: ...

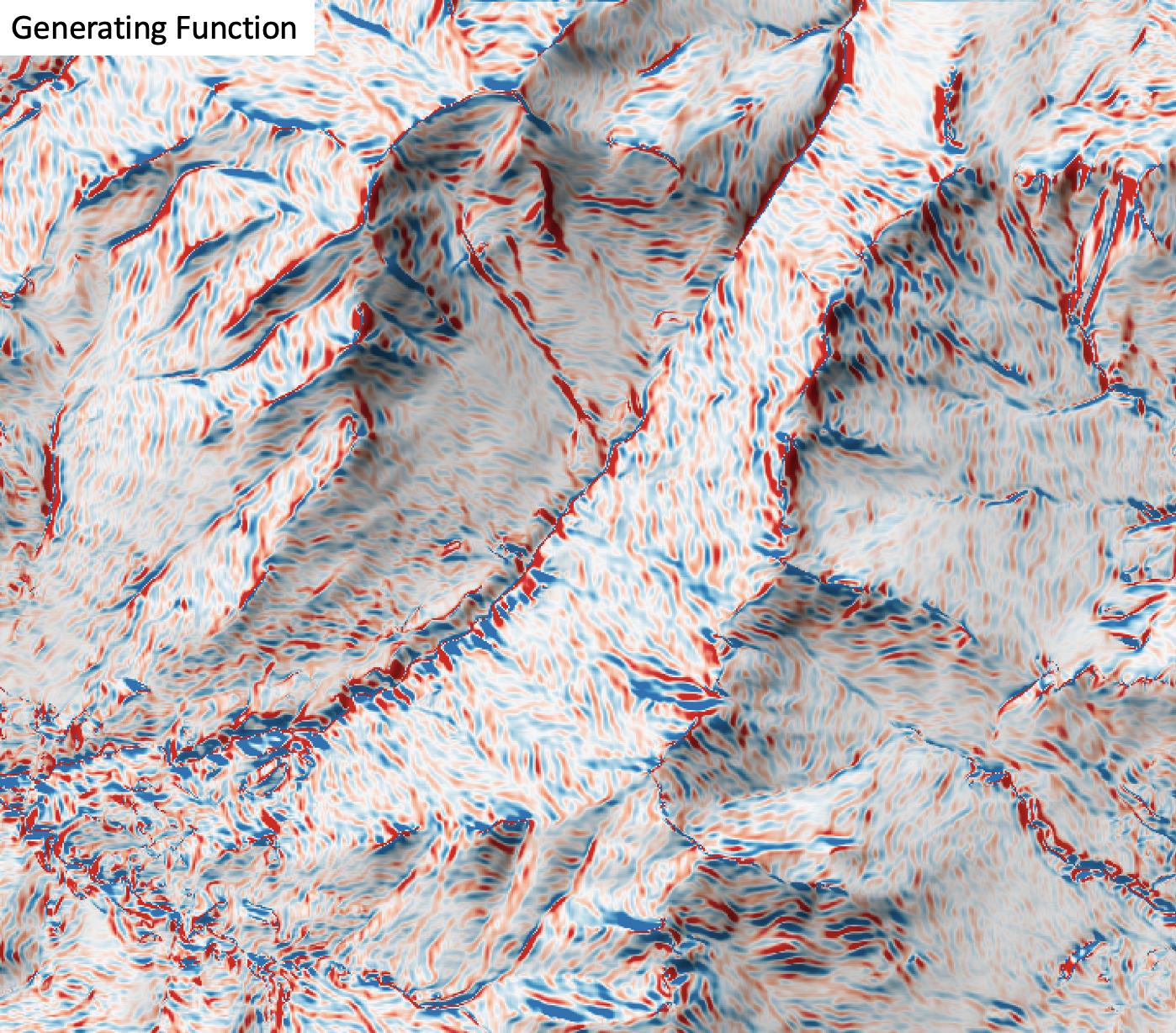

generating_function

License Information

Use of this function requires a license for Whitebox Workflows for Python Professional (WbW-Pro). Please visit www.whiteboxgeo.com to purchase a license.

Description

This tool calculates the generating function (Shary and Stepanov, 1991) from a digital elevation model (DEM). Florinsky (2016) describes generating function as a measure for the deflection of tangential curvature from loci of extreme curvature of the topographic surface. Florinsky (2016) demonstrated the application of this variable for identifying landscape structural lines, i.e. ridges and thalwegs, for which the generating function takes values near zero. Ridges coincide with divergent areas where generating function is approximately zero, while thalwegs are associated with convergent areas with generating function values near zero. This variable has positive values, zero or greater and is measured in units of m-2.

The user must specify the name of the input DEM (dem) and the output raster (output). The

The Z conversion factor (zfactor) is only important when the vertical and horizontal units are not the

same in the DEM. When this is the case, the algorithm will multiply each elevation in the DEM by the

Z Conversion Factor. Raw generating function values are often challenging to visualize given their range and magnitude,

and as such the user may opt to log-transform the output raster (log). Transforming the values

applies the equation by Shary et al. (2002):

Θ' = sign(Θ) ln(1 + 10n|Θ|)

where Θ is the parameter value and n is dependent on the grid cell size.

This tool uses the 3rd-order bivariate Taylor polynomial method described by Florinsky (2016). Based on a polynomial fit of the elevations within the 5x5 neighbourhood surrounding each cell, this method is considered more robust against outlier elevations (noise) than other methods. For DEMs in geographic coordinate systems, however, this tool cannot use the same 3x3 polynomial fitting method for equal angle grids, also described by Florinsky (2016), that is used by the other curvature tools in this software. That is because generating function uses 3rd order partial derivatives, which cannot be calculated using the 9 elevations in a 3x3; more elevation values are required (i.e. a 5x5 window). Thus, this tool uses the same 5x5 method used for DEMs in projected coordinate systems, and calculates the average linear distance between neighbouring cells in the vertical and horizontal directions using the Vincenty distance function. Note that this may cause a notable slow-down in algorithm performance and has a lower accuracy than would be achieved using an equal angle method, because it assumes a square pixel (in linear units).

References

Florinsky, I. (2016). Digital terrain analysis in soil science and geology. Academic Press.

Florinsky, I. V. (2017). An illustrated introduction to general geomorphometry. Progress in Physical Geography, 41(6), 723-752.

Koenderink, J. J., and Van Doorn, A. J. (1992). Surface shape and curvature scales. Image and vision computing, 10(8), 557-564.

Shary P. A., Sharaya L. S. and Mitusov A. V. (2002) Fundamental quantitative methods of land surface analysis. Geoderma 107: 1–32.

Shary P. A. and Stepanov I. N. (1991) Application of the method of second derivatives in geology. Transactions (Doklady) of the USSR Academy of Sciences, Earth Science Sections 320: 87–92.

See Also

shape_index, minimal_curvature, maximal_curvature, tangential_curvature, profile_curvature, mean_curvature, gaussian_curvature

Function Signature

def generating_function(self, dem: Raster, log_transform: bool = False, z_factor: float = 1.0) -> Raster: ...

horizon_area

License Information

Use of this function requires a license for Whitebox Workflows for Python Professional (WbW-Pro). Please visit www.whiteboxgeo.com to purchase a license.

This tool calculates horizon area, i.e., the area of the horizon polygon centered on each point in an

input digital elevation model (DEM). Horizon area is therefore conceptually related to the

viewhed and visibility_index

functions. Horizon area can be thought of as an approximation of the viewshed area and is therefore

faster to calculate a spatial distribution of compared with the visibility index. Horizon area is

measured in hectares.

The user must specify an input DEM (dem), the azimuth fraction (az_fraction), the maximum search

distance (max_dist), and the height offset of the observer (observer_hgt_offset). The input DEM

should usually be a digital surface model (DSM) that contains significant off-terrain objects. Such a

model, for example, could be created using the first-return points of a LiDAR data set, or using the

lidar_digital_surface_model tool. The azimuth

fraction should be an even divisor of 360-degrees and must be between 1-45 degrees.

The tool operates by calculating horizon angle (see horizon_angle)

rasters from the DSM based on the user-specified azimuth fraction (az_fraction). For example, if an azimuth

fraction of 15-degrees is specified, horizon angle rasters would be calculated for the solar azimuths 0,

15, 30, 45... A horizon angle raster evaluates the vertical angle between each grid cell in a DSM and a

distant obstacle (e.g. a mountain ridge, building, tree, etc.) that obscures the view in a specified

direction. In calculating horizon angle, the user must specify the maximum search distance (max_dist),

in map units, beyond which the query for higher, more distant objects will cease. This parameter strongly

impacts the performance of the function, with larger values resulting in significantly longer processing-times.

With each evaluated direction, the coordinates of the horizon point is determined, using the azimuth and the distance to horizon, with each point then serving as a vertex in a horizon polygon. The shoelace algorithm is used to measure the area of each horizon polgon for the set of grid cells, which is then reported in the output raster.

The observer_hgt_offset parameter can be used to add an increment to the source cell's elevation. For

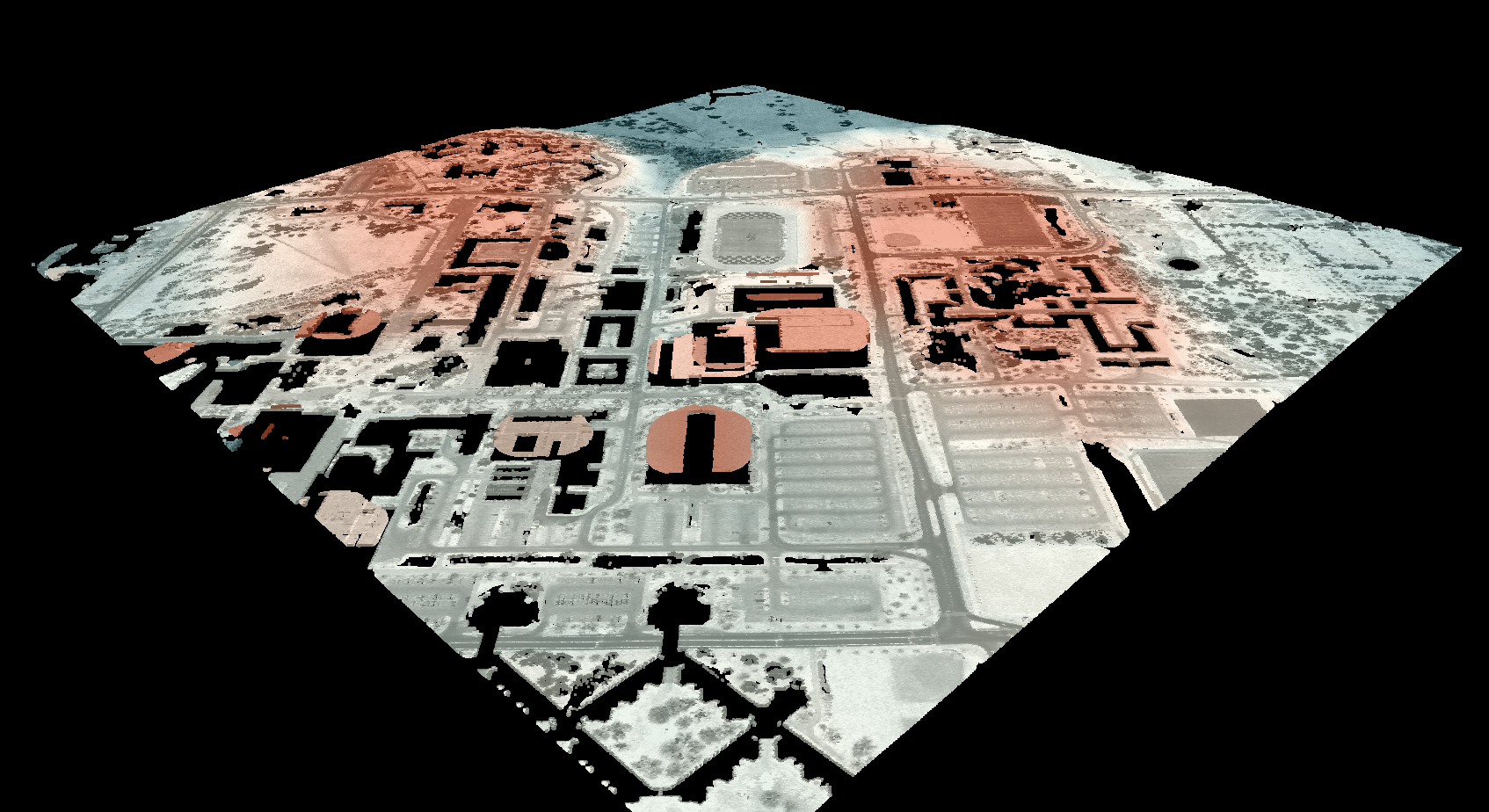

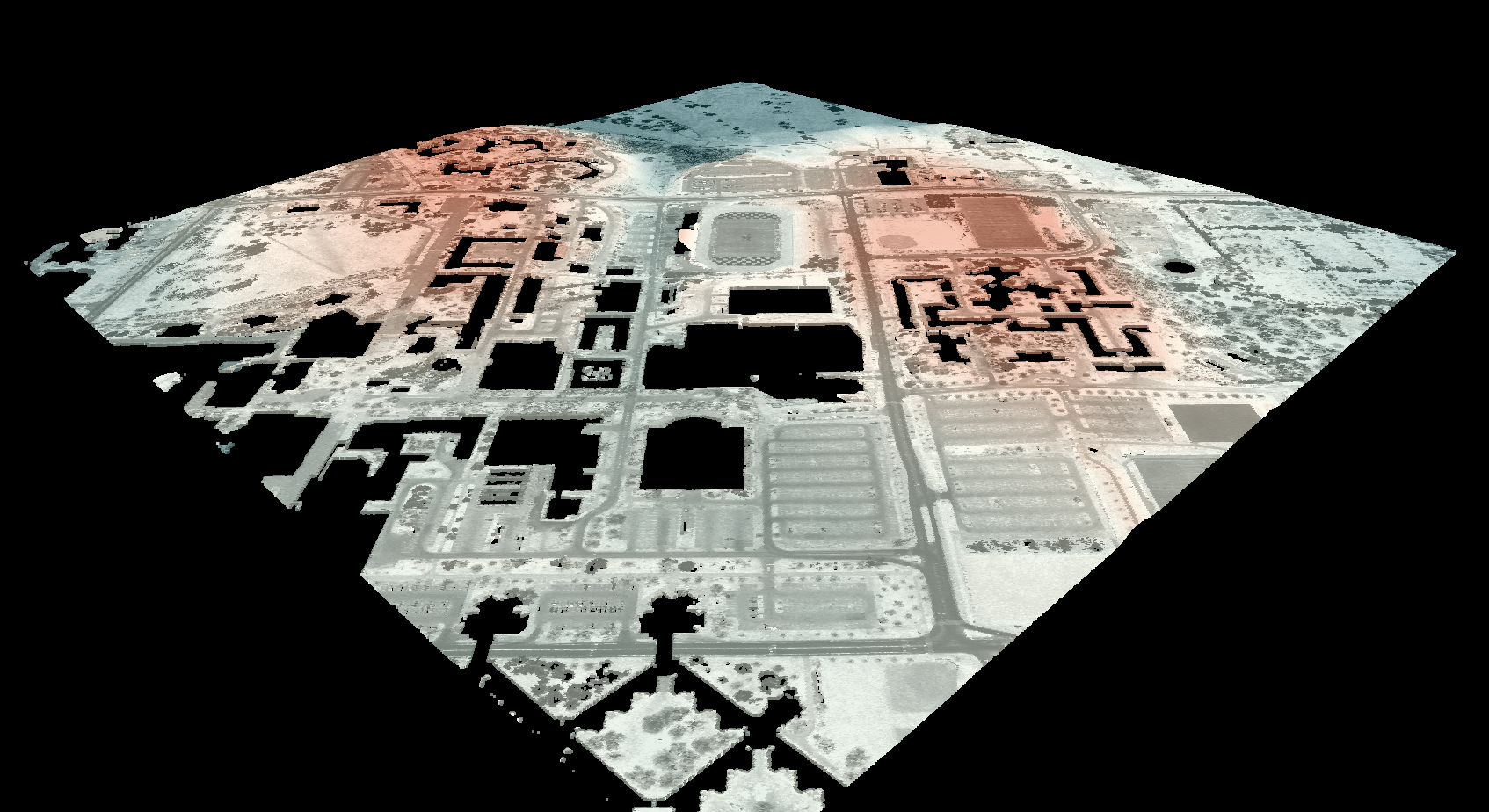

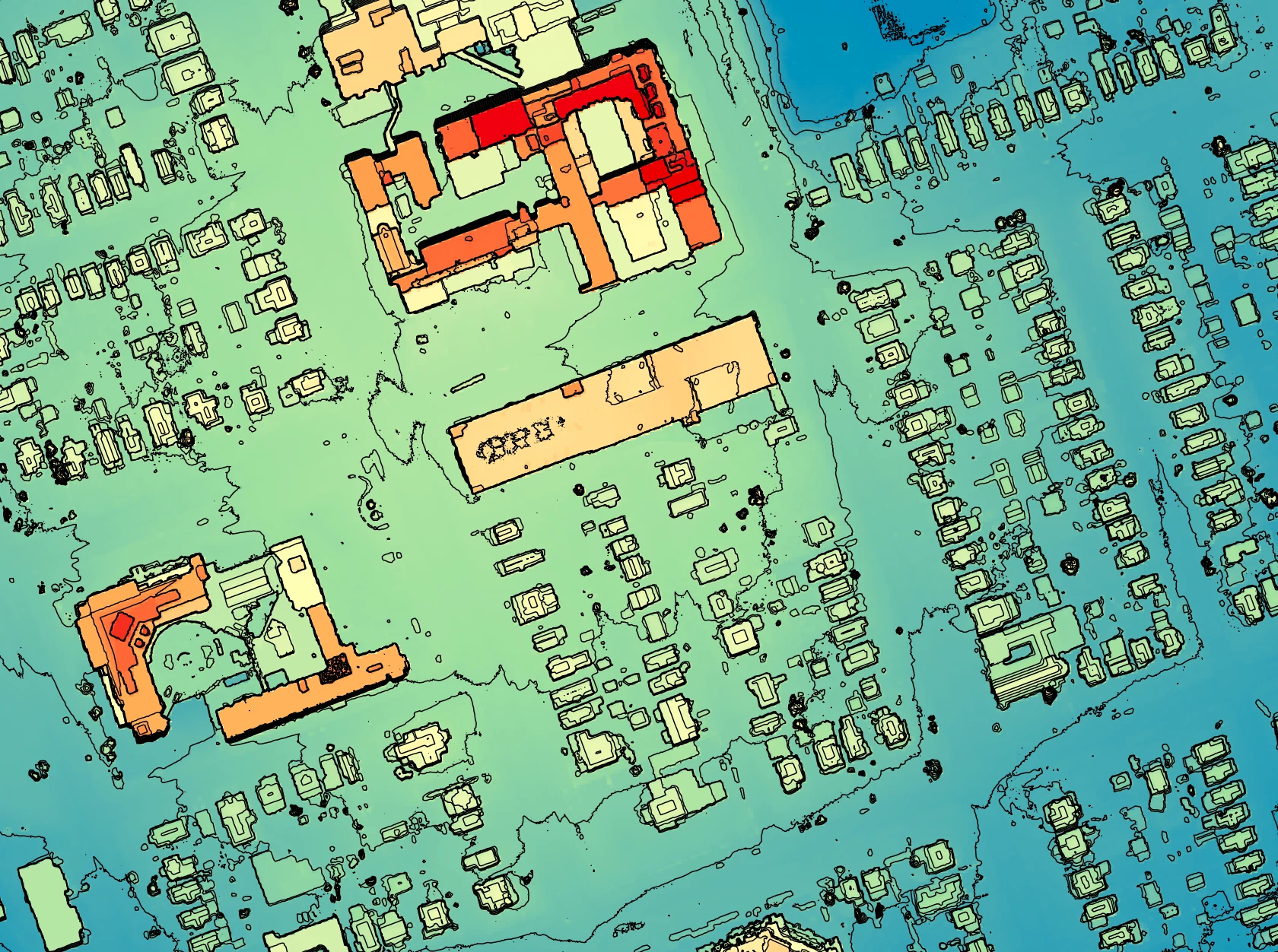

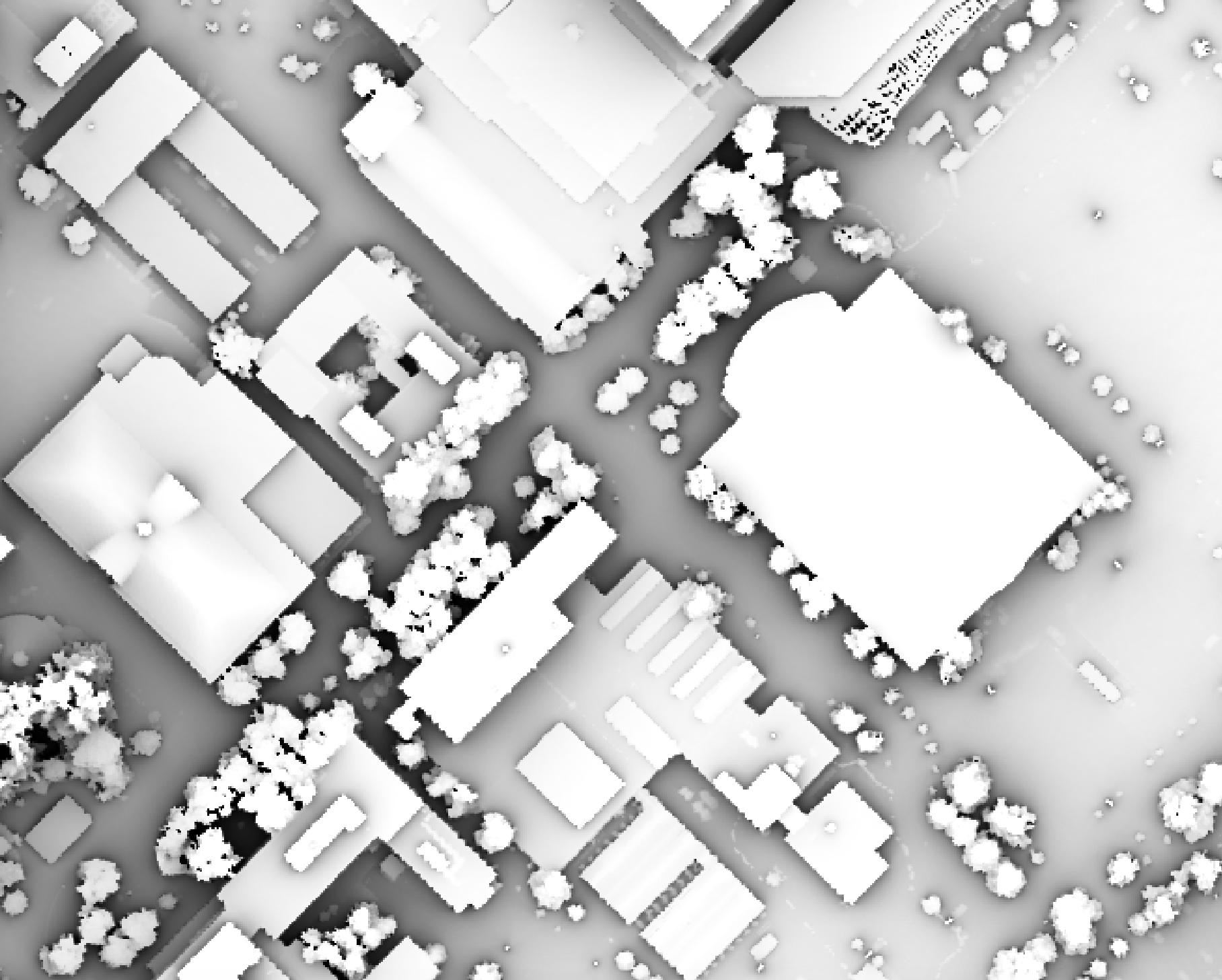

example, the following image shows the spatial pattern derived from a LiDAR DSM using observer_hgt_offset = 0.0:

Notice that there are several places, plarticularly on the flatter rooftops, where the local noise in the LiDAR DEM, associated with the individual scan lines, has resulted in a noisy pattern in the output. By adding a small height offset of the scale of this noise variation (0.15 m), we see that most of this noisy pattern is removed in the output below:

As another example, in the image below, the observer_hgt_offset parameter has been used to measure

the pattern of the index at a typical human height (1.7 m):

Notice how at this height a much larger area becomes visible on some of the flat rooftops where a guard wall prevents further viewing areas at shorter observer heights.

See Also

sky_view_factor, average_horizon_distance, openness, lidar_digital_surface_model, horizon_angle

Function Signature

def horizon_area(self, dem: Raster, az_fraction: float = 5.0, max_dist: float = float('inf'), observer_hgt_offset: float = 0.0) -> Raster: ...

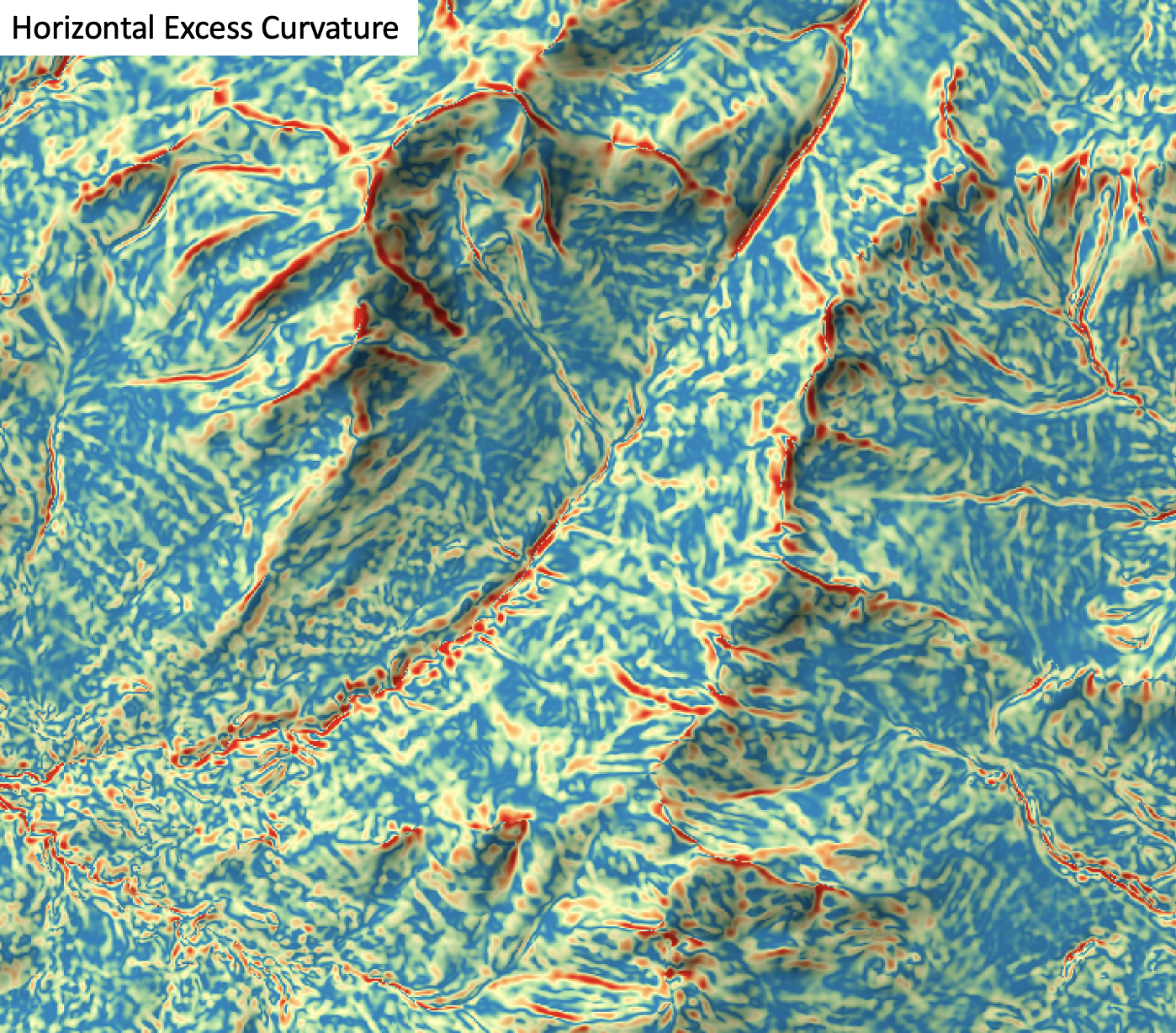

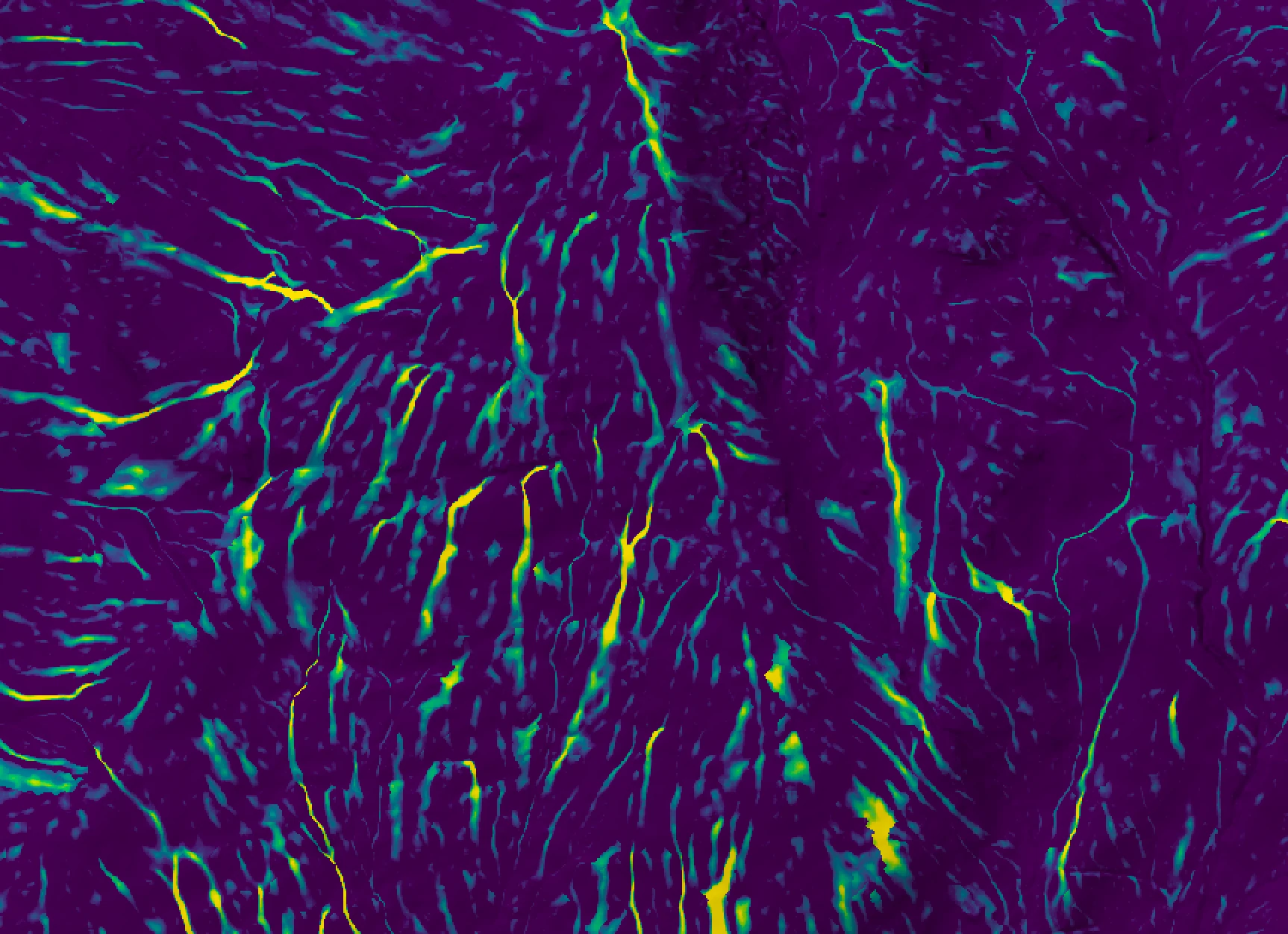

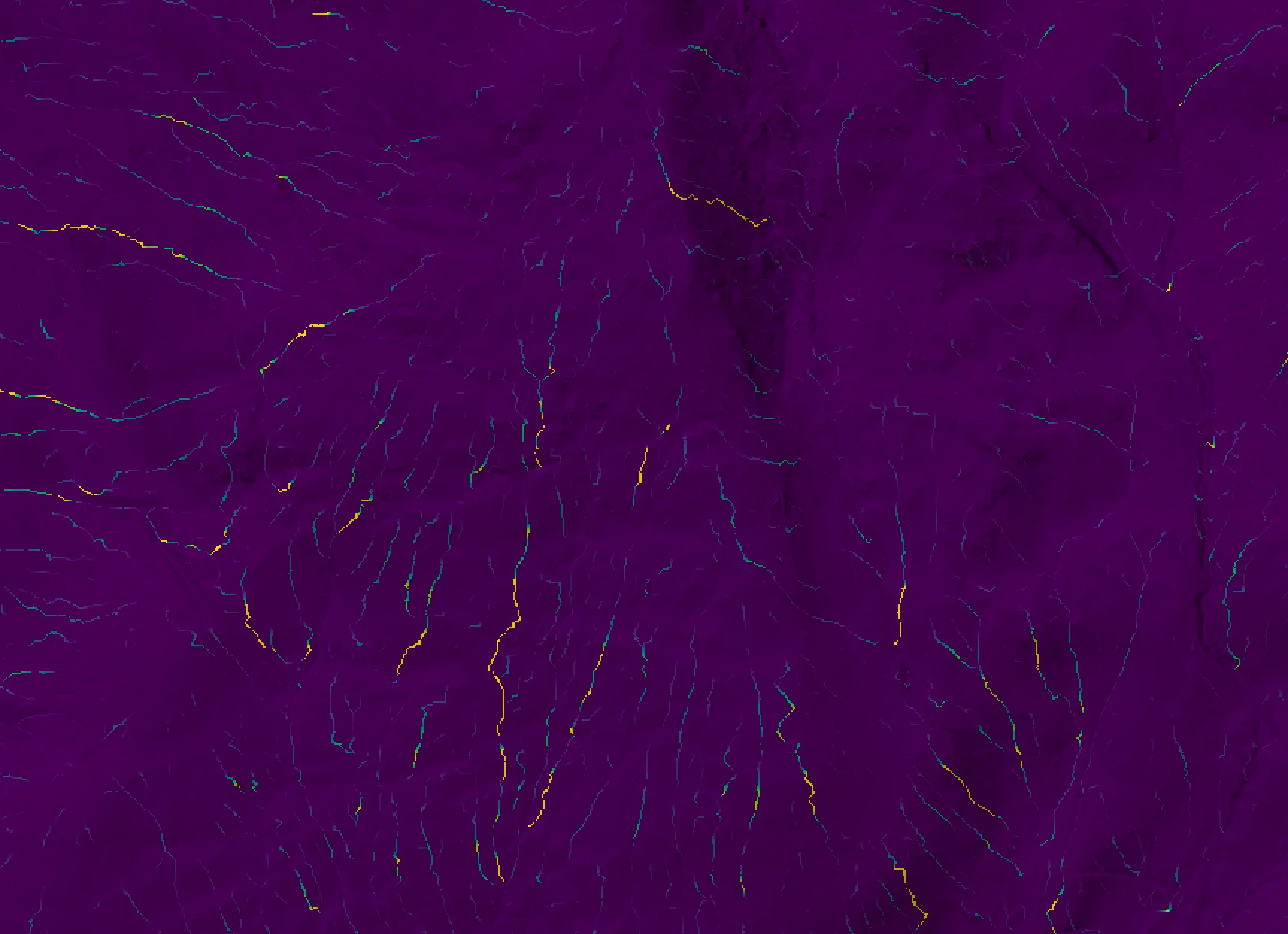

horizontal_excess_curvature

License Information

Use of this function requires a license for Whitebox Workflows for Python Professional (WbW-Pro). Please visit www.whiteboxgeo.com to purchase a license.

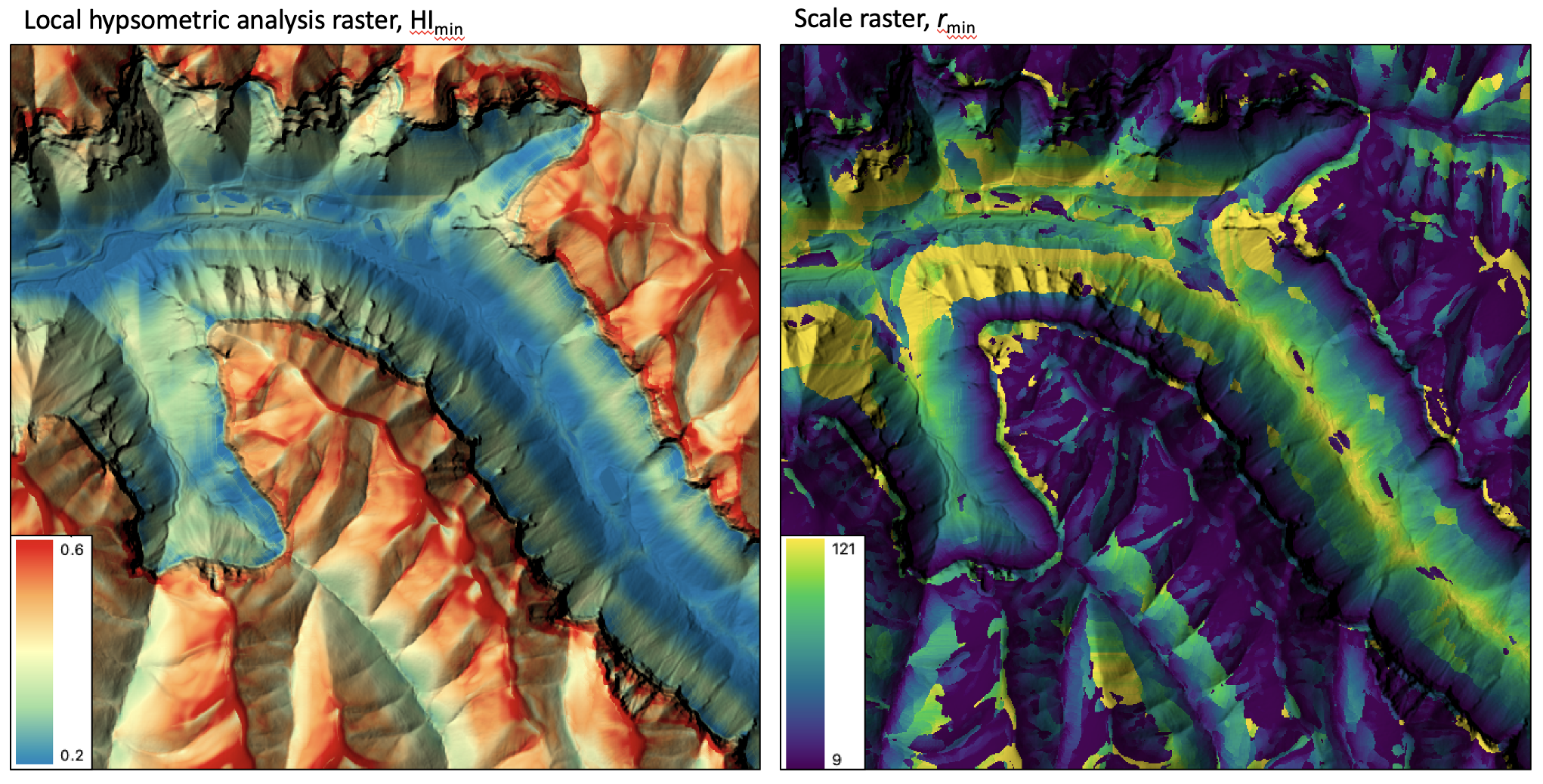

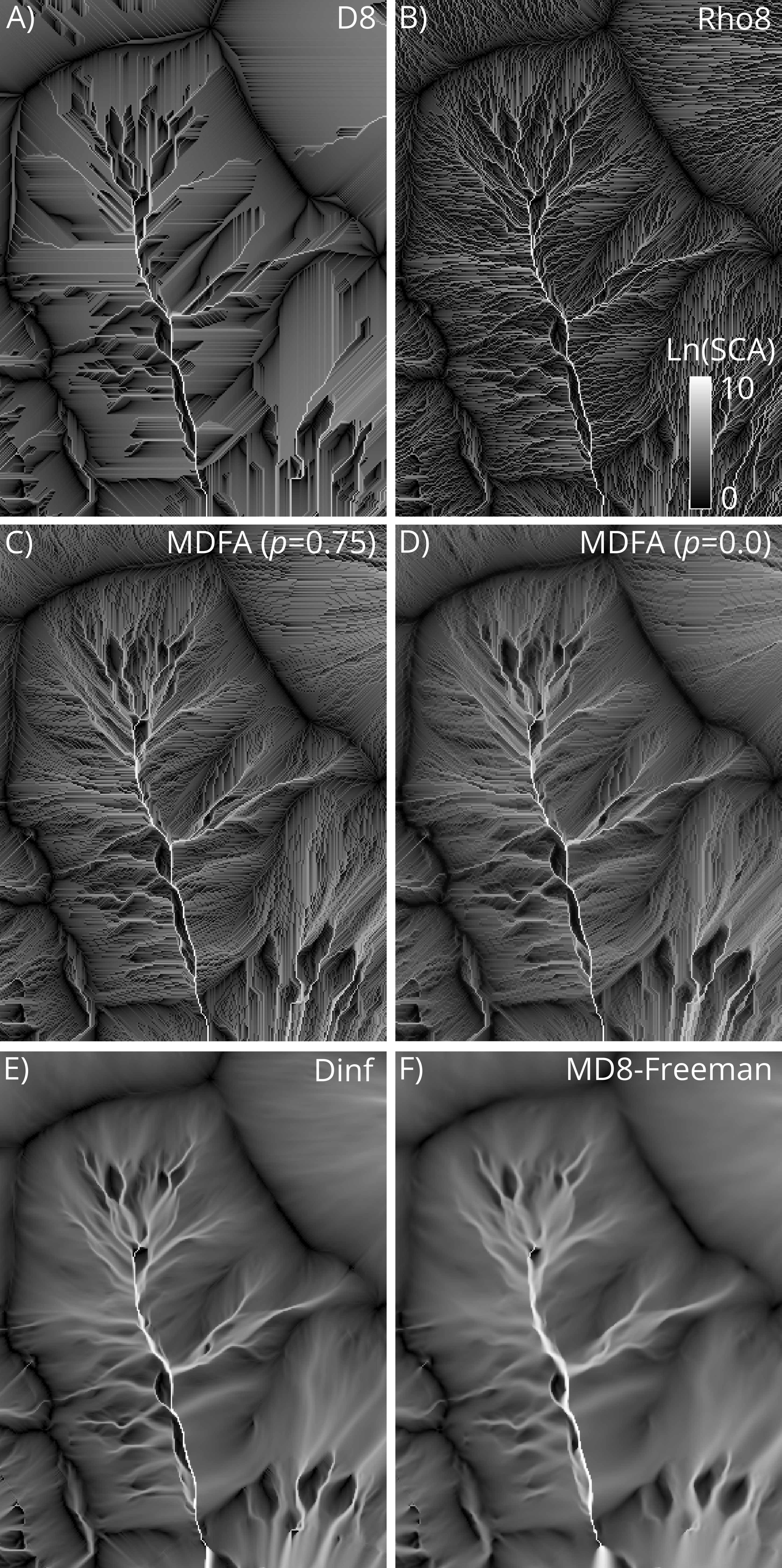

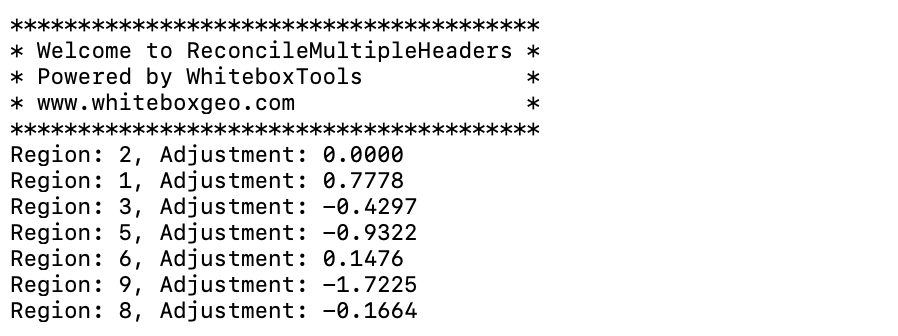

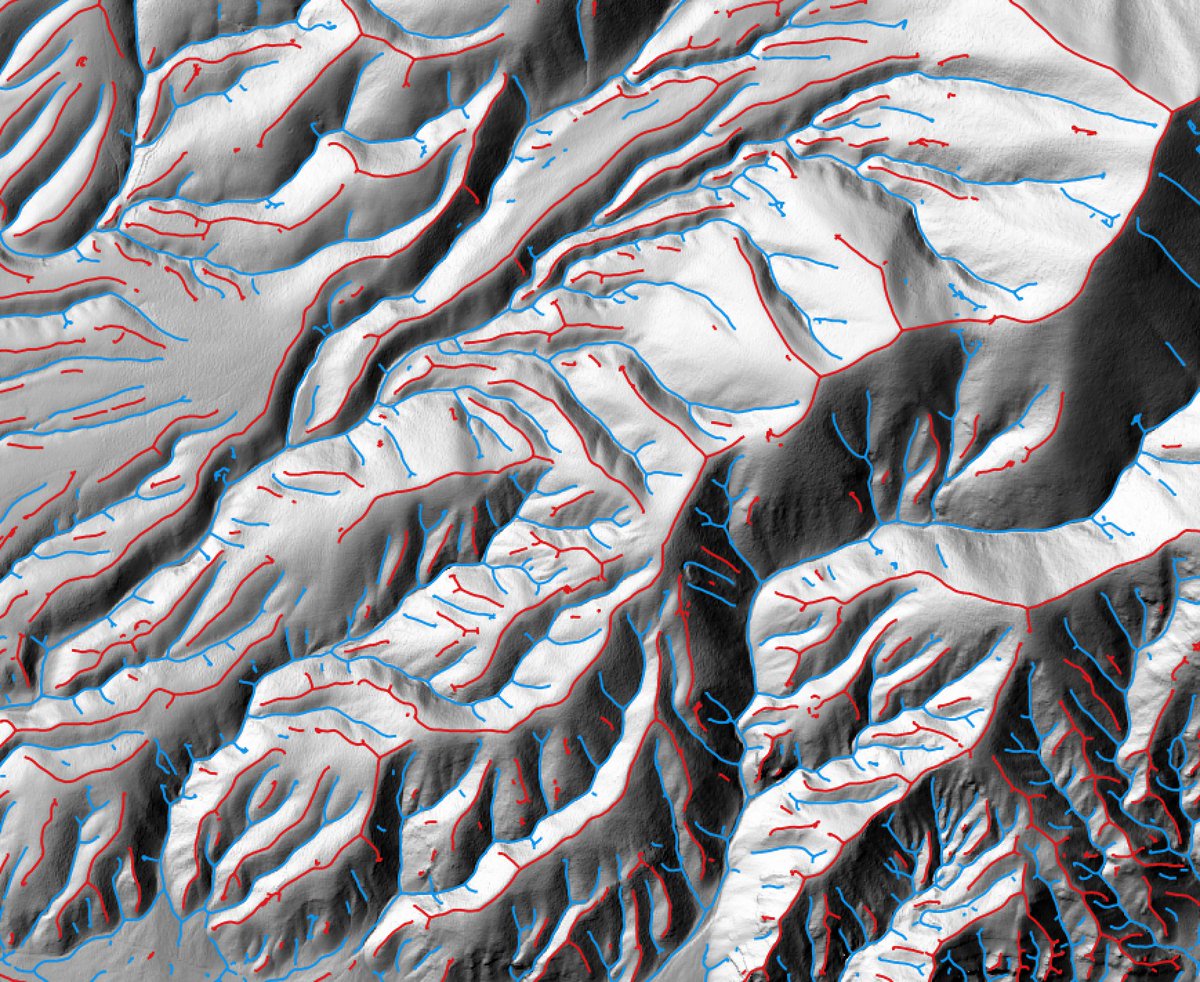

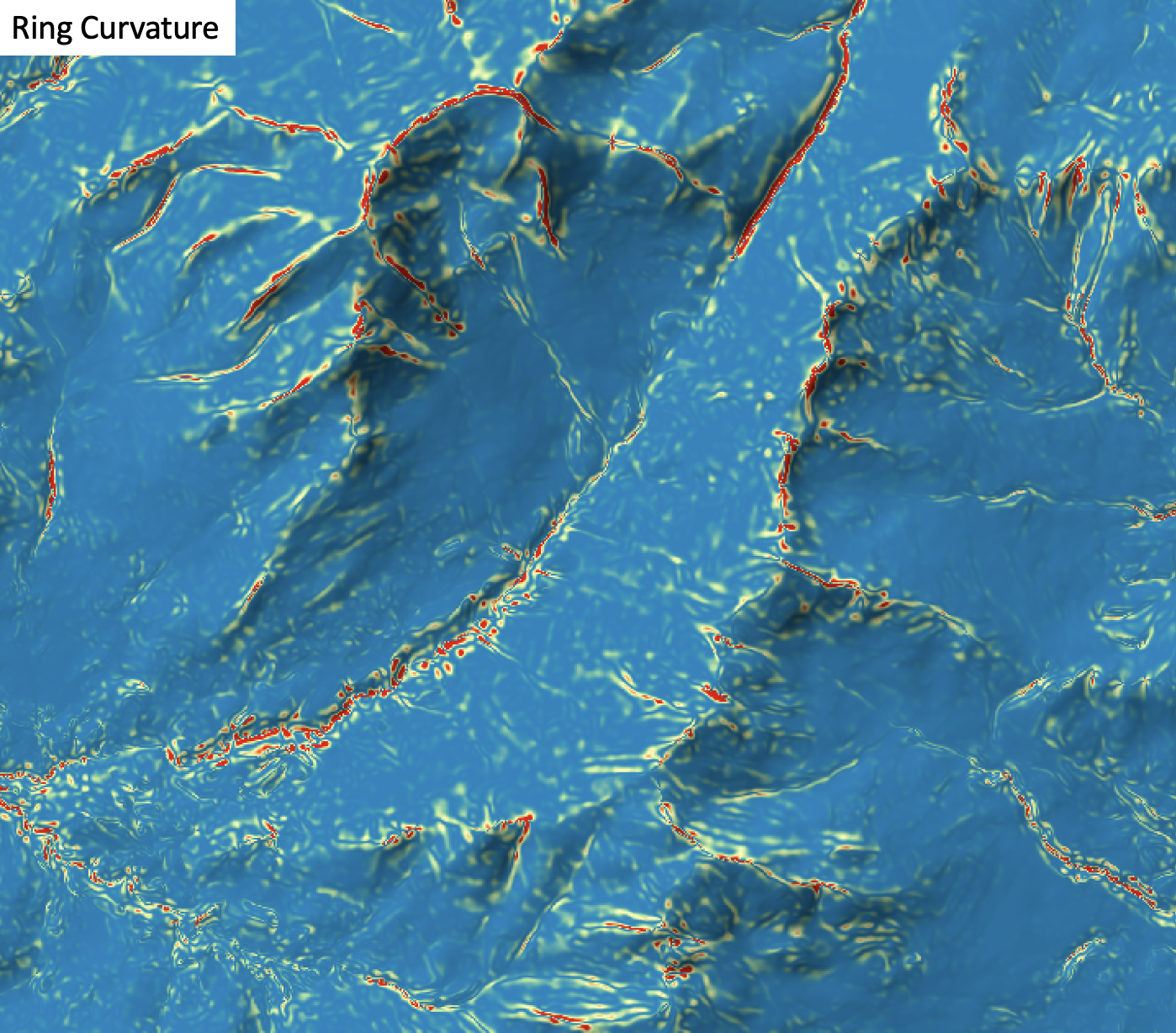

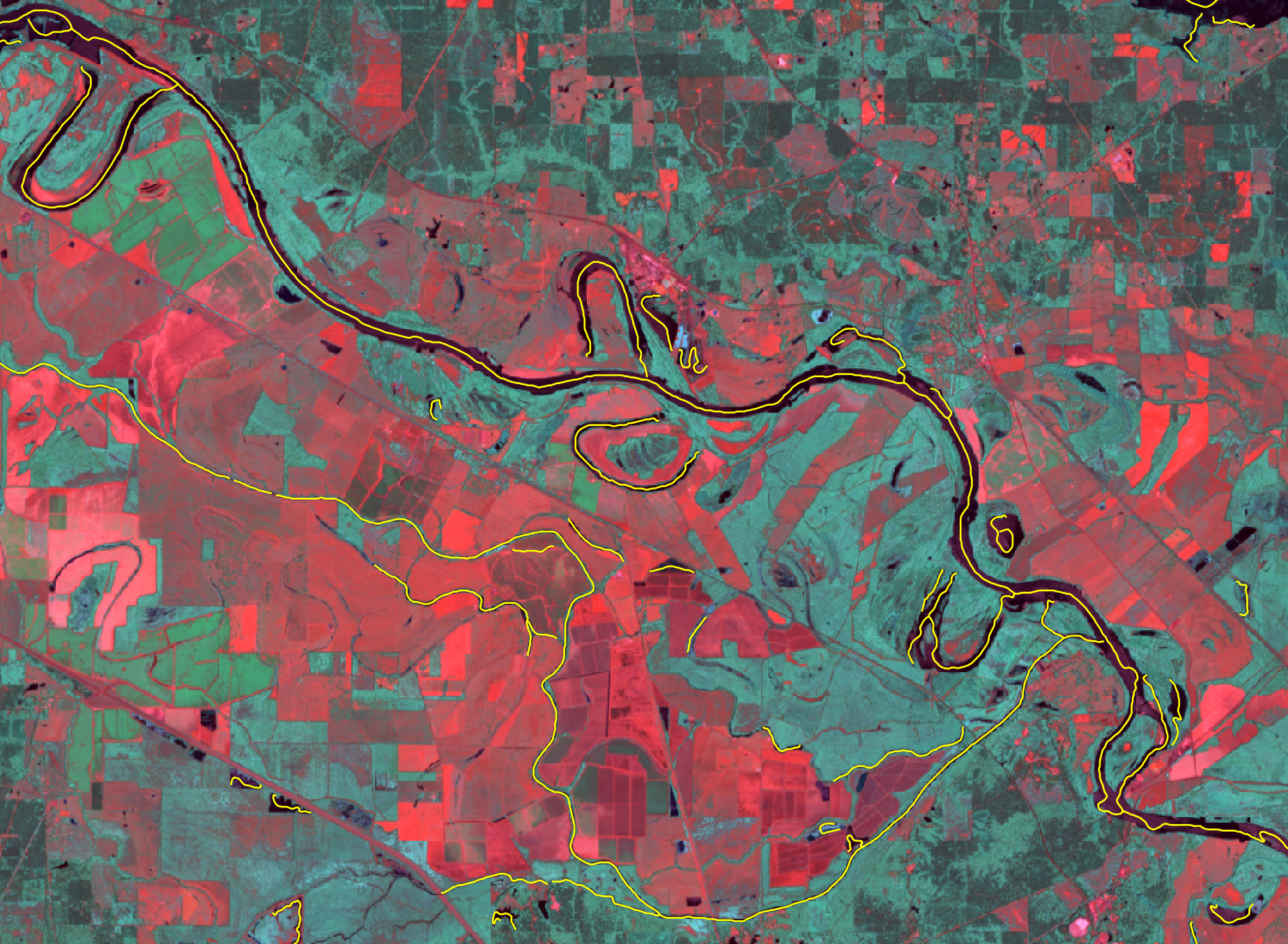

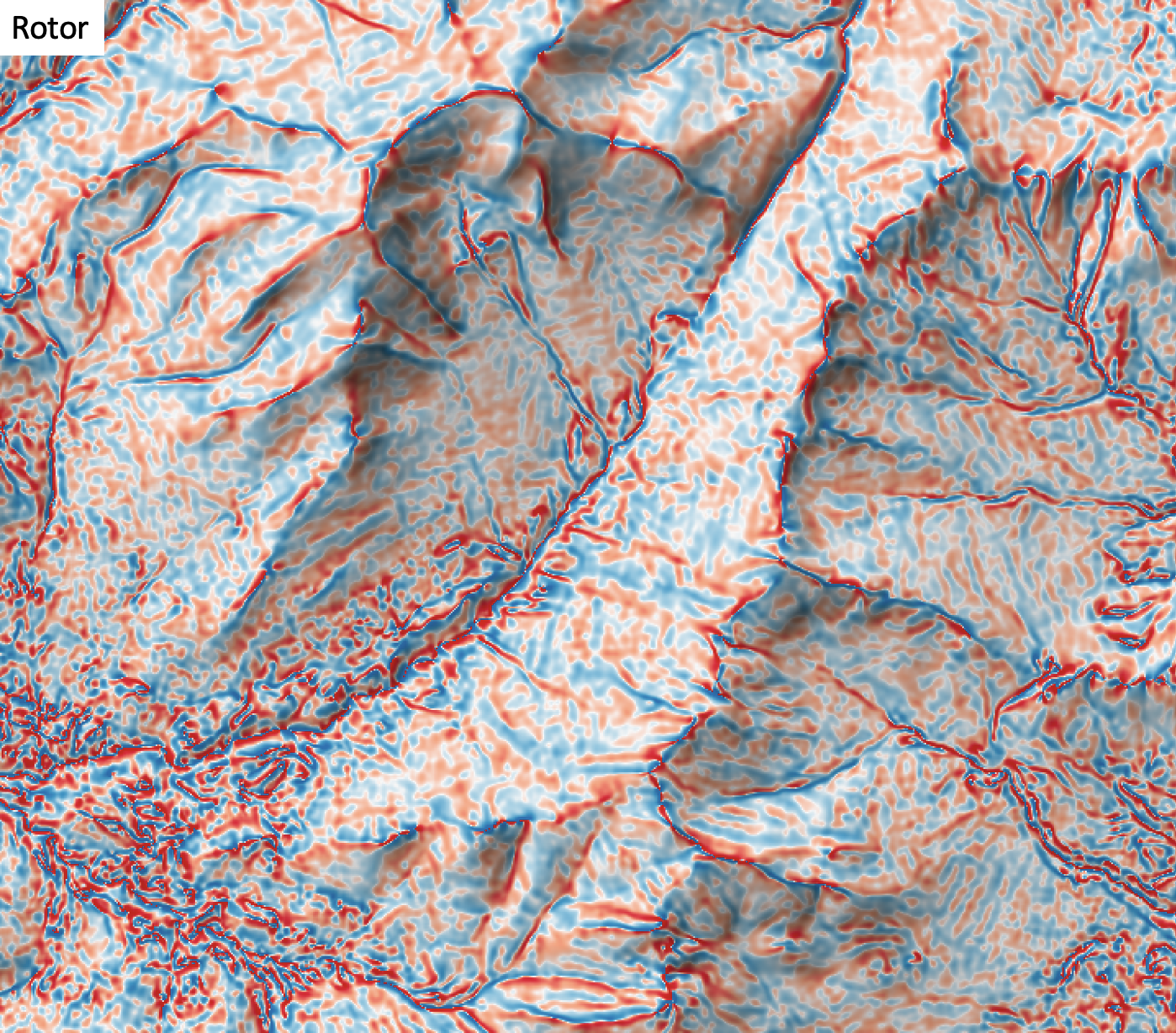

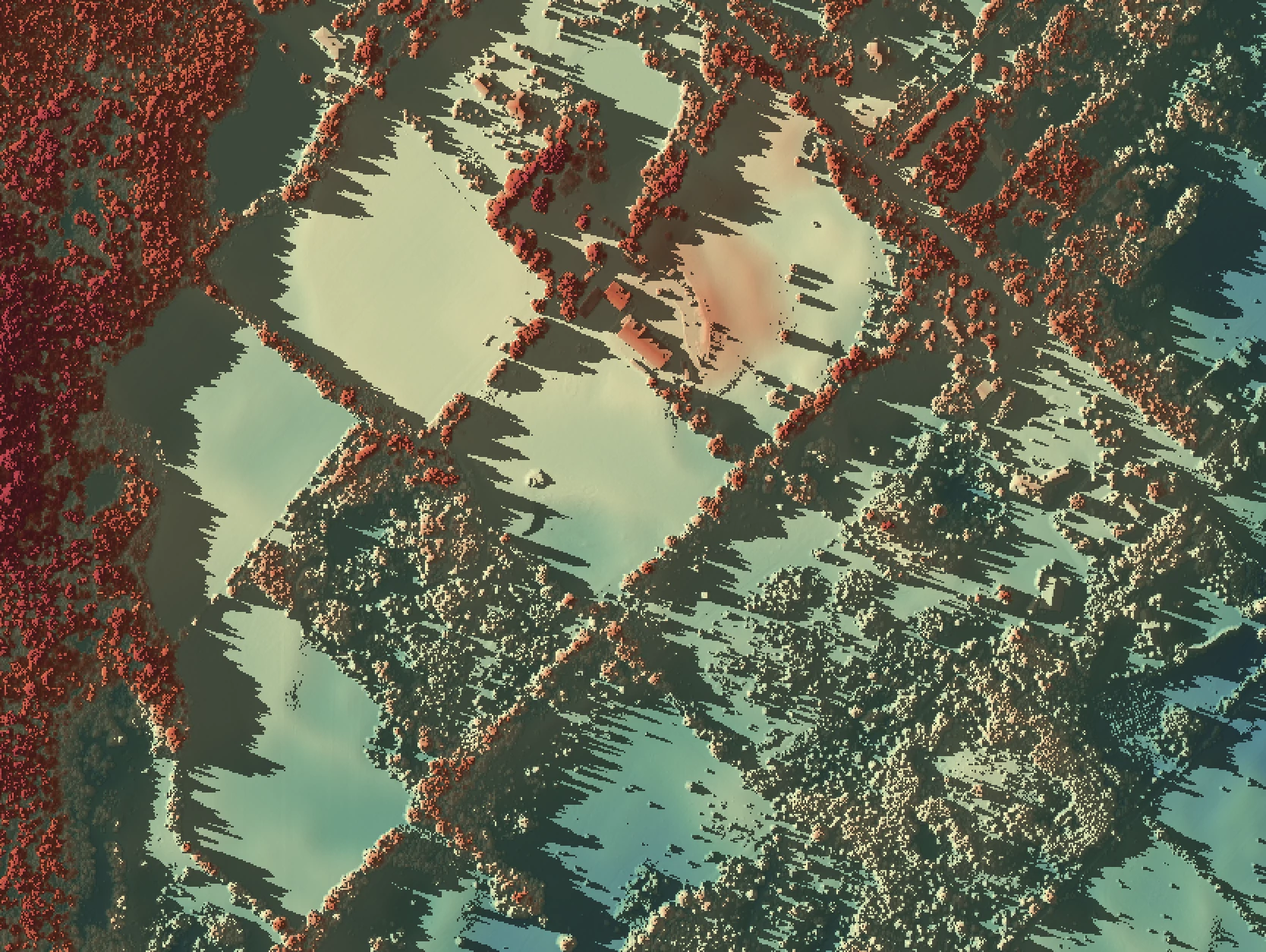

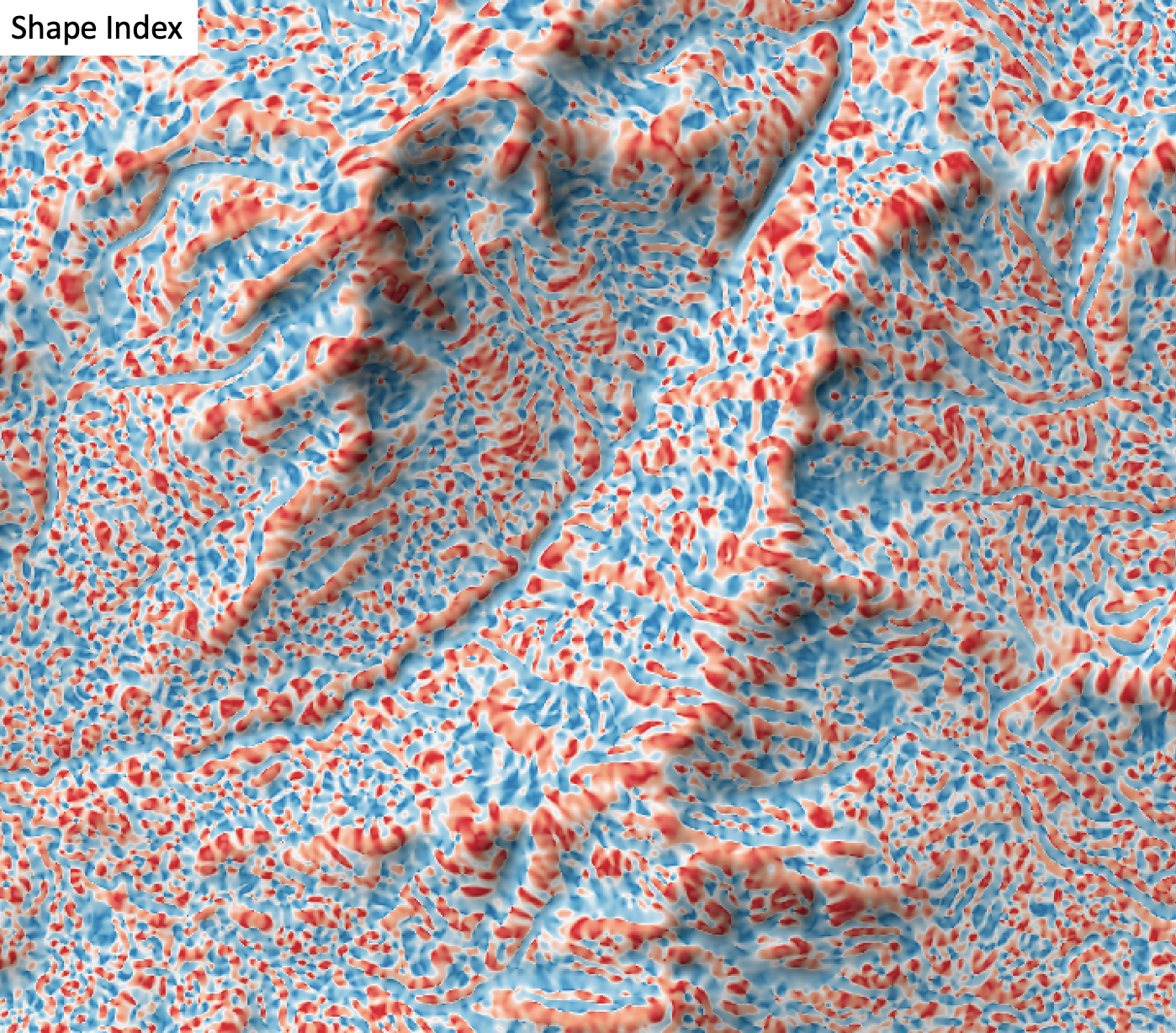

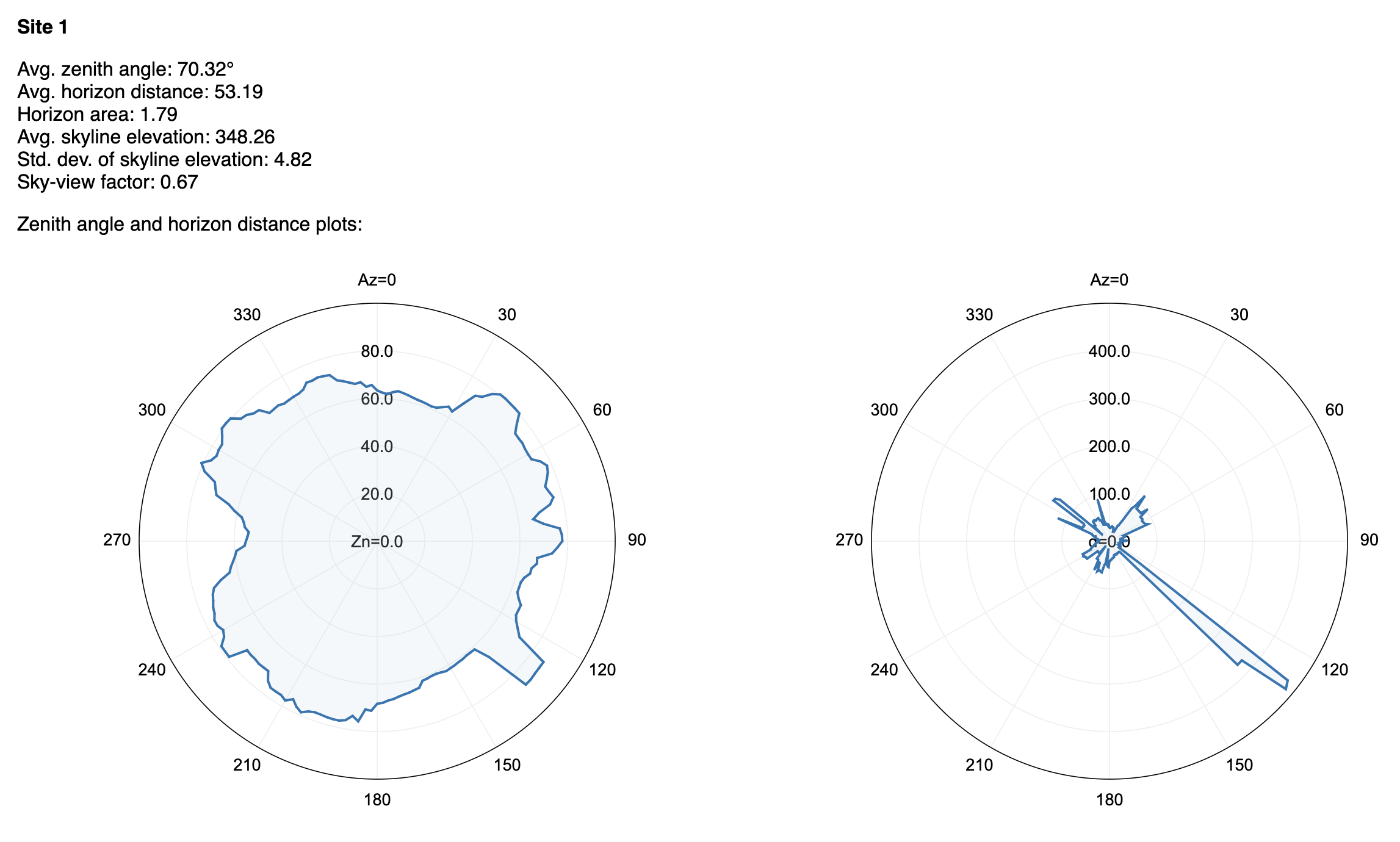

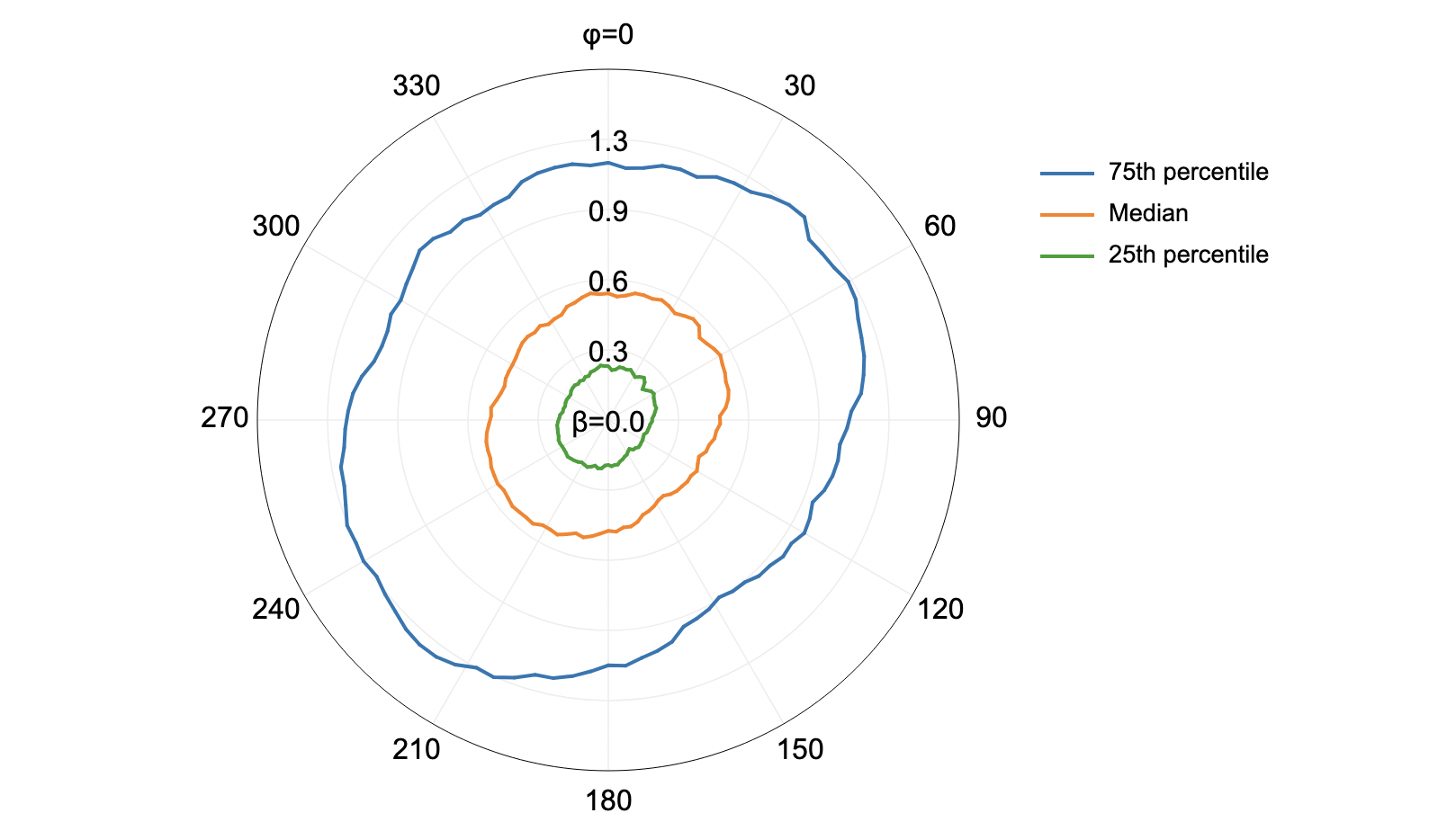

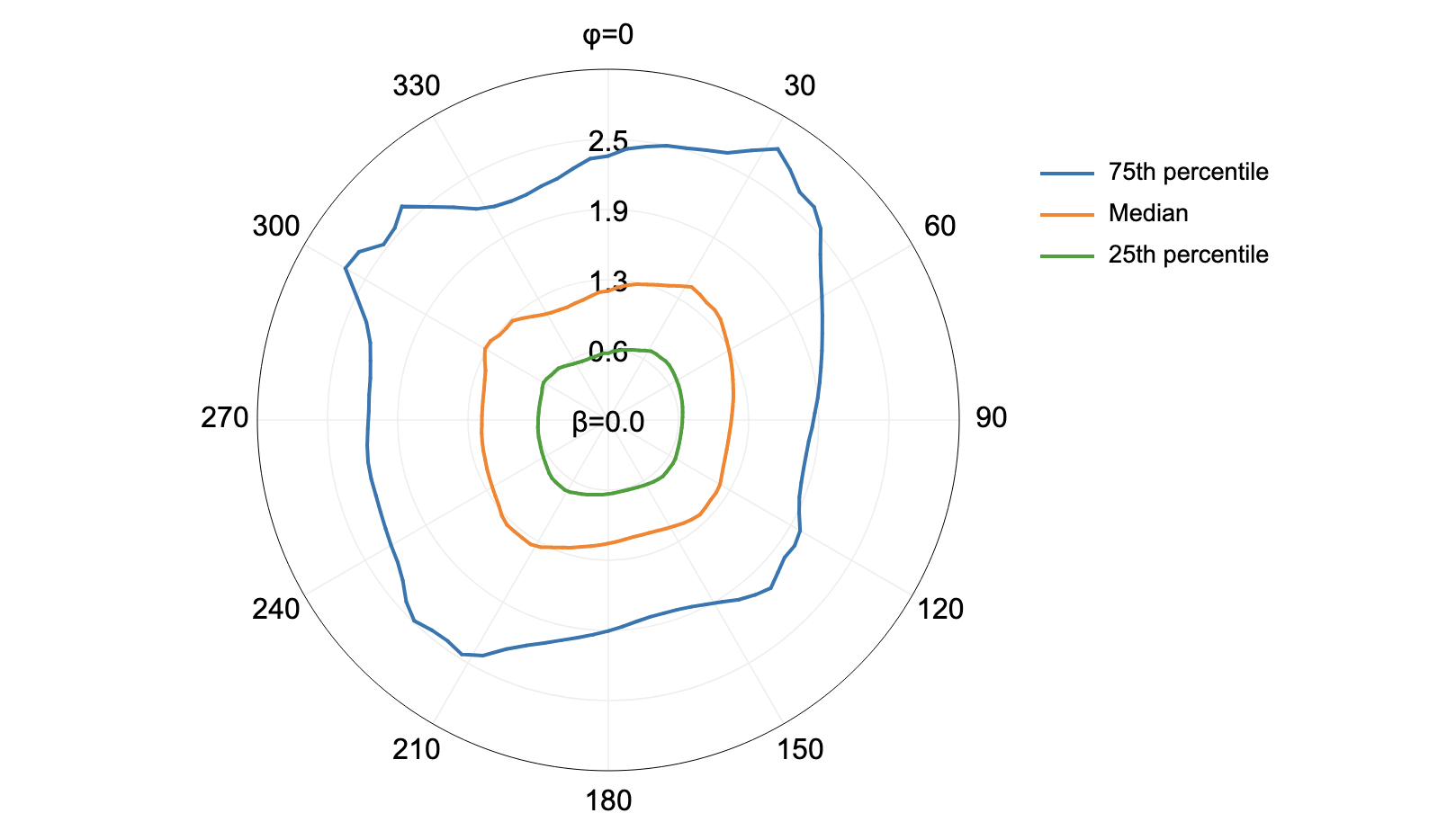

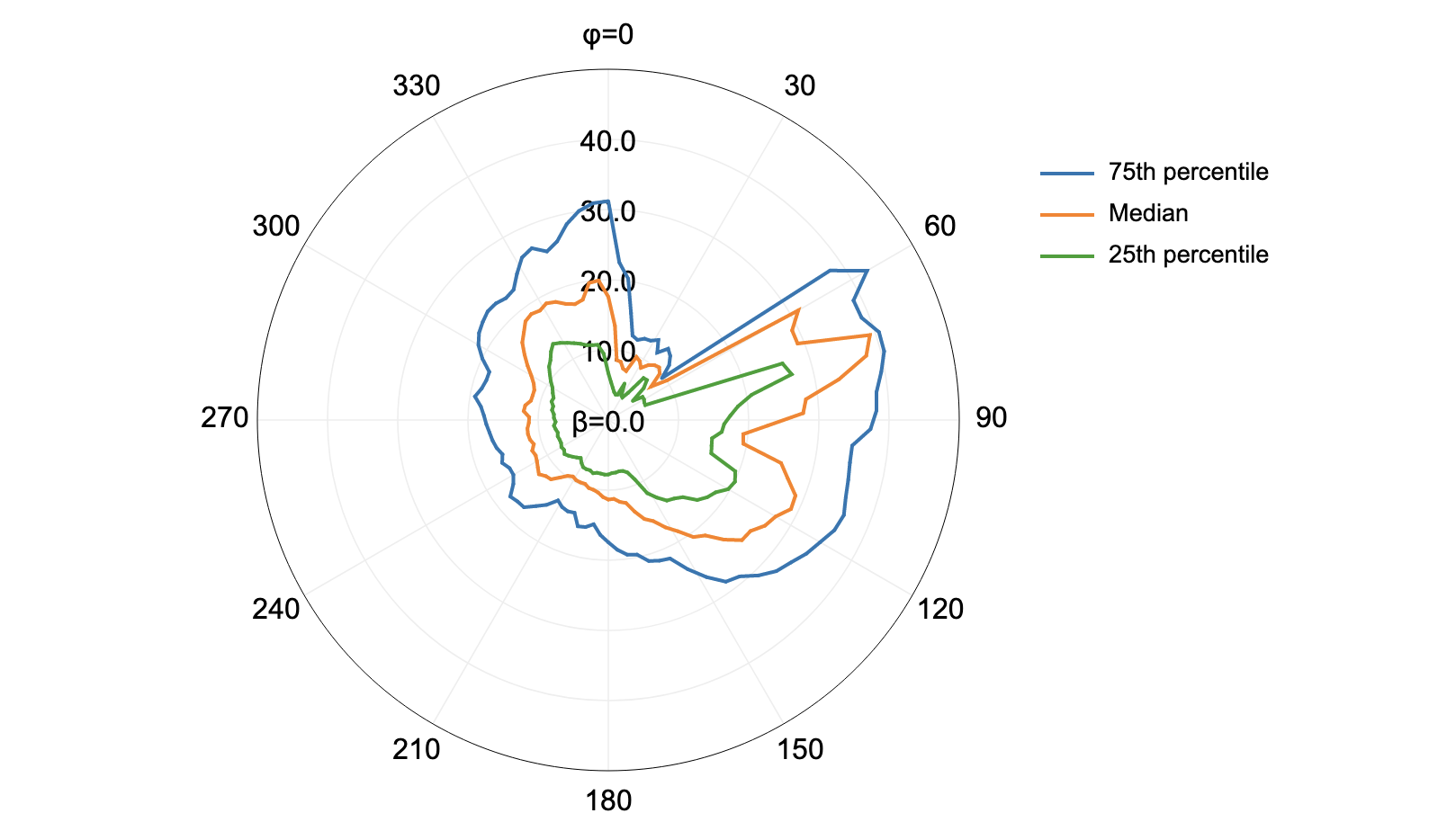

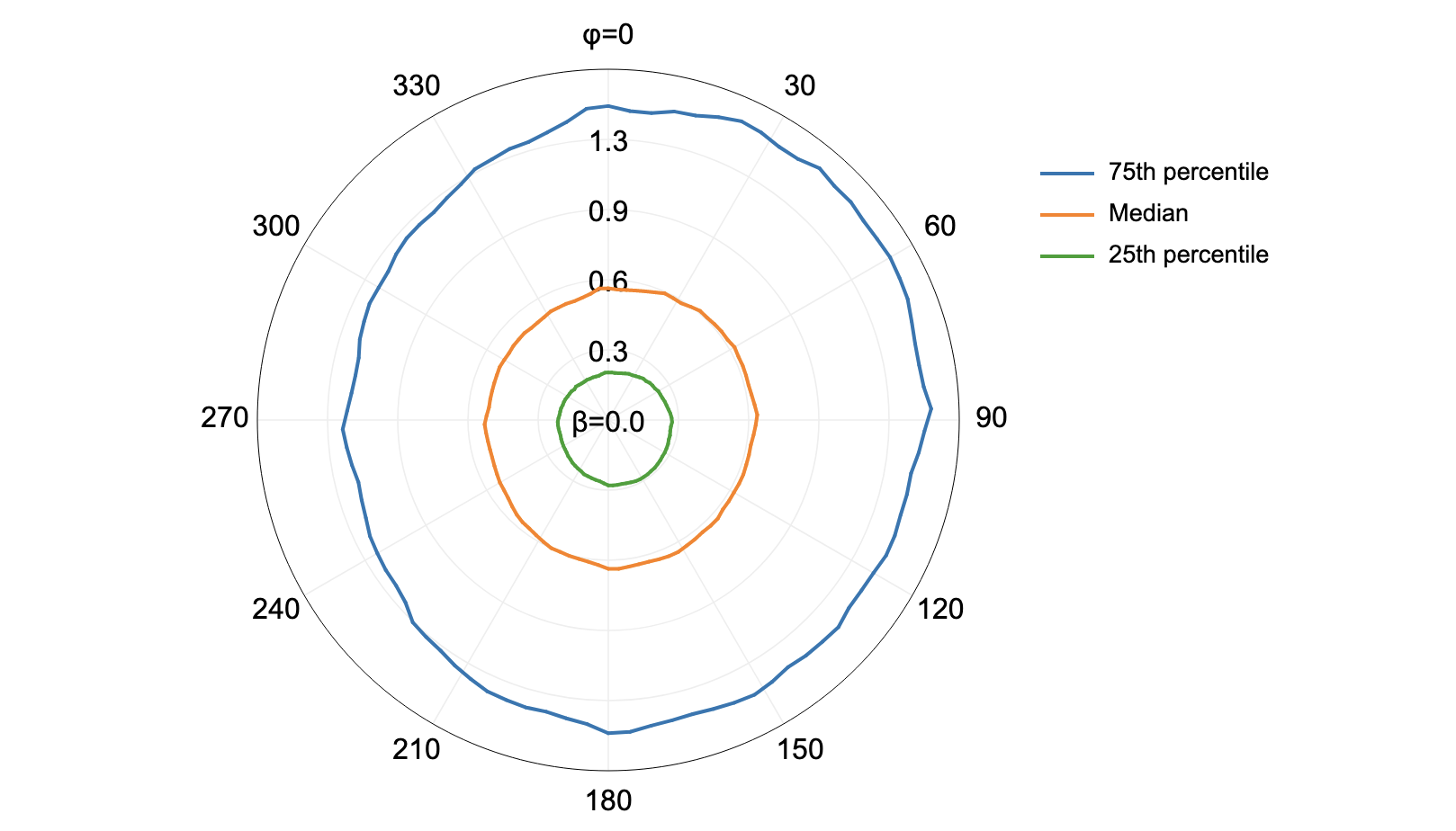

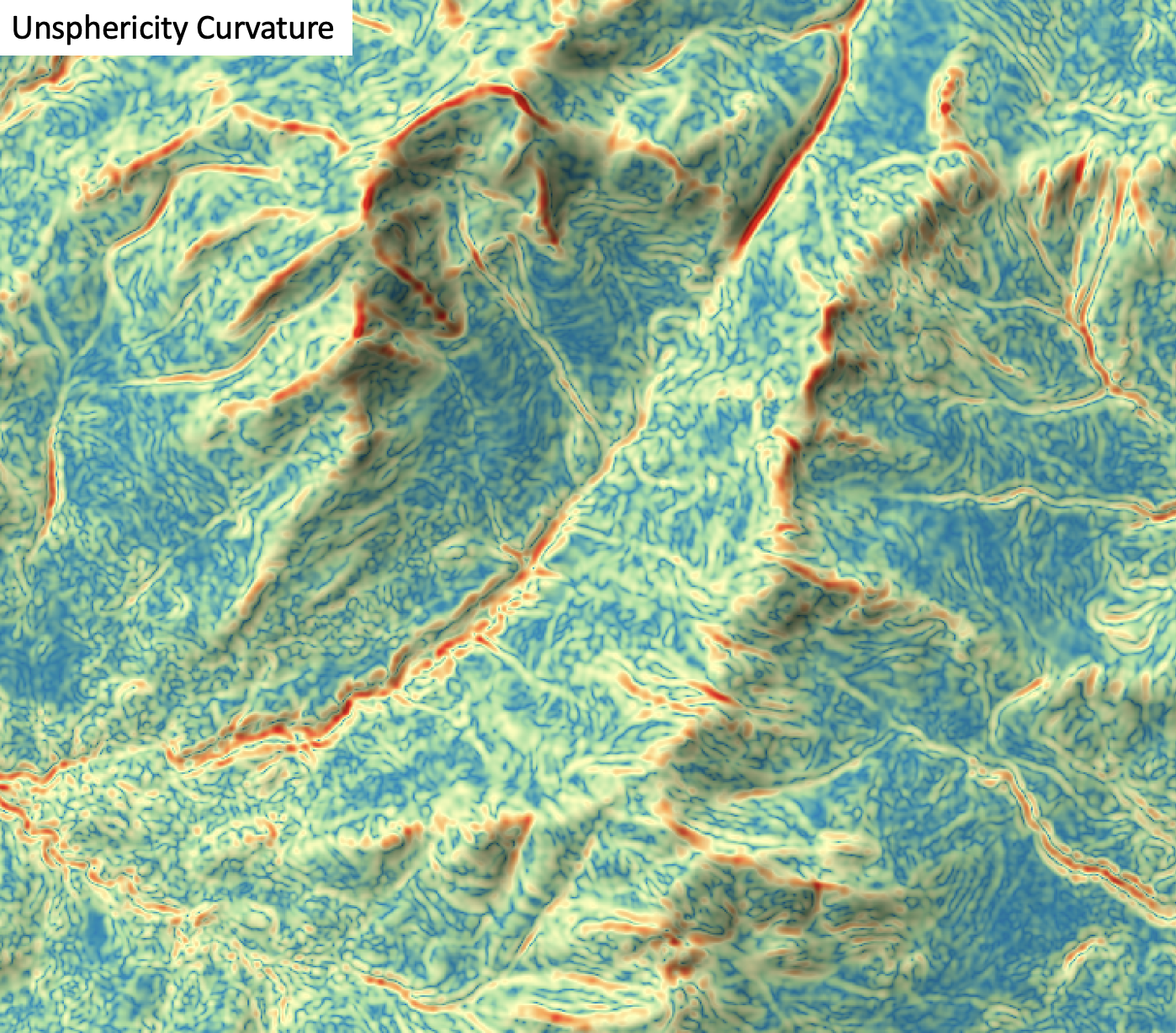

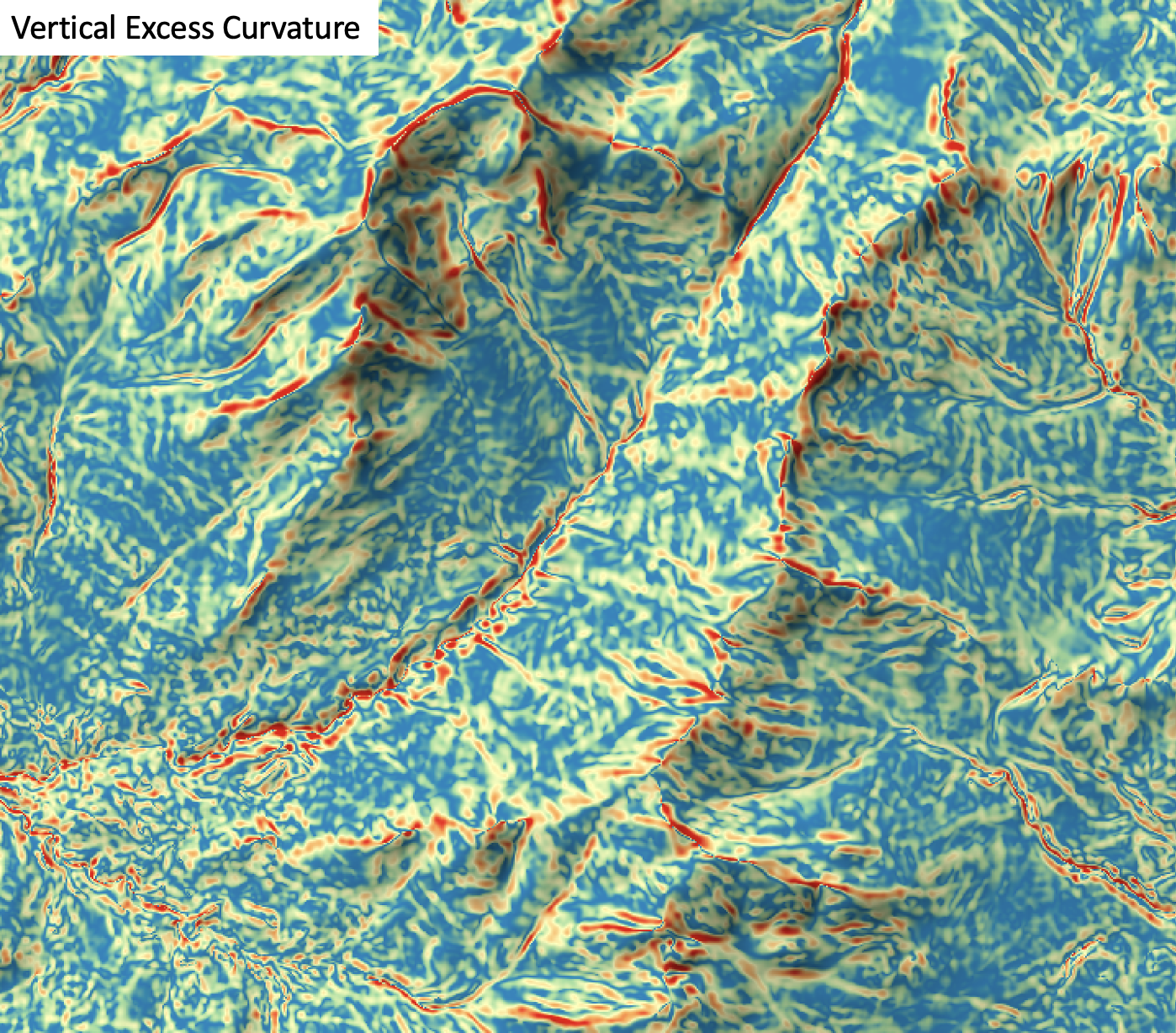

Description